In my last blog post, I described a tool (CommonsTool) that I created for uploading art images to Wikimedia Commons. One of the features of that Python script was to create Structured Data on Commons (SDoC) statements about the artwork being uploaded, such as "depicts" (P180), "main subject" (P921), and "digital representation of" (P6243), which are necessary to "magically" populate the Commons page with extensive metadata about the artwork from Wikidata. The script also added "creator" (P170) and "inception" (P571) statements, which are important for providing the required attribution when the work is under copyright.

Structured Data on Commons "depicts" statements

These properties serve important roles, but one of the key purposes of SDoC is to help potential users of a media item find it by providing richer metadata about what is depicted in the media. SDoC depicts statements go into the data that is indexed by the Commons search engine, which otherwise depends primarily on words present in the filename. My CommonsTool script does write one "depicts" statement (that the image depicts the artwork itself), and that's important for the semantics of understanding what the media item represents. However, from the standpoint of searching, that single depicts statement doesn't do much to improve discovery, since the artwork title in Wikidata is probably similar to the filename of the media item, and neither necessarily describes what is depicted IN the artwork.

Of course, one can add depicts statements manually, and there are also some tools that can help with the process. But if you aspire to add multiple depicts statements to hundreds or thousands of media items, this could be very tedious and time-consuming. If we are clever, we can take advantage of the fact that Structured Data on Commons is actually just another instance of a wikibase. So generally, any tools that make it easier to work with a wikibase can also make it easier to work with Wikimedia Commons.

In February, I gave a presentation about using VanderBot (a tool that I wrote to write data to Wikidata) to write to any wikibase. As part of that presentation, I put together some information about how to use VanderBot to write statements to SDoC using the Commons API, and how to use the Wikimedia Commons Query Service (WCQS) to acquire data programmatically via Python. In this blog post, I will highlight some of the key points about interacting with Commons as a wikibase and link out to the details required to actually do the interacting.

Media file identifiers (M IDs)

Wikimedia Commons media files are assigned a unique identifier that is analogous to the Q IDs used with Wikidata items. They are known as "M IDs" and they are required to interact with the Commons API or the Wikimedia Commons Query Service programmatically as I will describe below.

It is not particularly straightforward to find the M ID for a media file. The easiest way is probably to find the Concept URI link in the left menu of a Commons page, right-click on the link, and then paste it somewhere. The M ID is the last part of that link. Here's an example: https://commons.wikimedia.org/entity/M113161207 . If the M ID for a media file is known, you can load its page using a URL of this form.

If you are automating the upload process as I described in my last post, CommonsTool records the M ID when it uploads the file. I also have a Python function that can be used to get the M ID from the Commons API using the media filename.
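What follows is a minimal sketch of the idea rather than that function itself. It assumes that an M ID is the letter M followed by the page ID of the file's Commons page, and that the standard MediaWiki `action=query` request returns that page ID. The names `build_query_url`, `extract_mid`, and `get_mid` are made up for this illustration.

```python
import json
import urllib.parse
import urllib.request

API_URL = 'https://commons.wikimedia.org/w/api.php'


def build_query_url(filename):
    """Build a MediaWiki API URL that looks up the page for a media filename."""
    params = {'action': 'query', 'titles': 'File:' + filename, 'format': 'json'}
    return API_URL + '?' + urllib.parse.urlencode(params)


def extract_mid(response):
    """Return the M ID from a decoded API response, or None if the file is missing."""
    pages = response['query']['pages']
    for page_id, page in pages.items():
        # Missing pages are reported with a negative key and a "missing" field.
        if 'missing' in page or int(page_id) < 0:
            return None
        # Assumption: the M ID is "M" followed by the page ID.
        return 'M' + str(page['pageid'])
    return None


def get_mid(filename, user_agent):
    """Query the Commons API over the network and return the M ID for the file."""
    request = urllib.request.Request(
        build_query_url(filename), headers={'User-Agent': user_agent}
    )
    with urllib.request.urlopen(request) as response:
        return extract_mid(json.load(response))
```

Splitting the lookup into a URL builder and a response parser keeps the network call in one small function, so the parsing logic can be checked without contacting the API.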

Properties and values in Structured Data on Commons come from Wikidata

Structured Data on Commons does not maintain its own system of properties. It exclusively uses properties from Wikidata, identified by P IDs. Similarly, the values of SDoC statements are nearly always Wikidata items identified by Q IDs (with dates being an exception). So one could generally represent an SDoC statement (subject property value) like this:

MID PID QID.

Captions

Captions are a feature of Commons that allows multilingual captions to be applied to media items. They show up under the "File information" tab.

Writing statements to the Commons API with VanderBot

VanderBot uses tabular data (spreadsheets) as a data source when it creates statements in a wikibase. One key piece of required information is the Q ID of the subject item that the statements are about and that is generally the first column in the table. When writing to Commons, the subject M ID is substituted for a Q ID in the table.

Statement values for a particular property are placed in one column in the table. Since all of the values in a column are assumed to be for the same property, the P ID doesn't need to be specified as data in the row. VanderBot just needs to know what P ID is associated with that column and that mapping of column with property is made separately. So at a minimum, to write a single kind of statement to Commons (like Depicts), VanderBot needs only two columns of data (one for the M ID and one for the Q ID of the value of the property).

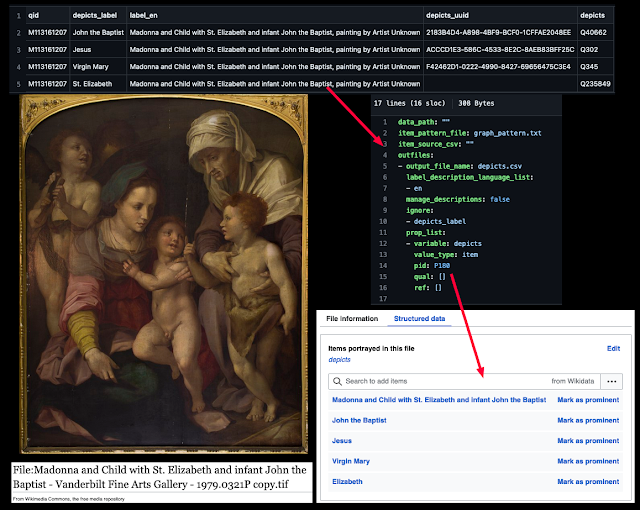

Here is an example of a table with depicts data to be uploaded to Commons by VanderBot:

The qid column contains the subject M ID identifiers (for this media file). The depicts column contains the Q IDs of the values (the things that are depicted in the media item). The other three columns serve the following purposes:

- depicts_label is ignored by the script. It's just a place to put the label of the otherwise opaque Q ID for the depicted item so that a human looking at the spreadsheet has some idea about what's being depicted.

- label_en is the language-tagged caption/wikibase label. VanderBot has an option either to overwrite the existing label in the wikibase with the value in the table or to ignore the label column and leave the label in the wikibase unchanged. In this example, we are not concerning ourselves with editing the captions, so we will use the "ignore" option. But if one wanted to add or update captions, VanderBot could be used for that.

- depicts_uuid stores the unique statement identifier after the statement is created. It is empty for statements that have not yet been uploaded.
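As a rough illustration of how such a table could be generated rather than typed by hand, here is a sketch that builds the CSV with Python's standard csv module. The column order, the use of one row per depicts statement, and the empty label_en and depicts_label cells are assumptions made for this example, and the function names are hypothetical.

```python
import csv
import io

# Column names described above; the order is an assumption.
FIELDNAMES = ['qid', 'label_en', 'depicts', 'depicts_label', 'depicts_uuid']


def build_depicts_rows(mid, depicted_qids):
    """Make one row per depicts statement for a single media item."""
    return [
        {
            'qid': mid,           # subject M ID goes in the qid column
            'label_en': '',       # left empty here; captions are not edited
            'depicts': qid,       # Q ID of the thing depicted
            'depicts_label': '',  # optional human-readable note, ignored by the script
            'depicts_uuid': '',   # empty until the statement has been written
        }
        for qid in depicted_qids
    ]


def rows_to_csv(rows):
    """Serialize the rows as CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDNAMES, lineterminator='\n')
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


rows = build_depicts_rows('M113161207', ['Q302', 'Q345', 'Q40662', 'Q235849'])
csv_text = rows_to_csv(rows)
print(csv_text)
```

In practice you would fill in depicts_label with something readable so that a person reviewing the spreadsheet can tell what each Q ID refers to.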

I mentioned before that the connection between the property and the column that contains its values was made separately. This mapping is done in a YAML file that describes the columns in the table:

The details of this file structure are given elsewhere, but a few key points are apparent. The depicts_label column is designated as ignored. In the properties list, the header of a column is given as the value of the variable key, which is depicts in this example. That column has item as its value type and P180 as its property.

As a part of the VanderBot workflow, this mapping file is converted into a JSON metadata description file and that file along with the CSV are all that are needed by VanderBot to create the SDoC depicts statements.

If you have used VanderBot to create new items in Wikidata, you will find that writing to Commons is more restrictive than what you are used to. When writing to Wikidata, if the Q ID column for a row in a CSV is empty, VanderBot will create a new item, and if it's not, it edits an existing one. Creating new items directly via the API is not possible in Commons, because new items in the Commons wikibase are only created as a result of media uploads. So when VanderBot interacts with the Commons API, the qid column must contain an existing M ID.

After the SDoC statements are written, they will show up under the "Structured data" tab for the media item, like this:

Notice that the Q IDs for the depicts values have been replaced by their labels.

This is a very abbreviated overview of the process and is intended to make the point that once you have the system set up, all you need to write a large number of SDoC depicts statements is a spreadsheet with a column for the M IDs of the media items and a column with the Q IDs of what is depicted in each media item. There are more details, with linkouts to how to use VanderBot to write to Commons, on a webpage that I made for the Wikibase Working Hour presentation.

Acquiring Structured Data on Commons from the Wikimedia Commons Query Service

A lot of people know about the Wikidata Query Service (WQS), which can be used to query Wikidata using SPARQL. Fewer people know about the Wikimedia Commons Query Service (WCQS) because it's newer and interests a narrower audience. You can access the WCQS at https://commons-query.wikimedia.org/ . It is still under development and is a bit fragile, so it is sometimes down or undergoing maintenance.

If you are working with SDoC, the WCQS is a very effective way to retrieve information about the current state of the structured data. For example, it's a very simple query to discover all media items that depict a particular item, as shown in the example below. There are quite a few examples of queries that you can run to get a feel for how the WCQS might be used.
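To give a sense of what such a query looks like, here is a small sketch that builds the SPARQL text for "all media items that depict a given item". The helper name `build_depicts_query` and the variable name `?file` are choices made for this example. The query string it produces can be pasted into the WCQS query box or sent from a script.

```python
import re

# WCQS queries need these prefixes declared explicitly.
PREFIXES = '''PREFIX wd: <http://www.wikidata.org/entity/>
PREFIX wdt: <http://www.wikidata.org/prop/direct/>
'''


def build_depicts_query(qid):
    """Return a SPARQL query for media items with a depicts (P180) value of qid."""
    # Reject anything that is not a plain Q ID before placing it in the query text.
    if not re.fullmatch(r'Q[1-9][0-9]*', qid):
        raise ValueError('not a Q ID: ' + repr(qid))
    return (
        PREFIXES
        + 'SELECT DISTINCT ?file WHERE {\n'
        + '  ?file wdt:P180 wd:' + qid + '.\n'
        + '}'
    )


query_string = build_depicts_query('Q302')
print(query_string)
```

Each result row binds `?file` to the URI of a media item, and the M ID is the last part of that URI.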

It is actually quite easy to query the Wikidata Query Service programmatically, but there are additional challenges to using the WCQS because it requires authentication. I have struggled through reading the developer instructions for accessing the WCQS endpoint via Python, and the result is a set of functions and example code that you can use to query the WCQS in your Python scripts. One important warning: the authentication is done by setting a cookie on your computer. So you must be careful not to save this cookie in any location that will be exposed, such as in a GitHub repository. Anyone who gets a copy of this cookie can act as if they were you until the cookie is revoked. To avoid this, the script saves the cookie in your home directory by default.
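The general pattern of keeping a secret outside of any project folder can be sketched as follows. This is not the actual implementation of the retrieve_cookie_string() function used below. The file name wcqs_cookie.txt and both function names are hypothetical, and the permission change only has its full effect on POSIX systems.

```python
import os
from pathlib import Path

# Hypothetical default location: a file in the home directory, outside any repository.
DEFAULT_COOKIE_PATH = Path.home() / 'wcqs_cookie.txt'


def save_cookie_string(cookie, path=DEFAULT_COOKIE_PATH):
    """Write the cookie value to a file and restrict it to the current user."""
    path = Path(path)
    path.write_text(cookie.strip() + '\n', encoding='utf-8')
    # Owner read/write only (on POSIX systems).
    os.chmod(path, 0o600)


def load_cookie_string(path=DEFAULT_COOKIE_PATH):
    """Read the cookie value back, dropping surrounding whitespace."""
    return Path(path).read_text(encoding='utf-8').strip()
```

Because the path is a parameter, a script can point to a different location when needed, but the default stays out of the working directory where it might be committed by accident.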

The code for querying is very simple with the functions I provide:

import json

# init_session(), retrieve_cookie_string(), and Sparqler come from the functions I provide

user_agent = 'TestAgent/0.1 (mailto:username@email.com)' # put your own script name and email address here

endpoint_url = 'https://commons-query.wikimedia.org/sparql'

session = init_session(endpoint_url, retrieve_cookie_string())

wcqs = Sparqler(useragent=user_agent, endpoint=endpoint_url, session=session)

query_string = '''PREFIX sdc: <https://commons.wikimedia.org/entity/>

PREFIX wd: <http://www.wikidata.org/entity/>

PREFIX wdt: <http://www.wikidata.org/prop/direct/>

SELECT DISTINCT ?depicts WHERE {

sdc:M113161207 wdt:P180 ?depicts.

}'''

data = wcqs.query(query_string)

print(json.dumps(data, indent=2))

The query is set in the multi-line string assigned in the line that begins query_string =. One thing to notice is that in WCQS queries, you must define the prefixes wdt: and wd: using PREFIX statements in the query prologue. Those prefixes can be used in WQS queries without making PREFIX statements. In addition, you must define the Commons-specific sdc: prefix and use it with M IDs.

This particular query simply retrieves all of the depicts statements that we created in the example above for M113161207 . The resulting JSON is

[ { "depicts": { "type": "uri", "value": "http://www.wikidata.org/entity/Q103304813" } }, { "depicts": { "type": "uri", "value": "http://www.wikidata.org/entity/Q302" } }, { "depicts": { "type": "uri", "value": "http://www.wikidata.org/entity/Q345" } }, { "depicts": { "type": "uri", "value": "http://www.wikidata.org/entity/Q40662" } }, { "depicts": { "type": "uri", "value": "http://www.wikidata.org/entity/Q235849" } }]

The Q IDs can easily be extracted from these results using a list comprehension:

qids = [ item['depicts']['value'].split('/')[-1] for item in data ]

resulting in this list:

['Q103304813', 'Q302', 'Q345', 'Q40662', 'Q235849']

Comparison with the example table shows the same four Q IDs that we wrote to the API, plus the depicts value for the artwork (Q103304813) that was created by CommonsTool when the media file was uploaded. When adding new depicts statements, having this information about the ones that already exist can be critical to avoid creating duplicate statements.
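One simple way to use that information is to filter a list of candidate values against the Q IDs that the query returned before putting them into the spreadsheet. The sketch below shows the idea. The function name is made up, and Q5 is only a placeholder standing in for a value that has not been written yet.

```python
def new_depicts_values(candidate_qids, existing_qids):
    """Return the candidates that are not already present, keeping their order."""
    seen = set(existing_qids)
    new_qids = []
    for qid in candidate_qids:
        if qid not in seen:
            new_qids.append(qid)
            seen.add(qid)  # also guards against repeats within the candidates
    return new_qids


# Q IDs retrieved from the WCQS for M113161207 in the example above
existing = ['Q103304813', 'Q302', 'Q345', 'Q40662', 'Q235849']

# Candidate values; Q5 is a placeholder for a new value
candidates = ['Q302', 'Q40662', 'Q5']

to_write = new_depicts_values(candidates, existing)
print(to_write)  # ['Q5']
```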

For more details about how the code works, see the informational web page I made for the Wikibase Working Hour presentation.

Conclusion

I hope that this code will help make it possible to ramp up the rate at which we can add depicts statements to Wikimedia Commons media files. In the Vanderbilt Libraries, we are currently experimenting with using Google Cloud Vision to do object detection and we would like to combine that with artwork title analysis to be able to partially automate the process of describing what is depicted in the Vanderbilt Fine Arts Gallery works whose images have been uploaded to Commons. I plan to report on that work in a future post.