This is the fourth part of a series of posts intended to help you manage and upload data to Wikidata using spreadsheets. It assumes that you have already read the first three posts and that you have tried the "do it yourself" experiments to get a better understanding of how the system works. However, it's possible that the only thing that you want to do is to download data from Wikidata into a spreadsheet, and if that is the case, you could get something out of this post without reading the others. The script that I will describe does require a configuration file whose structure is described in the second post, so you'll either need to read that post (if you like handholding) or read the more technical specifications here to know how to construct or hack that configuration file.

If you are the kind of person who prefers to just look at the specs and play with software, then skip this whole post and go to the documentation for the download script here. Good luck! Come back and do the walk-through below if you can't figure it out.

The latter sections of the post do show how to use the downloaded data to carry out easy-to-implement improvements to item records. Those sections depend on an understanding of the earlier posts. So if that kind of editing beyond simply downloading data interests you, then you should read the whole series of posts.

Configuring and carrying out the download

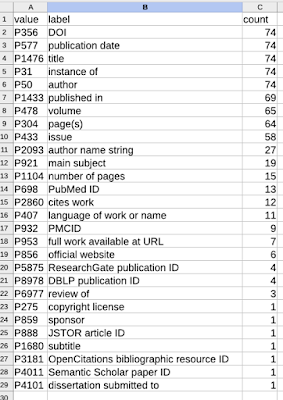

Towards the end of the last post, I created a JSON configuration file for metadata about journal articles. The configuration specified the structure of three CSV files. The first CSV (articles.csv) was intended to have one row per article and contained headers for statements about the following properties: "instance of", DOI, date of publication, English title, journal, volume, page, and issue. The other two CSVs were expected to have multiple rows per article since they contained data about author items (authors.csv) and author name strings (author_strings.csv). Since articles can have one-to-many authors, these two tables could be expected to have zero-to-many rows per article.

For the purposes of testing the download script, you can just use the JSON configuration file as-is. Download it, name it config.json, and put it in a new directory that you can easily navigate to from your home folder. We are going to specify the group of items to be downloaded by designating a graph pattern, so edit the fourth line of the file using a text editor so that it says

"item_pattern_file": "graph_pattern.txt",

You can screen for articles using any kind of graph pattern that you know how to write, but if you don't know what to use, you can use this pattern:

?qid wdt:P50 ?person. # work, author, person

Copy these two lines and save them in a plain text file called graph_pattern.txt in the same directory as the configuration file. The comments after the hash (#) mark will be ignored, so you can leave them off if you want. I chose the first triple pattern (people affiliated with Vanderbilt Libraries) because there is a relatively small number of people involved. You can use some other triple pattern to define the people, but if it designates a large number of people, the file of downloaded journal data may be large. Whatever pattern you use, the variable ?qid must be used to designate the works.

The last thing you need is a copy of the Python script that does the downloading. Go to this page and download the script into the same directory as the other two files.

Open your console software (Terminal on Mac or Command Prompt on Windows) and navigate to the directory where you put the files. Enter

python acquire_wikidata_metadata.py

(or python3 if your installation requires that).

The output should be similar to the screenshot above.

Examining the results

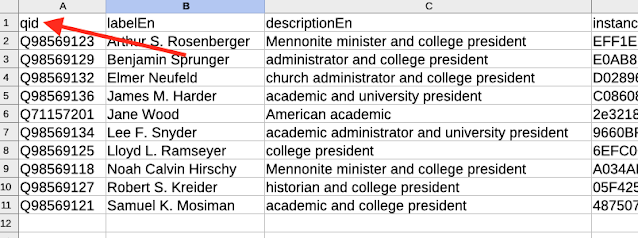

Start by opening the authors.csv file with your spreadsheet software (LibreOffice Calc recommended, Excel OK). This file should be pretty much as expected. There is a label_en column that is there solely to make it easier to interpret the subject Q IDs -- that column is ignored when the spreadsheet is processed by VanderBot. In this case, every row has a value for the author property because we specified works that had author items in the graph pattern we used to screen the works.

The author_strings.csv file should also be close to what you expect, although you might be surprised to see that some rows don't have any author strings. Those are cases where all of the authors of that particular work have been associated with Wikidata items. The script always generates at least one row per subject item because it's very generic. It generally it leaves a blank cell for every statement property that doesn't have a value in case you want to add it later. Because there is only one statement property in this table, a missing value makes the row seem a bit weird because the whole row is then empty except for the Q ID.

When you open the articles.csv file, you may be surprised or annoyed to discover that despite what I said about intending for there to be only one row per article, many articles have two or even more rows. Why is this the case? If you scroll to the right in the table, you will see that in most, if not all, of the cases of multiple rows there is more than one value for instance of. If we were creating an item, we would probably just say that it's an instance of one kind of thing. But there is no rule saying that an item in Wikidata can't be an instance of more that one class. You might think that the article is a scholarly article (Q13442814) and I may think it's an academic journal article (Q18918145) and there is nothing to stop us from both making our assertions.

The underlying reason why we get these multiple rows is because we are using a SPARQL query to retrieve the results. We will see why in the next section. The situation would be even worse if there were more than one property with multiple values. If there were 3 values of instance of for the item and 2 values for the published date, we would get rows with every combination of the two, and end up with 3x2=6 rows for that article. That's unlikely, since I took care to select properties that (other than instance of) are supposed to only have a single value. But sometimes single-value properties are mistakenly given several values and we end up with a proliferation of rows.

An aside on SPARQL

It is not really necessary for you to understand anything about SPARQL to use this script, but if you are interested in understanding this "multiplier" phenomenon, you can read this section. Otherwise, skip to the next section.

Let's start by looking at the page of Carl H. Johnson, a researcher at Vanderbilt (Q28530058).

As I'm writing this (2021-03-16), we can see that Carl is listed as having two occupations: biologist and researcher. That is true, his work involves both of those things. He is also listed as having been educated at UT Austin and Stanford. That is also true, he went to UT Austin as an undergrad and Stanford as a grad student. We can carry out the following SPARQL query to ask about Carl's occupation and education.

?item wdt:P106 ?occupation.

?item wdt:P69 ?educatedAt.

BIND(wd:Q28530058 AS ?item)

SERVICE wikibase:label { bd:serviceParam wikibase:language "[AUTO_LANGUAGE],en". }

}

You can run the query yourself here, although if the information about Carl has changed since I wrote this post, you could get different results.

The last line of the query ("SERVICE...") is some "magic" that the Wikidata Query service does to automatically generate labels for variables. If you are asking about a variable named "?x" and you also ask about the variable "?xLabel", with the "magic" line the Query Service will automatically generate "?xLabel" for you even if you don't define it as part of the graph pattern. I've used this method to generate labels for the three variables I'm asking about in the first line: ?item, ?occupation, and ?educatedAt.

The second and third lines of the query:

?item wdt:P69 ?educatedAt.

are the ones that are actually important. They restrict the value of the variable ?occupation to be the occupation (P106) of the item and restrict the value of the variable ?educatedAt to be the place where the item was educated at (P69).

The fourth line just forces the item to be Carl H. Johnson (Q28530058). If we left that line out, we would get thousands or millions of results: anyone in Wikidata who had an occupation and was educated. (Actually, the query would probably time out. Try it and see what happens if you want.)

So here's what happens when we run the query:

When we carry out a SPARQL query, we are asking the question: what combinations of variable values satisfy the graph pattern that we have specified? The query binds those combinations to the variables and then displays the combinations in the results. If we think about these combinations, we can see they all satisfy the pattern that we required:

- Carl has occupation researcher and went to school at UT Austin.

- Carl has occupation researcher and went to school at Stanford.

- Carl has occupation biologist and went to school at UT Austin.

- Carl has occupation biologist and went to school at Stanford.

There are four ways we can bind values to the variables that are true and satisfy the pattern we required. We cannot ask SPARQL to read our mind and guess there was a special combination that we intended, or that there was one combination that we were more interested in than another.

This behavior sometimes produces results for SPARQL queries that seem unexpected because you get more results than you intend. But if you ask yourself what you really required in your graph pattern, you can usually figure out why you got the result that you did.

Restricting the combinations of values in the table

If you paid close attention to the output of the script, you will have noticed that for each of the three CSVs it said that there were no pre-existing CSVs. After the script runs the SPARQL query to collect the data from Wikidata, it tries to open the files. If it can't open the files, it creates new files and saves all of the combinations of values that it found. However, if the files already exist, it compares the data from the query to the data already in the CSV and ignores combinations of values that don't match what's already there.

That means that if we are annoyed about all of the possible combinations of values initially written to the table, we can delete lines that contain combinations that we don't care about. For example, if one row of the table says that the article is a scholarly article (Q13442814) and another says its an academic journal article (Q18918145), I can delete the scholarly article row and only pay attention to row containing the statement that it is an academic journal article. In future downloads, the scholarly article assertion will be ignored by the script. It is a pain to have to manually delete the duplicate lines, but once you've done so, you shouldn't have to do it again if you try downloading more data later. You will have only a single line to deal with per existing item.

The situation is actually a bit more complicated than I just described. If you are interested in the details of how the script screens combinations of variables that it gets from the Query Service, you can look at the comments in the script starting in line 510. If not, you can assume that the script does the best it can and usually does the screening pretty well. It is still good to examine the CSV visually after doing a fresh download to make sure nothing weird happened.

Repeating the download to pick up new data

Based on what I said in the previous section, you should have noticed that you can run this script repeatedly if you want to pick up new data that has been added to the items since the last time you ran the script. That means that if you are adding data to the downloaded CSV as a way to make additions to Wikidata via the API, you first can check for updated information to make sure that you don't accidentally add duplicate statements when you use a stale CSV. VanderBot assumes that when you fill in empty cells, that represents new data to be written. So you or someone else has actually made the same new statement using the Web interface since the last time you ran VanderBot to do an upload, you risk creating duplicate statements.

There are several important things that you need to keep in mind about updating existing CSVs prior to adding and uploading new data:

1. The screening to remove duplicate rows is only done for preexisting items. Any new items downloaded in the update will need to be visually screened by a human for duplicates. It doesn't really hurt anything if you leave the duplicate rows -- all of their statements and references are preexisting and will have identifiers, so VanderBot will ignore them anyway. But you will probably eventually want to clean up the duplicates to make the spreadsheet easier to use in the future.

2. If there is only a single combination of values for an item (i.e. only a single row), the script will automatically replace any changed values with the new ones regardless of the preexisting state of that row. The screening of rows against the existing spreadsheet only happens when there are two rows with the same Q ID. So if somebody changed the wonderful, correct value that you have in your spreadsheet to something icky and wrong, running the update will change your local copy of the data in the spreadsheet to their icky and wrong value. On the other hand, if they have fixed an error and turned your data into wonderful, correct data, that will be changed in your local copy as well. The point here is that the script is dumb and cannot tell the difference between vandalism and crowd-sourced improvements of your local data. It just always updates your local data when there aren't duplicate rows. In a later post, we will talk about a system to detect changes before downloading them, so that you can make a decision about whether to allow the change to be made to your local copy of the data (i.e. the CSV).

3. If you have enabled VanderBot to write the labels and descriptions of existing items (using the --update option), it is very important that you download fresh values prior to using a stale CSV for writing data to the API. If you do not, then you will effectively revert any recently changed labels and descriptions back to whatever they were the last time you downloaded data or wrote to the API with that CSV. That would be extremely irritating to anyone (including YOU) who put a lot of work into improving labels and descriptions using the web interface and then had them all changed by the VanderBot script back to what they were before. So be careful!

Making additions to the downloaded data and pushing them to the API: Low hanging fruit

If you use this script to download existing data, it will not take you very long to realize that a lot of the data in Wikidata is pretty terrible. There are several common ways that data in Wikidata are terrible, and VanderBot can help you to improve the data with a lot less effort than doing a lot of manual editing using the web interface.

Changing a lot of descriptions at once

Many items in Wikidata were created by bots that had limited information about the items, and limited abilities to collect the information they were missing. The end result is that descriptions are often very poor. A very common example is describing a person as a "researcher". I believe that this happens because the person is an author of a research article, and since the bot knows nothing else about the person, it describes them as a "researcher". Since we are screening by a SPARQL query that establishes some criteria about the items, that criterion often will allow us to provide a better description. For example, if we are screening people by requiring that they be faculty in a chemistry department, and who have published academic research articles, we can safely improve their descriptions by calling them "chemistry researchers".

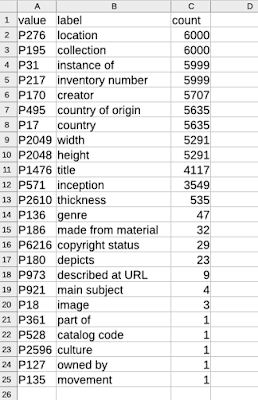

In the case of our example, there is an even more obvious problem: many of the items have no description at all. There is a very easy solution, since we have the instance of (P31) information about the items. All of the items in the screenshot above that are missing descriptions are instances of Q13442814.

I used the script (discussed in the previous post) for downloading information about values of statement properties to summarize all of the P31 values for the items in this group. So I know that all of these items with missing descriptions are instances of scholarly article. There may be better descriptions for those items, but at this point "scholarly article" is a much better description than no description at all.

One might argue that we aren't actually adding information to the items, given that our proposed description is simply re-stating the P31 value. That may be true, but the description is important because it shows up in the search results for an item, and the P31 value does not. In Wikidata, descriptions also play an important role in disambiguating items that have the same label, so it's best for all items to have a description.

I am going to fix all of these descriptions at once by simply pasting the text "scholarly article" in the description column for these items, then running VanderBot with the --update option set to allow. If you have not read the earlier posts, be aware that prior to writing to the API, you will need to create a metadata description file for the CSVs (discussed in the second post) and also download a copy of VanderBot from here into the directory where the CSV files are located. Run the API upload script using the command

python vanderbot.py --update allow --log log.txt

After it finished running, I deleted the three CSV files. After a little while I ran the download script again to see how things in Wikidata had changed. The results are in the screenshot above. (Note: there is a delay between when data are written to the API and when they are available at the Query Service, so the changes won't necessarily show up immediately. It can take between a few seconds and up to an hour for the changes to be transferred to the Query Service.) I was able to improve the descriptions of 23 items by about 30 seconds of copying and pasting. That would have probably taken me at least 10 or 15 minutes if I had looked up each item using the web interface and entered those descriptions manually.

Changing a lot of labels at once

Another situation where I can make a large number of improvements with very little effort is adding people item labels for other languages. Most names of people will be represented in the same way across all languages that use the Latin character set. So I can easily improve label coverage in non-English languages by just copying the English names and using them as labels in the other languages. This would be extremely labor-intensive if you had to look up each item and do the copying and pasting one item at a time. However, when the labels are in spreadsheet form, I can easily copy an entire column and paste it into another column.

In our Vanderbilt Fine Arts Gallery WikiProject, we put in a lot of work on either disambiguating artist name strings against Wikidata items, or creating new items for artists that weren't already there. As an end result, we now have a list of 1325 artists whose works are included in the gallery collection. I can use that list of 1325 Q IDs as a way to define a category of items to be included in a download using the acquire_wikidata_metadata.py script. To set up the download, I created a config.json file containing this JSON:

As you can see, I'm not concerned with any properties of the works items. I've simply listed the language codes for many languages that primarily use the Latin character set. The creators.csv file is my spreadsheet with the 1325 item identifiers in a column named qid. It defines the set of items I'm interested in. After running the acquire_wikidata_metadata.py script, the creators_out.csv spreadsheet looked like this:

There are several things worth noting. In most cases, when the label is available in non-English languages, it's exactly the same as the English label. This confirms my assertion that it's probably fine to just re-use the "English" names as labels in the other languages. There are a couple exceptions. Buckminster Fuller has variation in his labels because "Buckminster" is apparently his middle name. So I'm going to mostly leave that row alone -- he's famous enough that he's represented in most languages anyway. The Haverford Painter's name isn't really a name. It's more of a description applied as a label and it does vary from language to language. I'll just delete that row since I have no idea how to translate "Haverford Painter" into most of the languages.

The other interesting thing is that most of the names are represented in Dutch already. The reason is that there is a bot called Enderoobot which, among other things, automatically adds English people name labels as Dutch labels (see this edit for example). There are only a few missing Dutch labels to fill in. So I definitely should not just copy the entire English column of labels and paste it into the Dutch column.

Since the rows of the CSV are in alphabetical order by Q ID, the top of the spreadsheet contains mostly newer items with Q IDs over 100 million. In the lower part of the sheet where the Q IDs of less than 100 million are located, there are a lot more well-known artists that have labels in more languages. It would take more time than I want to spend right now to scrutinize the existing labels to see if it's safe to paste over them. So for now I'll limit my copying and pasting to the top of the spreadsheet.

After pasting the English labels into all of the other columns and filling in the few missing Dutch labels, I'm ready to write the new labels to Wikidata. I needed to run the convert_json_to_metadata_schema.py script to generate the metadata description file that VanderBot needs to understand the new creators_out.csv spreadsheet I've just created and edited (see my second post if you don't know about that). I'm now ready to run VanderBot using the same command I used earlier.

Using this method, I was able to add approximately 3500 multilingual labels with only about 30 seconds of time to copy and paste columns in the spreadsheet and about 10 minutes for VanderBot to write the new labels to the API. I can't even imagine how long that would take to do manually.

One nice thing is that there is only one interaction with the API per item, regardless of the number of different languages of labels that are changed. Since most of the time that VanderBot takes to do the writing is actually just sleeping 1.25 seconds per item (to avoid exceeding the maximum writing rate for bots without a bot flag), it's important to bundle as many data items per API interaction as possible.

When I check one of the artist's pages, I see now that it has labels in many languages instead of only English.

Although it would be more labor-intensive, the same process could be used for adding labels in non-Latin character sets. A native speaker could simply go down the rows and type in the labels in Chinese characters, Cyrillic, Greek, Arabic, or any other non-Latin character set in an appropriate column and run the script to add those labels as well.

Adding multiple references to an item at once

Despite the importance of references to ensuring the reliability of Wikidata, many (most?) statements do not have them. That's understandable when humans create the statements, since including references is time consuming (although less so if you use some of the gadgets that are available to streamline the process, like currentDate and DuplicateReferences). For bots, it's inexcusable. Most bots are getting their data automatically from some data source and they know what that data source is, so there is no reason for them to not add references other than the laziness of their developers.

We can't fix other people's bad behavior, but we can fix their missing references with minimal work if we have an easy way to acquire the information.

Q44943965 is an article that was created using QuickStatements. Some of the data about the item was curated manually and those statements have references. But most of the bot-created statements don't have any references and I'm too lazy to add them manually. Luckily, the article has a DOI statement near the bottom, so all I need to do is to click on it to verify that the information exists for the statements with missing references. As a reference URL, I'm going to use the HTTPS form of the DOI, https://doi.org/10.3233/SW-150203, which a human can click on to see the evidence that supports the statement.

This publication was in the CSVs from the first example in this post, so prior to writing the references, I deleted the CSVs and used the acquire_wikidata_metadata.py script download a fresh copy of the data.

I highlighted the row to make it easier to see and pasted the DOI URL into the doi_ref1_referenceUrl column. I typed in todays date into the doi_ref1_retrieved_val column in the required format: 2021-03-17.

To create the references for the other statements, I just needed to copy the DOI URL into all of the columns whose names end in _ref1_referenceUrl and today's date into all of the columns that end in _ref1_retrieved_val.

Once I finished that, I saved the CSV and ran VanderBot (I already had the metadata description file from earlier work). I saved the output into a log file so that I could look at it later.

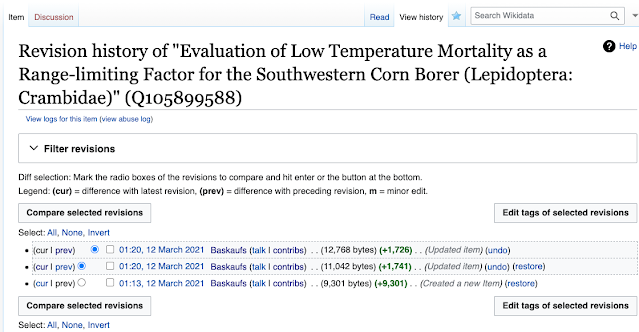

When VanderBot processes a CSV, it first writes any new items. It then runs a second check on the spreadsheet to find any items where statements already exist (indicated by the presence of a value in the _uuid column for that property), but where references have NOT been written (indicated by the absence of a _ref1_hash value). Scrolling through the log file, I saw that there was "no data to write" for any of the statements. In the "Writing references of existing claims" section (screenshot above), I saw the seven new references I created for Q44943965.

Checking the item page again, I see that all of the statements now have references!

This is more labor-intensive than making the label changes that I demonstrated in the previous example, but if all of the items in a spreadsheet were derived from the same source, then copying and pasting all the way down the _ref1_referenceURL and _ref1_retrieved_val columns would be really fast. In this case, it was not particularly fast, since I had to look up the DOI, then copy and paste the URL and date manually for each different item. However, since DOI data from CrossRef are machine-readable (via their API, see https://github.com/CrossRef/rest-api-doc), it won't be that hard to script the lookup in Python and have the script add all of the references to the CSV. I may write a post showing how to do that sometime in the future.

Conclusion

The script that downloads existing data from Wikidata into a CSV (acquire_wikidata_metadata.py) makes it possible to use the VanderBot API-writing script to improve certain kinds of information about items by simply copying multiple cells and pasting them elsewhere in the spreadsheet. Since CSVs are easily read and written by scripts, it is also possible to automate the addition of some kinds of data about existing items to the CSV (and eventually to Wikidata) by scripting.

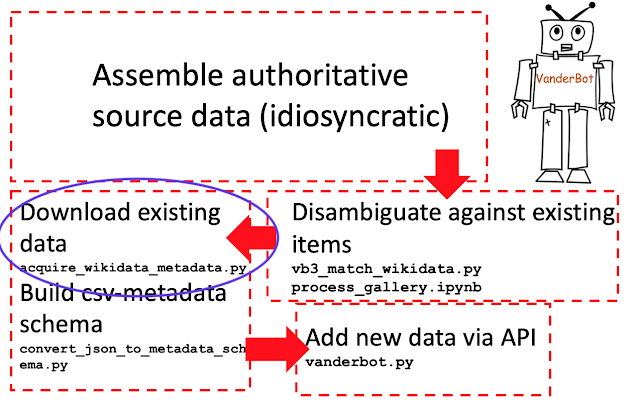

In future posts, I will show how to accomplish some of the more difficult aspects of managing your own data in Wikidata using spreadsheets, including automated data acquisition, disambiguation, and monitoring for changes over time.