...

Grandpa Clayton said heartily, "Why, that's excellent for a start. This is your first one, isn't it?"

"Yes," Andy said uncomfortably. "I meant him to be a man, but he turned out like this."

"Well, that's all right," Grandpa Clayton said. "It takes all sorts of people to make a world, and I expect it's the same with robots."

Carol Ryrie Brink (1966) Andy Buckram's Tin Men.

What does a semantic client "know"?

In the first post of this series, I raised the question "What does it actually mean to say that we can 'learn' something using RDF?" This is a rather vague question for two reasons: what did I mean by "learn" and what did I mean by "RDF"? In this post I want to flesh out this question.

Last October I forced myself to read the RDF Semantics document *. It was somewhat painful, but much of what I found written in technical language supported the ideas about RDF that I'd absorbed through osmosis over the past several years. Section 6 very succinctly addresses the question of what a semantic client can know:

Given a set of RDF graphs, there are various ways in which one can 'add' information to it. Any of the graphs may have some triples added to it; the set of graphs may be extended by extra graphs; or the vocabulary of the graph may be interpreted relative to a stronger notion of vocabulary entailment, i.e. with a larger set of semantic conditions understood to be imposed on the interpretations. All of these can be thought of as an addition of information, and may make more entailments hold than held before the change. All of these additions are monotonic, in the sense that entailments which hold before the addition of information, also hold after it.

In the previous post, I noted that the basic "fact" unit in RDF is a triple. A graph is a set of triples, so a graph essentially represents a set of known facts. So the technical answer to the question "what does a semantic client know?" is: "the triples that comprise the graph it has assembled". This is a little anticlimactic if we were hoping that sentience was somehow going to arise spontaneously in our "intelligent agent", but I'm afraid that is about all that there is. If we accept that the RDF graph assembled by a client is what it "knows", then Section 6 answers the question "how does a semantic client learn?" If learning is the acquisition of knowledge, then "learning" for a semantic client is the addition of triples to its graph.
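To make the "graph is a set of triples" idea concrete, here is a minimal Python sketch. It assumes a graph can be modeled as a plain set of (subject, predicate, object) string tuples; the `ex:` terms and the `learn` function are hypothetical names invented for illustration, not part of any RDF library.

```python
# A graph is modeled as a set of (subject, predicate, object) tuples.
# All "ex:" terms are made-up illustration names.
Triple = tuple[str, str, str]


def learn(graph: set[Triple], new_triples: set[Triple]) -> set[Triple]:
    """Return a graph that contains everything known before plus new_triples."""
    return graph | new_triples


known = {("ex:andy", "ex:built", "ex:tinMan")}
incoming = {
    ("ex:tinMan", "ex:madeOf", "ex:tin"),
    ("ex:andy", "ex:built", "ex:tinMan"),  # duplicate of a known triple
}

after = learn(known, incoming)

# Monotonic: every triple known before the addition is still known after it.
assert known <= after
print(len(after))  # 2, because a set keeps only one copy of the duplicate
```

Because the operation is a set union, adding triples can never remove something the client already "knew", which is the monotonicity that Section 6 describes.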

"Learning" by the addition of triples

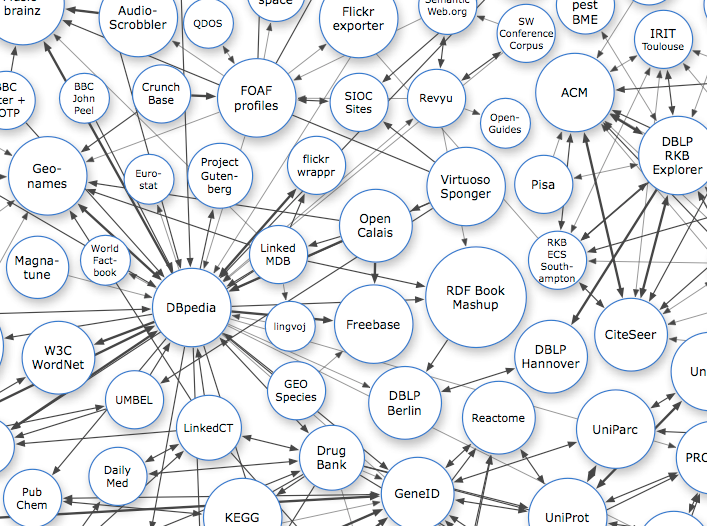

Linking Open Data (LOD) Project Cloud Diagram from http://linkeddata.org/ CC BY-SA

The most straightforward way for a semantic client to "learn" is to simply add triples to those already present in its graph. There are a number of ways this could happen. The human managing the client might feed triples directly into it from an in-house institutional database. A graph might be loaded in bulk from another data provider. Or the client might "follow its nose" and discover triples on its own by traversing the Semantic Web as envisioned by Tim Berners-Lee. In the Linked Data model, resources are identified by HTTP URIs which can be dereferenced to acquire an RDF document describing the resource.
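The "follow your nose" idea can be sketched in a few lines of Python. This is only a toy: the `FAKE_WEB` dictionary and the `dereference` function are hypothetical stand-ins for real HTTP requests and RDF parsing, and the `http://example.org/` URIs are invented.

```python
from collections import deque

# Stand-in for the Web: maps a URI to the triples its (imaginary) RDF document holds.
FAKE_WEB = {
    "http://example.org/a": {
        ("http://example.org/a", "http://example.org/knows", "http://example.org/b"),
    },
    "http://example.org/b": {
        ("http://example.org/b", "http://example.org/knows", "http://example.org/c"),
    },
}


def dereference(uri):
    """Hypothetical lookup; a real client would issue an HTTP GET and parse the RDF."""
    return FAKE_WEB.get(uri, set())


def follow_your_nose(start, max_documents=10):
    """Collect triples by dereferencing every URI seen as a subject or object."""
    graph, seen, queue = set(), set(), deque([start])
    while queue and len(seen) < max_documents:
        uri = queue.popleft()
        if uri in seen:
            continue
        seen.add(uri)
        for s, p, o in dereference(uri):
            graph.add((s, p, o))
            for term in (s, o):
                if term.startswith("http") and term not in seen:
                    queue.append(term)
    return graph


graph = follow_your_nose("http://example.org/a")
print(len(graph))  # 2
```

The `max_documents` cap is a design choice of this sketch: without some limit, a client turned loose on real Linked Data would keep crawling for as long as new URIs turned up.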

"Learning through Discovery" is an exciting prospect for our precocious intelligent agent, and it would be tempting to turn it loose on the Internet to collect as many triples as possible. However, there is also a dark side to this kind of learning. RDF assumes that "Anyone can say Anything about Anything". In the language of the RDF Concepts document:

To facilitate operation at Internet scale, RDF is an open-world framework that allows anyone to make statements about any resource.

In general, it is not assumed that complete information about any resource is available. RDF does not prevent anyone from making assertions that are nonsensical or inconsistent with other statements, or the world as people see it. Designers of applications that use RDF should be aware of this and may design their applications to tolerate incomplete or inconsistent sources of information.

So it is possible that our client will discover triples that are correct and useful (awesome!). However, it is also possible that it will discover triples that are incorrect due to carelessness, ignorance, or outright nefariousness. Another possibility that should be considered is that the client will encounter triples that are correct, but useless (a possibility that I'd like to explore further in a future post). One would like to believe that "bad triples" wouldn't be introduced into the Semantic Web out of malice, but given the existence of computer viruses and spam, it would probably be naive to think that. The likelihood that our client may discover "bad" triples introduces a social dimension to the problem of how it "learns": how do we program the client to know which data sources to trust?
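One very simple (and admittedly crude) answer to the trust question is an allowlist of sources. The sketch below assumes each incoming triple arrives paired with the URI of the document it came from; the source URIs, the `ex:` terms, and the `ingest` function are all hypothetical.

```python
# Hypothetical allowlist of data providers the client's owner has decided to trust.
TRUSTED_SOURCES = frozenset({"http://example.org/museum"})


def ingest(graph, statements, trusted=TRUSTED_SOURCES):
    """Add triples from trusted sources to graph; return the ones that were rejected."""
    rejected = []
    for source, triple in statements:
        if source in trusted:
            graph.add(triple)
        else:
            rejected.append((source, triple))
    return rejected


graph = set()
statements = [
    ("http://example.org/museum", ("ex:painting1", "ex:creator", "ex:vanGogh")),
    ("http://example.org/unknown", ("ex:painting1", "ex:creator", "ex:mobyDick")),
]
rejected = ingest(graph, statements)
print(len(graph), len(rejected))  # 1 1
```

An allowlist only moves the problem to whoever maintains the list, but it shows where in the client a trust decision has to be made: before a triple ever reaches the graph.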

"Learning" through entailment rules

The quote from Section 6 of the RDF Semantics document describes the following way of adding information to an RDF graph: "...the vocabulary of the graph may be interpreted relative to a stronger notion of vocabulary entailment, i.e. with a larger set of semantic conditions understood to be imposed on the interpretations." For example, generic RDF can be extended by the rdfs-interpretation, which satisfies additional semantic conditions, such as:

If <x,y> is in IEXT(I(rdfs:range)) and <u,v> is in IEXT(x) then v is in ICEXT(y)

The semantic conditions then establish various entailment rules. The OWL 2 Primer defines entailment as follows: "a set of statements A entails a statement a if in any state of affairs wherein all statements from A are true, also a is true."

If you are finding your head spinning by this point, an illustration may help. The semantic condition above leads to the following entailment rule:

Rule rdfs3:

If graph E contains {aaa rdfs:range XXX. uuu aaa vvv.} then add {vvv rdf:type XXX.}

A client that is programmed to extend RDF to the rdfs-interpretation can use this rule to generate an inferred triple that has never been explicitly stated.
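Rule rdfs3 is mechanical enough to write down directly. The following is a minimal sketch, assuming triples are plain string tuples and ignoring any special handling of literals; `apply_rdfs3` is a name I made up, and the graph uses the placeholder terms from the rule statement itself.

```python
def apply_rdfs3(graph):
    """Return the triples entailed by rule rdfs3 that are not already in graph."""
    # Collect every (property, class) pair stated with rdfs:range.
    ranges = [(s, o) for s, p, o in graph if p == "rdfs:range"]
    inferred = set()
    for prop, cls in ranges:
        for s, p, o in graph:
            if p == prop:
                # The object of any triple using prop is an instance of cls.
                inferred.add((o, "rdf:type", cls))
    return inferred - graph


E = {
    ("aaa", "rdfs:range", "XXX"),
    ("uuu", "aaa", "vvv"),
}
new_triples = apply_rdfs3(E)
print(new_triples)  # {('vvv', 'rdf:type', 'XXX')}
```

Note that the function only returns the entailed triples. Whether to add them to the graph is a separate decision left to the client.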

Happy example!!!

My busybody semantic client has surfed the Semantic Web and discovered the FOAF vocabulary. My imaginary programming prowess has enabled the client to sort through the RDFa there and "learn" (add to its graph) the triple:

foaf:depiction rdfs:range foaf:Image.

(Turtle serialization, with foaf: and rdfs: representing their conventional namespaces). After some additional surfing, my client also discovers the following triple:

<http://viaf.org/viaf/9854560>

foaf:depiction <http://commons.wikimedia.org/wiki/File:Van_Gogh_Age_19.jpg>.

It can then use Rule rdfs3 to infer that the two triples it has discovered entail a third triple:

<http://commons.wikimedia.org/wiki/File:Van_Gogh_Age_19.jpg> rdf:type foaf:Image.

My semantic client is so smart! It has "learned" (i.e. added a triple to its graph=added information=learned) that the resource identified by the IRI

http://commons.wikimedia.org/wiki/File:Van_Gogh_Age_19.jpg

is an image!!! This is just what I was hoping for and I am beginning to feel like I'm on the way to creating an "intelligent agent".

Sad example :-( :-( :-(

Flushed with enthusiasm and its first victory, my client discovers the following triple:

<urn:lsid:ubio.org:namebank:111731>

foaf:depiction <http://dbpedia.org/resource/Moby-Dick>.

I'm a bit unsure about this one. I know that the subject IRI identifies the scientific name for sperm whale. I also know that the object IRI identifies the book Moby Dick. The triple seems reasonable because the book Moby Dick does depict a sperm whale (sort of). So I let my client have a go at learning again. It applies entailment Rule rdfs3 and infers this triple:

<http://dbpedia.org/resource/Moby-Dick> rdf:type foaf:Image.

I am no longer feeling so good about how things are going with my intelligent agent. It has just "learned" that the book Moby Dick is an image. What has gone wrong?

What went wrong in the sad example?

The short answer to this question is: Nothing. My semantic client has made no mistakes - it correctly inferred a triple that was entailed once I accepted the RDFS interpretation of RDF. It now "knows" that the book Moby Dick is an image.
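Running the happy and sad examples through the same rule makes the point visible: the rule has no notion of "reasonable". This sketch assumes triples are string tuples with prefixed names left unexpanded; the function name `rdfs3` and the constant names are mine.

```python
RANGE = ("foaf:depiction", "rdfs:range", "foaf:Image")
VAN_GOGH = (
    "<http://viaf.org/viaf/9854560>",
    "foaf:depiction",
    "<http://commons.wikimedia.org/wiki/File:Van_Gogh_Age_19.jpg>",
)
WHALE = (
    "<urn:lsid:ubio.org:namebank:111731>",
    "foaf:depiction",
    "<http://dbpedia.org/resource/Moby-Dick>",
)


def rdfs3(graph):
    """Triples entailed by rule rdfs3 that are not already in graph."""
    return {
        (o, "rdf:type", cls)
        for (prop, r, cls) in graph if r == "rdfs:range"
        for (s, p, o) in graph if p == prop
    } - graph


inferred = rdfs3({RANGE, VAN_GOGH, WHALE})
for triple in sorted(inferred):
    print(triple)
```

Both the photograph and the novel come out typed as `foaf:Image`. The code treats the two depiction triples identically, because nothing in the rule distinguishes them.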

One could claim that the problem was with the triple:

<urn:lsid:ubio.org:namebank:111731>

foaf:depiction <http://dbpedia.org/resource/Moby-Dick>.

However, in RDF Anyone can say Anything about Anything. It is possible that the creator of that triple was careless and did not realize that use of foaf:depiction entailed that the object of the triple was an image. However, it is also possible that the creator of that triple DID understand the implications of using foaf:depiction and intended to extend the concept of "image" to include things that could be figuratively considered "images" (like books). Without knowing more about the provider of the triple and what the provider understood the FOAF vocabulary to mean, we cannot know if the usage was intentional or a mistake. As in the case of "learning through addition of triples", the dimension of trust is important here as well. We must be able to trust that the providers of triples we ingest are both honest in the information they expose and competent to use vocabulary terms in a manner consistent with their intended meaning.

Horrifying example

If we wanted to create the most powerful "intelligent agent" possible, then we might consider allowing our client to conduct reasoning based on the strongest possible vocabulary entailments. Returning one more time to Section 6 of the RDF Semantics document, this would result in a larger set of semantic conditions understood to be imposed on the interpretations, which can be thought of as an addition of information. Our client can "learn even more". The Web Ontology Language (OWL) provides terms loaded with powerful semantics (owl-interpretation) that can allow clients to infer triples in many useful ways. However, whenever an interpretation has stronger entailment, there are also more ways to create inconsistencies and unintended consequences.

If you have gotten this far in the post, let me suggest some reading that was very helpful to my thinking about these issues and which was sometimes entertaining (if you have a warped sense of entertainment, as I do):

Aidan Hogan, Andreas Harth and Axel Polleres. Scalable Authoritative OWL Reasoning for the Web. International Journal on Semantic Web and Information Systems, 5(2), pages 49-90, April-June 2009. http://www.deri.ie/fileadmin/documents/DERI-TR-2009-04-21.pdf

Hogan et al. (2009) provide an interesting example of four triples containing terms from the RDFS and OWL vocabularies:

rdfs:subClassOf rdfs:subPropertyOf rdfs:Resource.

rdfs:subClassOf rdfs:subPropertyOf rdfs:subPropertyOf.

rdf:type rdfs:subPropertyOf rdfs:subClassOf.

rdfs:subClassOf rdf:type owl:SymmetricProperty.

If we allow our client to ingest these triples and then conduct naive reasoning based on rdfs- and owl-interpretations of RDF, the client will infer every possible combination of every unique IRI in its graph. A relatively small graph containing a thousand triples would result in the client inferring 1.6x10^8 meaningless triples. These four triples would effectively serve as a loaded bomb for a client that had no discretion about the source of its triples and the kinds of reasoning it conducted.
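To get a feel for how this happens, here is a small forward-chaining sketch in Python. It is an assumption-laden toy: it implements only a handful of rules (rdfs5, rdfs7, rdfs9, rdfs11 and a symmetric-property rule) over string tuples, so it shows the four triples starting to feed on themselves rather than reproducing the full blow-up that Hogan et al. describe. The names `BOMB`, `step` and `closure` are mine.

```python
SC, SP, T = "rdfs:subClassOf", "rdfs:subPropertyOf", "rdf:type"
SYM = "owl:SymmetricProperty"

BOMB = {
    (SC, SP, "rdfs:Resource"),
    (SC, SP, SP),
    (T, SP, SC),
    (SC, T, SYM),
}


def step(g):
    """Apply each rule once over g and return only the newly entailed triples."""
    new = set()
    for s, p, o in g:
        if p == SP:
            # rdfs7: any triple using property s also holds for super-property o.
            new |= {(a, o, b) for a, q, b in g if q == s}
            # rdfs5: rdfs:subPropertyOf is transitive.
            new |= {(s, SP, c) for b, q, c in g if q == SP and b == o}
        if p == SC:
            # rdfs11: rdfs:subClassOf is transitive.
            new |= {(s, SC, c) for b, q, c in g if q == SC and b == o}
            # rdfs9: instances of subclass s are also instances of o.
            new |= {(x, T, o) for x, q, c in g if q == T and c == s}
        if p == T and o == SYM:
            # Symmetric property: every triple using s also holds reversed.
            new |= {(b, s, a) for a, q, b in g if q == s}
    return new - g


def closure(g):
    """Repeat step() until no new triples appear (a naive fixed point)."""
    g = set(g)
    while True:
        new = step(g)
        if not new:
            return g
        g |= new


result = closure(BOMB)
print(len(BOMB), "triples in,", len(result), "triples out")
```

Even in this reduced setting the client ends up asserting things like `rdfs:subClassOf rdfs:subClassOf owl:SymmetricProperty`, which nobody stated and nobody wants. The loop is guaranteed to stop only because the set of terms is finite.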

Hogan et al. (2009) also discuss the idea of "ontology hijacking" where statements are made about classes or properties in such a way that reasoning on those classes or properties is affected in a harmful or inflationary way. In their SAOR reasoner, they introduced sets of rules that determined the types of reasoning that their client would be allowed to conduct and the types of triples on which the reasoning could be conducted.

In this section, I am not saying that it is bad to use interpretations with stronger entailment. Rather, I hope I have made the point that the programmer of a client must make careful decisions about the conditions under which the client would be allowed to generate entailed triples and incorporate them with other "facts" that it "knows" (i.e. into its graph).
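As one illustration of such a decision, the sketch below screens incoming triples before any reasoning happens. It is a blunt simplification of my own and not the rule set SAOR actually uses: it simply quarantines any third-party triple whose subject is a term from the core `rdf:`, `rdfs:` or `owl:` vocabularies. The function names are hypothetical.

```python
# Prefixes of the core vocabularies that third parties should not redefine.
CORE_PREFIXES = ("rdf:", "rdfs:", "owl:")


def redefines_core_vocabulary(triple):
    """True if the triple makes a statement about a core vocabulary term."""
    subject = triple[0]
    return subject.startswith(CORE_PREFIXES)


def screen(incoming):
    """Split incoming triples into those accepted for reasoning and those set aside."""
    accepted = {t for t in incoming if not redefines_core_vocabulary(t)}
    quarantined = set(incoming) - accepted
    return accepted, quarantined


incoming = {
    ("rdfs:subClassOf", "rdfs:subPropertyOf", "rdfs:Resource"),
    ("rdfs:subClassOf", "rdfs:subPropertyOf", "rdfs:subPropertyOf"),
    ("rdf:type", "rdfs:subPropertyOf", "rdfs:subClassOf"),
    ("rdfs:subClassOf", "rdf:type", "owl:SymmetricProperty"),
    ("foaf:depiction", "rdfs:range", "foaf:Image"),
}
accepted, quarantined = screen(incoming)
print(len(accepted), len(quarantined))  # 1 4
```

All four triples from the horrifying example are set aside because each makes a claim about an `rdf:` or `rdfs:` term, while the FOAF range statement passes through. A real client would need something subtler, since the documents that define those vocabularies legitimately make such statements.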

Summary

"RDF" can be interpreted under different vocabulary interpretations, ranging from fewer semantic conditions and weaker entailment, to more semantic conditions and stronger entailment. Information can be gained by a client if it ingests more triples, or if it conducts inferencing based on stronger entailment.

- Entailment rules do NOT enforce conditions.

- Entailment rules imply that other unstated triples exist.

- Inferred triples are true to the extent that the statements which entail them are also true. This introduces a requirement for an element of trust.

- A client is not required to apply all possible entailment rules.

- A client is not required to apply rules to any particular set of triples.

---------

* In February, the RDF 1.1 Semantics document superseded the RDF (1.0) Semantics document. It broadens the earlier document in a number of ways such as supporting IRIs and clarifying the types of literals, but otherwise most of what is stated in the 1.0 document remains true.