Background

Last October, I wrote a post called Guid-O-Matic Goes to China. That post described an application I wrote in XQuery to generate RDF in various serializations from simple CSV files. Those of you who know me from TDWG are probably shaking your heads and wondering, "Why in the world is he using XQuery to do this? Who uses XQuery?" The answer to the second question is "Digital Humanists." There is an active Digital Humanities effort at Vanderbilt, and Vanderbilt recently received funding from the Andrew W. Mellon Foundation to open a Center for Digital Humanities. I've enjoyed hanging out with the digital humanists, and they form a significant component of our Semantic Web Working Group. Digital humanists also form a significant component of the XQuery Working Group at Vanderbilt. Last year I attended that group for most of the year, and that was how I learned enough XQuery to write the application.

That brings me to the first question (Why is he using XQuery?). In my first post on Guid-O-Matic, I mentioned that one reason I wanted to write the application was that BaseX (a freely available XML database and XQuery processor) includes a web application component that allows XQuery modules to run as a RESTXQ web service. After I wrote Guid-O-Matic, I played around with BaseX RESTXQ in an attempt to build a web service that would support content negotiation as required by Linked Data best practices. However, the BaseX RESTXQ module had a bug that prevented using it to perform content negotiation as described in its documentation. For a while I hoped that the bug would get fixed, but it became clear that content negotiation was not used frequently enough for the developers to take the time to fix it. In December, I sat down with Cliff Anderson, Vanderbilt's XQuery guru, and he helped me come up with a strategy for working around the bug. Until recently I was too busy to pick up the project again, but last week I was finally able to finish writing the functions in the module that runs the web server.

How does it work?

Here is the big picture of how the Guid-O-Matic web service works:

A web-based client (a browser or a Linked Data client) uses HTTP to communicate with the BaseX web service. The web service is an XQuery module whose functions process the URIs sent from the client in HTTP GET requests. It uses the Accept: header to decide which serialization the client wants, then uses a 303 redirect to tell the client which specific URI to use to request a representation in that serialization. The client then sends a GET request for that specific representation. The web service calls Guid-O-Matic XQuery functions that use data from the XML database to build the requested document. Depending on the representation-specific URI, it serializes the RDF as RDF/XML, Turtle, or JSON-LD. (Currently there is only a stub for generating HTML, since the human-readable representation would be idiosyncratic to each installation.)

In the previously described versions of Guid-O-Matic, the data were retrieved directly from CSV files. In this version, CSV files are still used, but a separate script converts them into XML files. Those XML files are then loaded into BaseX's built-in XML database, which is the actual data source for the functions called by the web service. In theory, one could build and maintain the XML files independently without constructing them from CSVs. One could also generate the CSV files from some other source, as long as they were in the form that Guid-O-Matic understands.
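The CSV-to-XML conversion is done by a separate Guid-O-Matic XQuery script (load-database.xq), and its actual output format isn't shown in this post. Purely to illustrate the idea, here is a minimal Python sketch that turns CSV rows into XML records. The `records`/`record` element names and the `id`/`label` columns are hypothetical, and the sketch assumes every column header is a valid XML element name.

```python
import csv
import io
import xml.etree.ElementTree as ET


def csv_to_xml(csv_text: str) -> str:
    """Convert CSV text to a simple XML document (hypothetical layout).

    Each CSV row becomes a <record> element, and each column becomes a child
    element named after the column header. This is not Guid-O-Matic's format.
    """
    root = ET.Element("records")
    for row in csv.DictReader(io.StringIO(csv_text)):
        record = ET.SubElement(root, "record")
        for column, value in row.items():
            child = ET.SubElement(record, column)
            child.text = value
    return ET.tostring(root, encoding="unicode")


print(csv_to_xml("id,label\nLingyansi,Lingyan Temple\n"))
# <records><record><id>Lingyansi</id><label>Lingyan Temple</label></record></records>
```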

Trying it out

You can try the system out for yourself by following these steps.

- Download and install BaseX. BaseX is available for download at http://basex.org/. It's free and platform independent. I won't go into the installation details because it's a pretty easy install.

- Clone the Guid-O-Matic GitHub repo. It's available at https://github.com/baskaufs/guid-o-matic.

- Load the XML files into a BaseX database. The easiest way to do this is probably to run the BaseX GUI. On Windows, just double-click on the icon on the desktop. From the Database menu, select "New..." Browse to the place on your hard drive where you cloned the Guid-O-Matic repo, then Open the "xml-for-database" folder. Name the database "tang-song" (it includes the data described in the Guid-O-Matic Goes to China post). Select the "Parse files in archives" option. I think the rest of the options can be left at their defaults. Click OK. You can close the BaseX GUI.

- Copy the RESTXQ module into the webapp directory of BaseX. This step requires you to know where BaseX was installed on your hard drive. Within the BaseX installation folder, there should be a subfolder called "webapp". Within this folder, there should be a file with the extension ".xqm", probably named something like "restxq.xqm". For the web app to work, you need to either delete this file or change its extension from ".xqm" to something else, like ".bak", if you think you might want to look at it in the future. Within the cloned Guid-O-Matic repo, find the file "restxq-db.xqm" and copy it to the webapp folder. This file contains the script that runs the server. You can open it in the BaseX GUI or any text editor if you want to try hacking it.

- Start the server. Open a command prompt/command window. On my Windows computer, I can just type basexhttp.bat to launch the batch file that starts the server. (I don't think that I had to add the BaseX/bin/ folder to my path statement, but if you get a File Not Found error, you might have to navigate to that directory first to get the batch file to run.) For non-Windows computers there should be another script named basexhttp that you can run by an appropriate method for your OS. See http://docs.basex.org/wiki/Startup for details. When you are ready to shut down the server, you can do it gracefully from the command prompt by pressing Ctrl-C. By default, the server runs on port 8984 and that's what we will use in the test examples. If you actually want to run this as a real web server, you'll probably have to change it to a different port (like port 80). See the BaseX documentation for more on this.

- Send an HTTP GET request to the server. There are a number of ways to cause client software to interact with the server (lots more on this later). The easiest way is to open any web browser and enter http://localhost:8984/Lingyansi in the URL box. If the server is working, it should redirect to the URL http://localhost:8984/Lingyansi.htm and display a placeholder web page.

If you have successfully gotten the placeholder web page to display, you can carry out the additional tests that I'll describe in the following sections.

(Image from the W3C Interest Group Note https://www.w3.org/TR/cooluris/ © 2008 W3C)

What's going on?

The goal of the Guid-O-Matic web service is to implement content negotiation in a manner consistent with the Linked Data practice described in section 4.3 of the W3C Cool URIs for the Semantic Web document. The purpose of this best practice is to allow users to discover information about things that are denoted by URIs but are not documents that can be delivered via the Web. For example, we could use the URI http://lod.vanderbilt.edu/historyart/site/Lingyansi to denote the Lingyan Temple in China. If we put that URI into a web browser, it is not realistic to expect the Internet to deliver the Lingyan Temple to our desktop. According to the convention established in the resolution of the httpRange-14 question, when a client makes an HTTP GET request to dereference the URI of a non-information resource (like a temple), an appropriate response from the server is an HTTP 303 (See Other) response code that redirects the client to another URI denoting an information resource (i.e. a document) about the non-information resource. The client can indicate the desired kind of document by sending an HTTP Accept: header with the media type it would prefer. So when a client makes a GET request for http://lod.vanderbilt.edu/historyart/site/Lingyansi with the request header Accept: text/html, it is appropriate for the server to respond with a 303 redirect to the URI http://lod.vanderbilt.edu/historyart/site/Lingyansi.htm, which denotes a document (web page) about the Lingyan Temple.

There is no particular convention about the form of the URIs used to represent non-information and information resources, although it is considered poor practice to include file extensions in the URIs of non-information resources. You can see one pattern in the diagram above. Guid-O-Matic uses the following convention. If a URI is extensionless, it is assumed to represent a non-information resource. Each non-information resource in the database can be described by a number of representations, i.e., documents with differing media types. The URIs denoting those documents are formed by appending a file extension to the base URI of the non-information resource, using the extension that is standard for that media type. Many other patterns are possible, but using a different pattern would require different programming from what is shown in this post.
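Here is a minimal Python sketch of that convention. It assumes the local name is split at its first period, which is what `substring-before` and `substring-after` do in the XQuery handler; the function names are hypothetical.

```python
from typing import Optional, Tuple


def split_local_name(local_name: str) -> Tuple[str, Optional[str]]:
    """Split a local name into (identifier, extension).

    An extensionless local name (extension None) names a non-information
    resource; a local name with an extension names a document about it.
    """
    identifier, dot, extension = local_name.partition(".")
    return (identifier, extension) if dot else (identifier, None)


def document_uri(resource_uri: str, extension: str) -> str:
    """Form the URI of a representation by appending a file extension."""
    return f"{resource_uri}.{extension}"


print(split_local_name("Lingyansi"))      # ('Lingyansi', None)
print(split_local_name("Lingyansi.ttl"))  # ('Lingyansi', 'ttl')
print(document_uri("http://lod.vanderbilt.edu/historyart/site/Lingyansi", "ttl"))
# http://lod.vanderbilt.edu/historyart/site/Lingyansi.ttl
```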

The following media types are currently supported by Guid-O-Matic and can be requested from the web service:

| Extension | Media type |
|-----------|------------|
| .ttl | text/turtle |
| .rdf | application/rdf+xml |
| .json | application/ld+json or application/json |
| .htm | text/html |

The first three extensions correspond to serializations of RDF and would be requested by Linked Data clients (machines), while the fourth (.htm) is a human-readable representation that would typically be requested by a web browser. As the web service is currently programmed, requesting any media type other than the five listed above results in redirection to the URI for the HTML file.
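The post doesn't show the page:determine-extension function, so the following is only a hypothetical Python sketch of how the table above might be applied to an Accept: header. It assumes the first recognized media type in the header wins, ignores q-values, and falls back to the HTML page for anything unrecognized, as described.

```python
# Recognized Accept: media types mapped to the extension to redirect to.
# Both JSON media types map to .json, as in the table above.
ACCEPT_TO_EXTENSION = {
    "text/turtle": "ttl",
    "application/rdf+xml": "rdf",
    "application/ld+json": "json",
    "application/json": "json",
    "text/html": "htm",
}


def determine_extension(accept_header: str) -> str:
    """Pick a file extension from an Accept: header (sketch, not the real code)."""
    for item in accept_header.split(","):
        media_type = item.split(";")[0].strip().lower()  # drop parameters like q=0.9
        if media_type in ACCEPT_TO_EXTENSION:
            return ACCEPT_TO_EXTENSION[media_type]
    return "htm"  # anything unrecognized gets the HTML page


print(determine_extension("text/turtle"))       # ttl
print(determine_extension("application/json"))  # json
print(determine_extension("image/png"))         # htm
```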

There are currently two hacks in the web service code that recognize two special URIs. If the part of the URI after the domain name ends in "/header", the web server simply echoes back to the client the Accept: header that was sent as part of the GET request. You can try this by putting http://localhost:8984/header in the URL box of your browser. For Chrome, here's the response I got:

text/html application/xhtml+xml application/xml;q=0.9 image/webp */*;q=0.8

As you can see, the value of the Accept: request header generated by the browser is more complicated than the simple "text/html" that you might expect [1].
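Restoring the commas mentioned in the note, the header above reads `text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8`. Each comma-separated entry is a media type, optionally with a `q` parameter giving its relative preference (1.0 when omitted). A minimal Python sketch of parsing it follows; `parse_accept` is a hypothetical helper, not part of Guid-O-Matic, and treating a malformed q-value as 0 is my own choice.

```python
def parse_accept(header: str):
    """Parse an Accept: header into (media_type, q) pairs, most preferred first.

    Entries are separated by commas. An optional ";q=..." parameter sets the
    relative preference (default 1.0); other parameters are ignored here.
    """
    entries = []
    for position, item in enumerate(header.split(",")):
        parts = [part.strip() for part in item.split(";")]
        media_type = parts[0].lower()
        if not media_type:
            continue  # skip empty entries, e.g. from an empty header
        q = 1.0
        for param in parts[1:]:
            name, _, value = param.partition("=")
            if name.strip().lower() == "q":
                try:
                    q = float(value)
                except ValueError:
                    q = 0.0  # treat a malformed q-value as "not acceptable"
        entries.append((media_type, q, position))
    # Highest q first; equal q-values keep the order the client listed them in.
    entries.sort(key=lambda entry: (-entry[1], entry[2]))
    return [(media_type, q) for media_type, q, _ in entries]


chrome = "text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8"
for media_type, q in parse_accept(chrome):
    print(media_type, q)
```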

In production, one would probably want to delete the handler for this URI, since it's possible that one might have a resource in the database whose local name is "header" (although probably not for Chinese temples!). Alternatively, one could change the URI pattern to something like /utility/header that wouldn't collide with the pattern used for resources in the database.

The other hack is to allow users to request an RDF dump of the entire dataset. A dump is requested using a URI ending in /dump along with a request header for one of the RDF media types. If the header contains "text/html" (a browser), Turtle is returned. Otherwise, the dump is in the requested media type. The Chinese temple dataset is small enough that it is reasonable to request a dump of the entire dataset, but for larger datasets where a dump might tie up the server, it might be desirable to delete or comment out the code for this URI pattern.
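The dump rule reduces to a small decision. This Python sketch assumes a plain substring test for text/html, as the description suggests, plus a Turtle fallback for unrecognized media types, which the post doesn't specify. `dump_media_type` is a hypothetical name.

```python
# RDF media types a dump can be requested in (from the media-type table).
RDF_MEDIA_TYPES = ("text/turtle", "application/rdf+xml", "application/ld+json", "application/json")


def dump_media_type(accept_header: str) -> str:
    """Choose the serialization for /dump (sketch of the rule described above)."""
    if "text/html" in accept_header:  # a browser: send Turtle
        return "text/turtle"
    for item in accept_header.split(","):
        media_type = item.split(";")[0].strip().lower()
        if media_type in RDF_MEDIA_TYPES:
            return media_type
    return "text/turtle"  # assumption: unrecognized types also get Turtle


print(dump_media_type("text/html,application/xhtml+xml"))  # text/turtle
print(dump_media_type("application/rdf+xml"))              # application/rdf+xml
```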

Web server code

Here is the code for the main handler function:

```xquery
declare
  %rest:path("/{$full-local-id}")
  %rest:header-param("Accept","{$acceptHeader}")
function page:content-negotiation($acceptHeader,$full-local-id)
{
  if (contains($full-local-id,"."))
  then page:handle-repesentation($acceptHeader,$full-local-id)
  else page:see-also($acceptHeader,$full-local-id)
};
```

The %rest:path annotation performs the pattern matching on the requested URI. It matches any local name that follows a single slash, and assigns that local name to the variable $full-local-id. The %rest:header-param annotation assigns the value of the Accept: request header to the variable $acceptHeader. These two variables are passed into the page:content-negotiation function.

The function then chooses between two actions depending on whether the local name of the URI contains a period (".") or not. If it does, then the server knows that the client wants a document about the resource in a particular serialization (a representation) and it calls the page:handle-repesentation function to generate the document. If the local name doesn't contain a period, then the function calls the page:see-also function to generate the 303 redirect.
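To make the dispatch concrete, here is a Python sketch of how a request path might be routed, covering the catch-all template above plus the two special URIs described earlier. It assumes that the literal /header and /dump paths take priority over the /{$full-local-id} template and that the template matches a single path segment; the returned labels loosely follow the XQuery function names and are otherwise hypothetical.

```python
def route(path: str):
    """Return (handler, argument) for a request path (hypothetical sketch)."""
    local_name = path.lstrip("/")
    if not local_name or "/" in local_name:
        return ("not-found", None)  # the template matches one segment only
    if local_name in ("header", "dump"):
        return (local_name, None)  # special utility URIs
    if "." in local_name:  # a representation, e.g. Lingyansi.ttl
        return ("handle-representation", local_name)
    return ("see-also", local_name)  # a non-information resource, e.g. Lingyansi


print(route("/Lingyansi"))      # ('see-also', 'Lingyansi')
print(route("/Lingyansi.ttl"))  # ('handle-representation', 'Lingyansi.ttl')
```

Note that with this ordering a resource whose local name is literally "header" could never be reached, which is the collision mentioned above.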

Here's the function that generates the redirect:

```xquery
declare function page:see-also($acceptHeader,$full-local-id)
{
  if(serialize:find-db($full-local-id)) (: check whether the resource is in the database :)
  then
    let $extension := page:determine-extension($acceptHeader)
    return
      <rest:response>
        <http:response status="303">
          <http:header name="location" value="{ concat($full-local-id,".",$extension) }"/>
        </http:response>
      </rest:response>
  else
    page:not-found() (: respond with 404 if not in database :)
};
```

The page:see-also function first makes sure that metadata about the requested resource actually exist in the database by calling the serialize:find-db function, which is part of the Guid-O-Matic module. serialize:find-db returns boolean true if metadata about the identified resource exist. If it does not return true, page:see-also calls a function that generates a 404 "Not found" response code. Otherwise, it uses the requested media type to determine the file extension to append to the requested URI (the URI of the non-information resource). The function then generates an XML blob that signals to the server that it should send back to the client a 303 redirect to the newly constructed URI (the URI of the document about the requested resource).
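The same logic can be sketched in Python as a function returning a status and headers. Here a plain set of identifiers stands in for serialize:find-db, and the extension choice is a simplified, hypothetical version of page:determine-extension. Like the XQuery, the Location value is relative; a client resolves it against the request URI.

```python
from http import HTTPStatus
from urllib.parse import urljoin

# Recognized RDF media types -> extension, mirroring the media-type table.
ACCEPT_TO_EXTENSION = {
    "text/turtle": "ttl",
    "application/rdf+xml": "rdf",
    "application/ld+json": "json",
    "application/json": "json",
}


def see_also(accept_header, full_local_id, known_ids):
    """Return (status, headers) for an extensionless URI (sketch of page:see-also)."""
    if full_local_id not in known_ids:  # stands in for serialize:find-db
        return HTTPStatus.NOT_FOUND, {}
    requested = [item.split(";")[0].strip() for item in accept_header.split(",")]
    extension = next(
        (ACCEPT_TO_EXTENSION[t] for t in requested if t in ACCEPT_TO_EXTENSION), "htm"
    )
    return HTTPStatus.SEE_OTHER, {"Location": f"{full_local_id}.{extension}"}


status, headers = see_also("text/turtle", "Lingyansi", {"Lingyansi"})
print(int(status), headers)  # 303 {'Location': 'Lingyansi.ttl'}
print(urljoin("http://localhost:8984/Lingyansi", headers["Location"]))
# http://localhost:8984/Lingyansi.ttl
```

Because the Location is relative, the same code works unchanged whether the service is reached at localhost:8984 or under a deployed path such as /historyart/site/.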

Here's the function that initiates the generation of the document in a particular serialization about the resource:

```xquery
declare function page:handle-repesentation($acceptHeader,$full-local-id)
{
  let $local-id := substring-before($full-local-id,".")
  return
    if(serialize:find-db($local-id)) (: check whether the resource is in the database :)
    then
      let $extension := substring-after($full-local-id,".")
      (: When a specific file extension is requested, override the requested content type. :)
      let $response-media-type := page:determine-media-type($extension)
      let $flag := page:determine-type-flag($extension)
      return page:return-representation($response-media-type,$local-id,$flag)
    else
      page:not-found() (: respond with 404 if not in database :)
};
```

The function begins by parsing out the identifier part from the local name. It checks to make sure that metadata about the identified resource exist in the database - if not, it generates a 404. (It's necessary to do the check again in this function, because clients might request the document directly without going through the 303 redirect process.) If the metadata exist, the function parses out the extension part from the local name. The extension is used to determine the media type of the representation, which determines both the Content-Type: response header and a flag used to signal to Guid-O-Matic the desired serialization. In this function, the server ignores the media type value of the Accept: request header. Because the requested document has a particular media type, that type will be reported accurately regardless of what the client requests. This behavior is useful in the case where a human wants to use a browser to look at a document that's a serialization of RDF. If the Accept: header were respected, the human user would see only the web page about the resource rather than the desired RDF document. Finally, the necessary variables are passed to the page:return-representation function that handles the generation of the document.
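A Python sketch of the same idea makes the key point visible: the function never receives the Accept: header, so the extension alone decides the Content-Type. The flag values other than "html" are hypothetical (the post only shows "html"), returning application/ld+json for .json is an assumption, and so is answering 404 for an unknown extension.

```python
# extension -> (Content-Type to report, serialization flag)
EXTENSIONS = {
    "ttl": ("text/turtle", "turtle"),  # flag name is hypothetical
    "rdf": ("application/rdf+xml", "xml"),  # flag name is hypothetical
    "json": ("application/ld+json", "json"),  # assumption: JSON-LD type for .json
    "htm": ("text/html", "html"),
}


def handle_representation(full_local_id, known_ids):
    """Return (status, content_type, flag); the Accept: header is not consulted."""
    local_id, _, extension = full_local_id.partition(".")
    if local_id not in known_ids:  # stands in for serialize:find-db
        return 404, None, None
    if extension not in EXTENSIONS:  # assumption: unknown extensions are not served
        return 404, None, None
    content_type, flag = EXTENSIONS[extension]
    return 200, content_type, flag


print(handle_representation("Lingyansi.ttl", {"Lingyansi"}))  # (200, 'text/turtle', 'turtle')
```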

Here is the code for the page:return-representation function:

```xquery
declare function page:return-representation($response-media-type,$local-id,$flag)
{
  if(serialize:find-db($local-id))
  then (
    <rest:response>
      <output:serialization-parameters>
        <output:media-type value='{$response-media-type}'/>
      </output:serialization-parameters>
    </rest:response>,
    if ($flag = "html")
    then page:handle-html($local-id)
    else serialize:main-db($local-id,$flag,"single","false")
  )
  else page:not-found() (: XQuery 3.1 requires an else branch; respond with 404 :)
};
```

The function generates a sequence of two items. The first is an XML blob that signals to the server that it should generate a Content-Type: response header with a media type appropriate for the document that is being delivered. The second is the response body, which is generated by one of two functions. The page:handle-html function for generating the web page is a placeholder function, and in production there would be a call to a real function in a different module that used data from the XML database to generate appropriate content for the described resource. The serialize:main-db function is the core function of Guid-O-Matic that builds a document from the database in the serialization indicated by the $flag variable. The purpose of Guid-O-Matic was previously described in Guid-O-Matic Goes to China, so at this point the serialize:main-db function can be considered a black box. For those interested in the gory details of generating the serializations, look at the code in the serialize.xqm module in the Guid-O-Matic repo.

The entire restxq-db.xqm web service module can be viewed in the Guid-O-Matic GitHub repo.

Trying out the server

To try out the web server, you need to have a client installed on your computer that is capable of making HTTP requests with particular Accept: request headers. An application commonly used for this purpose is curl. I have to confess that I'm not enough of a computer geek to enjoy figuring out the proper command line options to make it work for me. Nevertheless, it's simple and free. If you have installed curl on your computer, you can use it to test the server. The basic curl command I'll use is

```shell
curl -v -H "Accept:text/turtle" http://localhost:8984/Lingyansi
```

The -v option makes curl verbose, so that it shows the headers it sends and receives. The -H option sends a request header; in the example, the media type for Turtle is requested. The last part of the command is the URI for the HTTP request, which is a GET request by default. In this example, the GET is made to a URI on the local web server running on port 8984 (where the BaseX server runs by default).

Here's what happens when the curl command is given to make a request to the web service application:

The lines starting with ">" show the communication to the server, and the lines starting with "<" show the communication coming from the server. You can see that the server has responded in the desired manner: the client requested /Lingyansi in Turtle serialization, and the server responded with a 303 redirect to Lingyansi.ttl. Following the server's redirection suggestion, I can issue the command

```shell
curl -v -H "Accept:text/turtle" http://localhost:8984/Lingyansi.ttl
```

and I get this response:

This time I get a response code of 200 (OK), and the document is sent as the body of the response. When GETting the Turtle file, the web server ignores the Accept: header, so it can be anything. Because of the .ttl file extension, the Content-Type: response header will always be text/turtle.
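If you'd rather script these checks than type curl commands, here is a Python sketch using only the standard library. So that it runs without BaseX, it starts a tiny hypothetical stand-in server that mimics the behavior described above (303 for extensionless URIs, 200 plus a Content-Type for representations); it is not the Guid-O-Matic service. `http.client` does not follow redirects, so, like curl without -L, it reports the 303 itself. To query the real BaseX server instead, call `get_once(8984, ...)` and skip starting the stand-in.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical stand-in for the BaseX service, for local experiments only.
ACCEPT_TO_EXTENSION = {"text/turtle": "ttl", "application/rdf+xml": "rdf"}
EXTENSION_TO_TYPE = {"ttl": "text/turtle", "rdf": "application/rdf+xml", "htm": "text/html"}


class StandIn(BaseHTTPRequestHandler):
    def do_GET(self):
        local_id, dot, extension = self.path.lstrip("/").partition(".")
        if not dot:  # extensionless: 303 redirect chosen from the Accept: header
            accept = self.headers.get("Accept", "")
            ext = next((e for t, e in ACCEPT_TO_EXTENSION.items() if t in accept), "htm")
            self.send_response(303)
            self.send_header("Location", f"{local_id}.{ext}")
            self.send_header("Content-Length", "0")
            self.end_headers()
        elif extension in EXTENSION_TO_TYPE:  # representation: Accept: is ignored
            body = b"placeholder body\n"
            self.send_response(200)
            self.send_header("Content-Type", EXTENSION_TO_TYPE[extension])
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            self.send_error(404)

    def log_message(self, *args):
        pass  # silence per-request logging


def get_once(port, path, accept):
    """Send one GET without following redirects, like curl without -L."""
    conn = http.client.HTTPConnection("127.0.0.1", port)
    conn.request("GET", path, headers={"Accept": accept})
    resp = conn.getresponse()
    resp.read()
    result = (resp.status, resp.getheader("Location"), resp.getheader("Content-Type"))
    conn.close()
    return result


server = HTTPServer(("127.0.0.1", 0), StandIn)  # port 0: pick any free port
threading.Thread(target=server.serve_forever, daemon=True).start()
port = server.server_address[1]
first = get_once(port, "/Lingyansi", "text/turtle")
second = get_once(port, "/Lingyansi.ttl", "*/*")
server.shutdown()
server.server_close()
print(first)   # (303, 'Lingyansi.ttl', None)
print(second)  # (200, None, 'text/turtle')
```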

If the -L option is added to the curl command, curl will automatically re-issue the command to the new URI specified by the redirect:

```shell
curl -v -L -H "Accept:text/turtle" http://localhost:8984/Lingyansi
```

Here's what the interaction of curl with the server looks like when the -L option is used:

Notice that for the second GET, curl reuses the connection with the server that it left open after the first GET. One of the criticisms of the 303 redirect solution to the httpRange-14 controversy is that it is inefficient - two GET calls to the server are required to retrieve metadata about a single resource.
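The -L behavior (and Postman's automatic redirect following) can be reproduced with Python's urllib, whose default opener follows 303 responses. Again, a hypothetical stand-in server replaces BaseX so the sketch runs on its own. It records every path it receives, which makes the two round trips visible. Unlike curl, urllib opens a new connection for the second request.

```python
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

paths_seen = []  # every path the stand-in server receives, in order


class StandIn(BaseHTTPRequestHandler):
    """Hypothetical minimal stand-in: one resource, one Turtle representation."""

    def do_GET(self):
        paths_seen.append(self.path)
        if self.path == "/Lingyansi":
            self.send_response(303)
            self.send_header("Location", "Lingyansi.ttl")  # relative, like the XQuery
            self.send_header("Content-Length", "0")
            self.end_headers()
        elif self.path == "/Lingyansi.ttl":
            body = b"# Turtle placeholder\n"
            self.send_response(200)
            self.send_header("Content-Type", "text/turtle")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            self.send_error(404)

    def log_message(self, *args):
        pass  # silence per-request logging


server = HTTPServer(("127.0.0.1", 0), StandIn)
threading.Thread(target=server.serve_forever, daemon=True).start()
url = f"http://127.0.0.1:{server.server_address[1]}/Lingyansi"

# build_opener includes a redirect handler that follows the 303 automatically;
# the empty ProxyHandler keeps proxy environment settings away from localhost.
opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
request = urllib.request.Request(url, headers={"Accept": "text/turtle"})
with opener.open(request) as response:
    final_url = response.url
    final_status = response.status
    final_type = response.headers.get("Content-Type")
    response.read()

server.shutdown()
server.server_close()
print(paths_seen)                # ['/Lingyansi', '/Lingyansi.ttl']
print(final_status, final_type)  # 200 text/turtle
```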

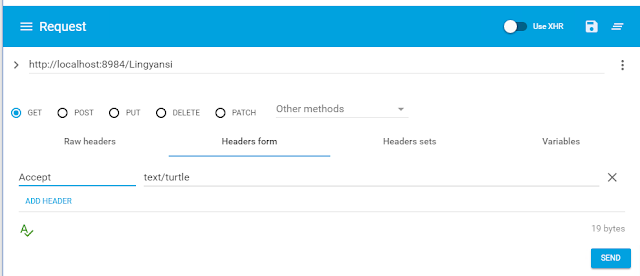

If you aren't into painful command line applications, there are several GUI options for sending HTTP requests. One application that I use is Advanced Rest Client (ARC), a Chrome plugin (unfortunately available for Windows only). Here's what the ARC GUI looks like:

The URI goes in the box at the top and GET is selected using the appropriate radio button. If you select Headers form, a dropdown list of possible request headers appears when you start typing and you can select Accept. In this example I've given a value of text/turtle, but you can also test the other values recognized by the server script: text/html, application/rdf+xml, application/json, and application/ld+json.

When you click SEND, the response is somewhat inscrutably "404 Not found". I'm not sure exactly what ARC is doing here - clearly something more than a single HTTP GET. However, if you click the DETAILS dropdown, you have the option of selecting "Redirects". Then you see that the server issued a 303 See Other redirect to Lingyansi.ttl:

If you change the URI to http://localhost:8984/Lingyansi.ttl, you'll get this response:

This time there is no redirect, an HTTP response code of 200 (OK), a Content-Type: text/turtle response header, and the Turtle document as the body.

There is a third option for sending HTTP requests: Postman. It is also free, and it is available for other platforms besides Windows. Its GUI is similar to that of Advanced Rest Client. However, for whatever reason, Postman always behaves like curl with the -L option: it automatically sends you the final representation without showing you the intervening 303 redirect. There might be some way to make it show you the complete process, but I haven't figured out how to do that yet.

If you are using Chrome as your browser, you can open the three-dot menu in the upper right corner of the browser and select "More tools", then "Developer tools". That will open a pane on the right side of the browser that shows you what's going on "under the hood". Make sure that the Network tab is selected, then load http://localhost:8984/Lingyansi. This is what you'll see:

The Network pane on the right shows that the browser first tried to retrieve Lingyansi, but received a 303 redirect. It then successfully retrieved Lingyansi.htm, which it then rendered as a web page in the pane on the left. Notice that after the redirect, the browser replaced the URI that was typed in with the new URI of the page that it actually loaded.

Who cares?

If after all of this long explanation and technical gobbledygook you are left wondering why you should care about this, you are in good company. Most people couldn't care less about 303 redirects.

As someone who is trying to believe in Linked Data, I'm trying to care about 303 redirects. According to the core principles of Linked Data elaborated by Tim Berners-Lee in 2006, a machine client should be able to "follow its nose" so that it can "look up" information about resources that it learns about through links from somewhere else. 303 redirects facilitate this kind of discovery by providing a mechanism for a machine client to tell the server what kind of machine-readable metadata it wants (and that it wants machine-readable metadata and not a human-readable web page!).

Despite the ten+ years that the 303 redirect solution has existed, there are relatively few actual datasets that properly implement the solution. Why?

I don't control the server that hosts my Bioimages website and I spent several years trying to get anybody from IT Services at Vanderbilt to pay attention long enough to explain what kind of behavior I wanted from the server, and why. In the end, I did get some sort of content negotiation. If you perform an HTTP GET request for a URI like http://bioimages.vanderbilt.edu/ind-baskauf/40477 and include an Accept: application/rdf+xml header, the server responds by sending you http://bioimages.vanderbilt.edu/ind-baskauf/40477.rdf (an RDF/XML representation). However, it just sends the file with a 200 OK response code and doesn't do any kind of redirection (although the correct Content-Type is reported in the response). The behavior is similar in a browser. Sending a GET request for http://bioimages.vanderbilt.edu/ind-baskauf/40477 results in the server sending http://bioimages.vanderbilt.edu/ind-baskauf/40477.htm, but since the response code is 200, the browser doesn't replace the URI entered in the box with the URI of the file that it actually delivers. It seems like this solution should be OK, even though it doesn't involve a 303 redirect.

Unfortunately, from a Linked Data point of view, at the Bioimages server there are always two URIs that denote the same information resource, and neither of them can be inferred to be a non-information resource by virtue of a 303 response, as suggested by the httpRange-14 resolution. On a more practical level, users end up bookmarking two different URIs for the same page (since when content negotiation takes place, the browser doesn't change the URI to the one ending with .htm) and search engines index the same page under two different URIs, resulting in duplicate search results and potentially lower page rankings.

Another circumstance where failing to follow the Cool URIs recommendation caused a problem is when I tried to use the CETAF Specimen URI Tester on Bioimages URIs. The tester was created as part of an initiative by the Information Science and Technology Commission (ISTC) of the Consortium of European Taxonomic Facilities (CETAF). When their URI tester is run on a "cool" URI like http://data.rbge.org.uk/herb/E00421509, the URI is considered to pass the CETAF tests for Level 3 implementation (redirect and return of RDF). However, a Bioimages URI like http://bioimages.vanderbilt.edu/ind-baskauf/40477 fails the second test of the suite because there is no 303 redirect, even though the URI returns RDF when the media type application/rdf+xml is requested. Bummer. Given the number of cases where RDF can actually be retrieved from URIs that don't use 303 redirects (including all RDF serialized as RDFa), it probably would be best not to build a tester that relied solely on 303 redirects. But until the W3C changes its mind about the httpRange-14 decision, a 303 redirect is the kosher way to find out from a server that a URI represents a non-information resource.
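The inference at stake can be summarized in a few lines. This Python sketch encodes my reading of the httpRange-14 resolution for a single GET response: a 2xx means the URI identifies an information resource, while a 303 leaves the URI free to identify anything and points to a description. It is not the CETAF tester's actual logic, and the inputs are the behaviors described in this post rather than live requests.

```python
from typing import Optional


def what_status_implies(status: int, location: Optional[str] = None) -> str:
    """Rough httpRange-14 reading of one GET response (hypothetical helper)."""
    if 200 <= status < 300:
        return "information resource"
    if status == 303:
        return f"could be any resource; see {location}"
    return "no conclusion"


print(what_status_implies(200))                   # Bioimages-style 200 OK
print(what_status_implies(303, "Lingyansi.rdf"))  # Guid-O-Matic-style redirect
```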

So I guess the answer to the question "Who cares?" is "people who care about Linked Data and the Semantic Web". The problem is that there just aren't that many people in that category, and even fewer who also care enough to implement the 303 redirect solution. Then there are the people who believe in Linked Data, but were unhappy about the httpRange-14 resolution and don't follow it out of spite. And there are also the people who believe in Linked Data, but don't believe in RDF (i.e. provide JSON-LD or microformat metadata directly as part of HTML).

A potentially important thing

Now that I've spent time haranguing about the hassles associated with getting 303 redirects to work, I'll mention a reason why I still think the effort might be worth it.

The RDF produced by Guid-O-Matic pretends that eventually the application will be deployed on a server that uses a base URI of http://lod.vanderbilt.edu/historyart/site/ . (Eventually real URIs will be minted for the Chinese temples, but they won't be the ones used in these examples.) So if we pretend that at some point in the future the Guid-O-Matic web service were deployed on the web (via port 80 instead of port 8984), an HTTP GET request could be made to http://lod.vanderbilt.edu/historyart/site/Lingyansi instead of http://localhost:8984/Lingyansi, and the server script would respond with the documents shown in the examples.

If you look carefully at the Turtle that the Guid-O-Matic server script produces for the Lingyan Temple, you'll see these RDF triples (among others):

```turtle
<http://lod.vanderbilt.edu/historyart/site/Lingyansi>
    rdf:type schema:Place;
    rdfs:label "Lingyan Temple"@en;
    a geo:SpatialThing.

<http://lod.vanderbilt.edu/historyart/site/Lingyansi.ttl>
    dc:format "text/turtle";
    dc:creator "Vanderbilt Department of History of Art";
    dcterms:references <http://lod.vanderbilt.edu/historyart/site/Lingyansi>;
    dcterms:modified "2016-10-19T13:46:00-05:00"^^xsd:dateTime;
    a foaf:Document.
```

You can see that maintaining the distinction between the version of the URI with the .ttl extension and the URI without the extension is important. The URI without the extension denotes a place and a spatial thing labeled "Lingyan Temple". It was not created by the Vanderbilt Department of History of Art, it is not in RDF/Turtle format, nor was it last modified on October 19, 2016. Those latter properties belong to the document that describes the temple. The document about the temple is linked to the temple itself by the property dcterms:references.

Because maintaining the distinction between a URI denoting a non-information resource (a temple) and a URI that denotes an information resource (a document about a temple) is important, it is a good thing if you don't get the same response from the server when you try to dereference the two different URIs. A 303 redirect is a way to clearly maintain the distinction.

Being clear about the distinction between resources and metadata about resources has very practical implications. I recently had a conversation with somebody at the Global Biodiversity Information Facility (GBIF) about the licensing for Bioimages. (Bioimages is a GBIF contributor.) I asked whether he meant the licensing for images in the Bioimages website, or the licensing for the metadata about images in the Bioimages dataset. The images are available with a variety of licenses ranging from CC0 to CC BY-NC-SA, but the metadata are all available under a CC0 license. The images and the metadata about images are two different things, but the current GBIF system (based on CSV files, not RDF) doesn't allow for making this distinction on the provider level. In the case of museum specimens that can't be delivered via the Internet or organism observations that aren't associated with a particular form of deliverable physical or electronic evidence, the distinction doesn't matter much because we can assume that a specified license applies to the metadata. But for occurrences documented by images, the distinction is very important.

What I've left out

This post dwells on the gory details of the operation of the Guid-O-Matic server script, and tells you how to load outdated XML data files about Chinese temples, but doesn't talk about how you could actually make the web service work with your own data. I may write about that in the future, but for now you can go to this page of instructions for details of how to set up the CSV files that are the ultimate source of the generated RDF. The value in the baseIriColumn in the constants.csv file needs to be changed to the path of the directory where you want the database XML files to end up. After the CSV files are created and have replaced the Chinese temple CSV files in the Guid-O-Matic repo, you need to load the file load-database.xq from the Guid-O-Matic repo into the BaseX GUI. When you click on the run button (green triangle) of the BaseX GUI, the necessary XML files will be generated in the folder you specified. The likelihood of success in generating the XML files is higher on a Windows computer because I haven't tested the script on Mac or Linux, and there may be file path issues that I still haven't figured out on those operating systems.

The other thing that you should know if you want to hack the code is that the restxq-db.xqm imports the Guid-O-Matic modules that are necessary to generate the response body RDF serializations from my GitHub site on the web. That means that if you want to hack the functions that actually generate the RDF (located in the module serialize.xqm), you'll need to change the module references in the prologue of the restxq-db.xqm module (line 6) so that they refer to files on your computer. Instead of

```xquery
import module namespace serialize = 'http://bioimages.vanderbilt.edu/xqm/serialize' at 'https://raw.githubusercontent.com/baskaufs/guid-o-matic/master/serialize.xqm';
```

you'll need to use

```xquery
import module namespace serialize = 'http://bioimages.vanderbilt.edu/xqm/serialize' at '[file path]';
```

where [file path] is the path to the file in the Guid-O-Matic repo on your local computer. On my computer, it's in the download location I specified for GitHub repos, c:/github/guid-o-matic/serialize.xqm. It will probably be somewhere else on your computer. Once you've made the change and saved the new version of restxq-db.xqm, you can hack the functions in serialize.xqm and the changes will take effect in the documents sent from the server.

Note

[1] The actual request header has commas not shown here. But BaseX reads the comma separated values as parts of a sequence, and when it reports the sequence back, it omits the commas.
