This is the third part of a series of posts intended to help you manage and upload data to Wikidata using spreadsheets. You probably won't get as much out of this post if you haven't already done the do-it-yourself exercises in the first two posts, but since this is a more general topic, you might still find it useful even if you haven't read the earlier ones.

Determining scope

The target audience of this post is people or groups who have particular defined datasets that they would like to upload and "manage" on Wikidata. I put "manage" in quotes because no one can absolutely manage any data on Wikidata, since by definition it is a knowledge graph that anyone can edit. So no matter how much we care about "our" data, once we put it into Wikidata, we need to be at peace with the fact that others may edit "our" items.

There is a good chance that the data we are interested in "managing" may be some kind of data about which we have special knowledge. For example, if we are part of a museum, gallery, or library, we may have items in our collection that are worth describing in Wikidata. It is unlikely that others will have better information than we do about things like accession numbers and license information. I'm going to refer to this kind of information as "authoritative" data -- data that we probably know more about than other Wikidata users. There may be other data that we are very interested in tracking, but about which we may have no more information than anyone else.

In both of these situations, we have a vested interest in monitoring additions and changes made by others outside our group or organization. In the case of our "authoritative" data, we may want to be on the lookout for vandalism that needs to be reverted. As we track other non-authoritative data, we may discover useful information that's effectively crowd-sourced and available without cost (time or financial) to us.

There will also be other statements that involve properties that we aren't interested in tracking. That doesn't mean this other information is useless -- it may just not be practical for us to track it since we can't really contribute to it nor gain much benefit from it.

So an important part of planning a project to upload and manage data in Wikidata is determining the scope of statements you plan to monitor. This is true regardless of how you are doing that managing, but in the case of using CSV spreadsheets, the defined scope will determine what column headers will be present in the spreadsheet. So prior to moving forward with using spreadsheets to write data to Wikidata, we need to decide what properties, qualifiers, and references we plan to document in those spreadsheets.

Defining a group of items of interest

The first thing we need to decide is what kind of items are we interested in. There may be an obvious target item type: works in a gallery, specimens in a museum, articles published by researchers in an institution, etc. There will also often be secondary item types associated with the primary one: artists associated with gallery works, collectors with specimens, authors and journals with articles, for example. After determining the primary and secondary item types, the next step is to figure out the value of P31 (instance of) goes with each type of item of interest. In some cases, this might be obvious (Q5 = human for authors, for example). In other cases it may not be so clear. Is a book Q571 (book) or is it Q3331189 (version, edition, or translation)? The best answer to this question is probably "what are other people using for items similar to mine?" We'll talk about some tools for figuring that out later in the post.

There are two useful ways to define a group of related items. The simplest is enumeration: creating a list of Q IDs of items that are similar. Less straightforward but more powerful is to define a graph pattern that can be used in SPARQL to designate the group. We are not going to go off the deep end on SPARQL in this post, but it plays such an important role in using Wikidata that we need to talk about it a little bit.

Wikidata is a knowledge graph, which means that its items are linked together by the statements that connect them. Thus the links involve the properties that form the statements. The simplest connection between two statements involves a single statement using a single property. For example, if we are interested in works in the Vanderbilt Fine Arts Gallery, we can define that group of items by stating that a work is in the collection (P195) of the Vanderbilt Fine Arts Gallery (Q18563658). We can abbreviate this relationship by the shorthand:

?item wdt:P195 wd:Q18563658.

The ?item means that the item is the thing we want to know, and the other two parts lay out how the item is related to the gallery. This shorthand is the simplest kind of graph pattern that we can create to define a group of items.

We can use this graph pattern to get a list of the names of works in the gallery using the Wikidata Query Service. Click on this link and it will take you to the Query service with the appropriate query filled in. If you look at the query in the upper right, you'll see our graph pattern stuck between a pair of curly brackets. (The other line is a sort of "magic" line that produces English labels for items that are found.) If you are wondering how you might have known how to set up a query like this, you can drop down the Examples list and select the first query: "Cats". My query is just a hack of that one where I substituted my graph pattern for the one that defines cats (P31=instance of, Q146=house cat).

We can narrow down the scope of our group if we add another requirement to the graph pattern. For example, if we want our group of items to include only paintings that are in the Vanderbilt gallery, we can add another statement to the graph pattern: the item must also be an instance of (P31) a painting (Q3305213).

?item wdt:P31 wd:Q3305213.

The query using both restrictions is here.

These kinds of graph patterns are used all the time in Wikidata, sometimes when you don't even know it. If you visit the Vanderbilt Fine Arts Gallery WikiProject paintings page and look just below the star, you'll see that the graph pattern that we just defined is actually what generates that page. We will use such patterns later on to investigate property use by groups of items that are defined by graph patterns.

What properties are used with different kinds of items?

Recoin (Relative Completeness Indicator)

The simplest way to see what kind of properties tend to be used with certain kinds of items is to look at the page of an item of that kind and see what properties are there. That isn't a very systematic approach, but there is a gadget called Recoin that can make our investigation more robust. Recoin can be installed by clicking on the Preferences link at the top of any Wikidata page, then selecting the Gadgets tab. Check the box for Recoin, then click Save.

After you enable Recoin, you can click the Recoin link just below the item description and a list will drop down showing the fraction of items having various properties for all items having the same

P31 value. The example above shows values for instances of "art museum". Of course, this list shows properties that are missing for that page, so you would need to find a page with most properties missing to get a more full list. If you can create a new item having only a

P31 value, you will be able to get the complete list.

Wikidata:WikiProjects

A more systematic approach is to look for a WikiProject that is interested in the same kind of items as you. The

list of WikiProjects is somewhat intimidating, but if you succeed in finding the right project, it will often contain best-practices guidelines for describing certain types of items. For example, if you expand Cultural WikiProjects, then GLAM (galleries, libraries, archives, and museums) WikiProjects, you will see one called "Sum of all paintings". They have a list of

recommendations for how to describe paintings. You can find similar lists in other areas and if you are lucky, you will find a list of extensive data model guidelines, such as the Stanford Libraries's data

models for academia.

A small amount of time spent searching here will pay large dividends later if you start by using the consensus properties adopted by the community in which you are working. The items you put into Wikidata will be much more likely to be found and linked to by others if you describe them using the same model as is used with other items of the same type.

Determining what properties are used "in the wild"

If you find a WikiProject related to your type of interest, you will probably have a good idea of the properties that group says you should be using for statements about that type of item. However, you might discover that in actuality some of those properties are not really used much. That could be the case if the values are not easily available or if it's to labor-intensive to disambiguate available string values with item values in Wikidata. So it is pretty useful to know what properties are actually being used by items similar to the ones you are interested in creating/editing.

I have written a Python script,

count_entities.py, that you can use to determine what properties have been used to describe a group of related items and the number of items that have used each property. The script details are described

here. Before using the script with your own set of items, you will need to define your category of items using one of the two methods I described earlier. But for testing purposes, you can try running the script using the default built-in group: works in the Vanderbilt University Fine Arts Gallery.

To run the script, you need the following:

- Python 3 installed on your computer with the ability to run it at the command line.

- The requests module installed using PIP, Conda, or some other package manager.

- a plain text editor if you want to define the group by SPARQL graph pattern. You can use the built-in text editors TextEdit on Mac or Notepad on Windows.

- a spreadsheet program to open CSV files. LibreOffice Calc is recommended.

- knowledge of how to change directories and run a Python script from your computer's console (Terminal on Mac, Command Line on Windows).

You do NOT need to know how to code in Python. If you are uncertain about any of these requirements, please read the first post in this series, which includes a lot of hand-holding and additional information about them.

To run the script, go to the

script's page on GitHub and right click on the

Raw button. Select

Save Link As... and save the script in a directory you can easily navigate to using your console. The script will general CSV files as output, so it is best to put the script in a relatively empty directory so you can find the files that are created.

To test the script, go to your console and navigate to the directory where you saved the script. Enter

python count_entities.py

(or python3 if your installation requires that). The script will create a file called properties_summary.csv, which you can open using your spreadsheet program.

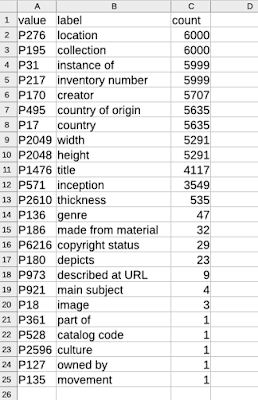

The table shows all of the properties used to make statements about items in the gallery and the number of items that use each property. Although there are (currently) 6000 items in the group, they use properties fairly consistently, so there aren't that many properties on the list. Other groups may have much longer lists. But often there will be a very long tail of properties used only once or a few times.

Unless you want to keep investigating the Vanderbilt Fine Arts Gallery items, you must define your group using one of the two options described below:

--csv (or its brief form

-C) to enumerate items in the group by Q ID or

--graph (or its brief form

-G) to define the group by a graph pattern.

Defining a group by a list of Q IDs

Let's try using the script by defining the group by enumeration. Download the file

bluffton_presidents.csv from

here into the same directory as the script, using the

Raw button as before. NOTE: if you are using a Mac, it may automatically try to change the file extension from

.csv to

.txt in the

Save As... dialog. If so, change the format to

All Files and change the extension back to

.csv before saving.

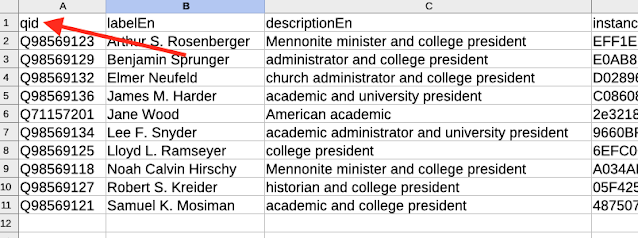

If you open the CSV that you downloaded, you'll see that the first column has the header qid. The script requires that the Q IDs be in a column with this header. The position of that column and the presence of other columns do not matter. The items in the column must be Q IDs, including the initial Q and omitting any namespace abbreviations like wd: .

Run the script again using this syntax:

python count_entities.py --csv bluffton_presidents.csv

Note that the previous output file will be overwritten when you run the script again.

This time the script produces a list of properties appropriate for people.

Defining a list by SPARQL graph pattern

Open your text editor and paste in the following text:

?qid wdt:P108 wd:Q29052.

?article wdt:P50 ?qid.

?article wdt:P31 wd:Q13442814.

The first line limits the group to items whose employer (P108) is Vanderbilt University (Q29052). The second line specifies that those items must be authors of something (P50). The third line limits those somethings to being instances of (P31) scholarly articles (Q13442814). So with this graph pattern, we have defined our group as authors of scholarly articles who work (or worked) at Vanderbilt University.

Save the file using the name graph_pattern.txt in the same directory as the script. Run the script using this syntax:

python count_entities.py --graph graph_pattern.txt

Again, the script will overwrite the previous output file.

This time, the list of properties is much longer because the group is larger and more diverse than in the last example. Despite whatever advice any WikiProjects group may give about best-practices for describing academics, we can see that there is a very small number of properties that are actually given for most of these academic authors. Note that in many cases, given name and family name statements are generated automatically by bots. So if we wanted to create "typical" records, we would only need to provide the top six properties.

If you are unfamiliar with creating SPARQL query graph patterns, I recommend experimenting at the Wikidata Query Service page. The Examples dropdown there shows a lot of examples. However, in most cases, we can define the groups we want with simple graph patterns of only one to three lines.

Examining property use in the wild

Before deciding for sure what properties you want to write/monitor, it is good to know what typical values are for that property. It is also critical to know whether it is conventional to use qualifiers with that property. The count_entities.py script can also collect that information if you use the --prop option (or its brief form -P). I will demonstrate this with the default group (Vanderbilt Fine Arts Gallery works), but you can supply a value for either the --csv or --graph option to define your own group.

One of the most important properties to understand about a group is P31 (instance of). To see the distribution of values for P31 in the gallery, issue this command in your console:

python count_entities.py --prop P31

(or python3 if your installation requires that). The script generates a file whose name starts with the the property ID and ends in _summary.csv (P31_summary.csv in this example). Here's what the results look like:

We can see that most items in the gallery that are described in Wikidata are prints. There is a long tail of other types with a very small number of representatives (e.g. "shoe"). Note that it is possible for an item to have more than one value for P31, so the total count of item by type could be greater than the total number of items.

If any statements using the target property have qualifiers, the script will create a file listing the qualifiers used and the number of items with statements using those qualifiers. In the case of P31, there were no qualifiers used, so no file was created. Let's try again using P571, inception.

python count_entities.py -P P571

The result in the P571_summary.csv file is not very useful.

It listed the 401 different inception dates (as of today) for works in the gallery. However, the P571_qualifiers_summary.csv is more interesting.

This gives me very important information. For most of the 401 dates, they were qualified by defining an uncertainty range using earliest date (P1319) and latest date (P1326). The other commonly used qualifier was P1480 (sourcing circumstances). Examining the property description, we see that P1480 is used to indicate that a date is "circa" (Q5727902). So all three of these qualifiers are really important and should probably be designated to be used with

P571.

For properties that have a large number of possible values (e.g. properties that have unique values for every item), you probably don't want to have the script generate the file of values if all you want to know is the qualifiers that are used. You can get only the qualifiers output file if you use the --qual (or -Q) option (with no value needed to go with it). A good example for this is P217 (inventory number). Every work has a unique value for this property, so there is no reason to download the values for the property. Using the --qual option, I can find out what qualifiers are used without recording the values.

python count_entities.py -prop P217 --qual

The P217_qualifiers_summary.csv file shows that there is a single qualifier used with P217: collection (P195).

Putting it together

The reason for including this post in the series about writing the Wikidata using spreadsheets is that we need to decide what properties, qualifiers, and references to include in the metadata description description of the CSV that we will use to manage the data. So I will demonstrate how to put this all together to create the spreadsheet and its metadata description.

I am interested in adding publications written by Vanderbilt researchers to Wikidata. Since data from

Crossref is easily obtainable when DOIs are known, I'm interested in knowing what properties are used with existing items that have DOIs and were written by Vanderbilt researchers. So the first step is to define the group for the items. The first thing I tried was the graph pattern method. Here is my graph pattern:

?person wdt:P1416 wd:Q16849893. # person affiliation VU Libraries

?item wdt:P50 ?person. # work author person

?item wdt:P356 ?doi. # work has doi DOI.

I tested this pattern at the Query Service with

this query. However, when I ran the script with the

--graph option to determine property use, it timed out.

Determining property use

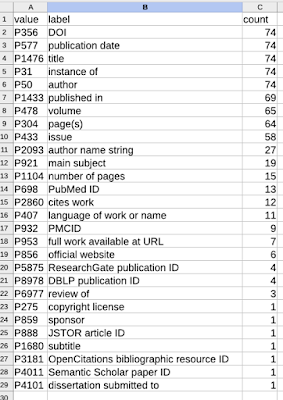

Since Plan A did not work, I moved on to Plan B. I downloaded the results from the query that I ran at the Query Service and put them into a CSV file. I then did a bit of massaging to pull the Q IDs into their own column with the header qid. This time when I ran the script with the --csv option, I got some useful results.

Based on these results I probably need to plan to upload and track the first 10 properties (through author name string). For P31 and P1433, it would probably be useful to see what kind of values are usual, but for the rest I just need to know if they are typically used with qualifiers or not.

The results for P31 indicate that although both scholarly article (Q13442814) and academic journal article (Q18918145) are used to describe these kind of academic publications, scholarly article seems to be more widely used. There were no qualifiers used with P31. Not unexpectedly, a check of P1433 revealed many library-related journals. One item used qualifiers with P1433, but those qualifiers, P304 (pages), P433 (issue), and P478 (volume), appear to be misplaced since those properties are generally used directly in statements about the work.

The only other items with qualifiers were P50 (author, shown above) and P2093 (author name string), which also had the qualifier P1545 (series ordinal). So this simplifies the situation quite a bit -- I really only need to worry about qualifiers with the two author-related terms, which are going to require some special handling anyway.

Creating a config.json file for the spreadsheet

I now have enough information to know how I want to lay out the spreadsheet(s) to contain the data that I'll upload/manage about journal articles. To understand better how to structure the

config.json file that I'll use to generate the spreadsheets and metadata description file, I looked at

one of the articles to help understand the value types for the properties.

The style of the values on the page help me to know the value type. The item values are hyperlinked text. The string values are unlinked black text. Monolingual text values look like strings, but have their language following them in parentheses.

To decide about the number of spreadsheets needed, I thought about which properties were likely to have multiple values per article item. Both author (item) and author name string could have multiple values. So I put them into separate spreadsheets. The rest of the properties will probably have only one value per article (or at least only one value that I'm interested in tracking). So here is what the overall structure of the config.json file looks like:

{

"data_path": "",

"item_source_csv": "",

"item_pattern_file": "",

"outfiles": [

{

"manage_descriptions": true,

"label_description_language_list": [

"en"

],

"output_file_name": "articles.csv",

"prop_list": [

]

},

{

"manage_descriptions": false,

"label_description_language_list": [],

"output_file_name": "authors.csv",

"prop_list": [

]

},

{

"manage_descriptions": false,

"label_description_language_list": [],

"output_file_name": "author_strings.csv",

"prop_list": [

]

}

]

}

I don't want to manage descriptions on the two author-related CSVs, and am only including the labels to make it easier to identify the article. I'm only working in English, so that also simplifies the label situation.

Here are a few of the property descriptions that I used that illustrate several value types for the statement properties:

{

"pid": "P31",

"variable": "instance_of",

"value_type": "item",

"qual": [],

"ref": []

},

{

"pid": "P356",

"variable": "doi",

"value_type": "string",

"qual": [],

"ref": [

{

"pid": "P854",

"variable": "referenceUrl",

"value_type": "uri"

},

{

"pid": "P813",

"variable": "retrieved",

"value_type": "date"

}

]

},

{

"pid": "P577",

"variable": "published",

"value_type": "date",

"qual": [],

"ref": [

{

"pid": "P854",

"variable": "referenceUrl",

"value_type": "uri"

},

{

"pid": "P813",

"variable": "retrieved",

"value_type": "date"

}

]

},

{

"pid": "P1476",

"variable": "title_en",

"value_type": "monolingualtext",

"language": "en",

"qual": [],

"ref": [

{

"pid": "P854",

"variable": "referenceUrl",

"value_type": "uri"

},

{

"pid": "P813",

"variable": "retrieved",

"value_type": "date"

}

]

},

Following typical practice, I'm skipping references for P31 (instance of). The rest of the properties only have reference properties for P854 (reference URL) and P813 (retrieved). Some existing items may have references for P248 (stated in), but since I'm going to be getting my data from Crossref DOIs, I'll probably just use the URL form of the DOI in all of the references. So I'll only use a column for P854. Notice also that the P1476 (title) property must have the extra language key/value pair since it's a monolingual string. If the title of the journal isn't in English, I'm stuck but I'll deal with that problem later if it arises.

The final version of my config.json file is

here. I will now try running the

convert_json_to_metadata_schema.py script discussed in the last post to generate the headers for the three CSV files and the metadata description file so that I can test them out.

Test data

To test whether this will work, I'm going to manually add data to the spreadsheet for an old article of mine that I know is not yet in Wikidata. It's

https://doi.org/10.1603/0046-225X-30.2.181 . Here's

a file that shows what the data look like when entered into the spreadsheet. You'll notice that I used the DOI as the reference URL. As I said in the last section, I intend to eventually automate the process of collecting the information from Crossref, but even though I got the information manually, the DOI URL will redirect to the journal article landing page, so anyone checking the reference will be able to see it in human-readable form. So this is a good solution that's honest on the data source and that also allows people to check the reference when the click on the link.

Please note that I did NOT fill in the author CSV yet, even though I already know what the author items are. The reason is that if I filled it in without the article item Q ID in the

qid column, the VanderBot API-writing script would create two new items that consisted only of author statements about unlabeled items. Instead, I created the item for the article first, then added the article Q ID in the

qid column for both author rows in the

authors.csv file. You can see what that looks like in

this file. Since I knew the author item Q IDs for both authors, I could put them both in the authors.csv file, but if I had only known the name strings for some or all of the authors, I would have had to put them in the

author_strings.csv file, again along with the article Q IDs after the article record had been written.

The final product seems to have turned out according to the plan. The page

is here.

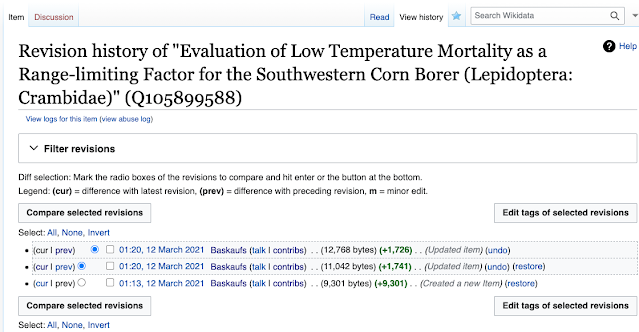

If we examine the page history of the new page, we see that there were two edits. The two smaller, more recent ones were the two author edits and the first, larger edit was the one that created the original item.

What's next?

You can try using the config.json file to generate your own CSV headers and metadata description files if you want to try uploading a journal article yourself. Just make sure that it isn't already in Wikidata. You can also hack the config.json file to use different properties, qualifiers, and references for a project of your own. I do highly recommend that you try writing only a single item at first so that if things do not go according to plan, the problems can easily be fixed manually.

Although we have now set up spreadsheets and a metadata description JSON file that can write data to Wikidata, there is still too much manual work for this to be productive. In subsequent posts, I'll talk about how we can automate things we have thus far been doing by hand.

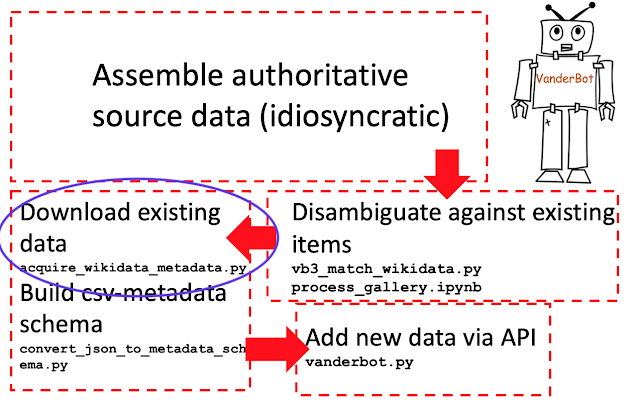

The diagram above shows the general workflow that I've been using in the various projects with which I've used the spreadsheet approach. We have basically been working backwards through that workflow, so in the next post I will talk about how we can use the Query Service to download existing data from Wikidata so that we don't duplicate any of the items, statements, or references that already exist in Wikidata.

The image above is from a presentation I gave in Feb 2021 describing the "big picture", rationale, and potential benefits of managing data in Wikidata using spreadsheets. You can view that video

here.

No comments:

Post a Comment