For a video walk-through of the previous blog post and this one, see this page.

In the previous post, I described how to create a Wikimedia bot password and use it to write spreadsheet data to the test Wikidata instance: https://test.wikidata.org/. The process required setting up a JSON metadata description file that mapped the CSV column headers to the RDF variant of the Wikibase data model. The VanderBot Python script used that mapping file to "understand" how to prepare the CSV data to be written to the Wikidata API. The script also recorded its interactions with the API by storing identifiers associated with the knowledge graph entities in the CSV along with the data.

This post will continue in the "do it yourself" vein of the previous post. In order to successfully complete the activities in this post, you must:

- have a credentials plain text file (prepared in the last post)

- have Python installed and know how to run a script from the command line (Python programming skills not required)

- have downloaded the VanderBot script to a directory on your local drive where you plan to work.

- understand that the edits you make are your responsibility just as if you had made them using the graphical interface. If you mess something up, you need to fix it -- mostly likely manually since VanderBot is designed to upload new data, not change or delete existing data.

- have practiced on the test Wikidata instance enough to feel comfortable using VanderBot to make edits.

If any of these things are not true, then you need to go back and read the

first blog post to prepare.

Options when running the script

In the last post, we practiced with VanderBot using all of the settings defaults. However, you may want to change some of those defaults depending you your situation. The most obvious change is to suppress the display of the giant blobs of response JSON from the API that fly up the screen as the script runs. You can redirect most of the output to a log file using the --log option. The log file will record the JSON output and at the end will include a summary of known errors that occurred throughout the writing process. (The same error report will be shown on the console screen, too.) You may choose to ignore the log file most of the time -- it will simply be overwritten the next time the script is run. However, it may be useful if the script terminates due to an error.

Most of the other options allow you to designate different file names or locations for the metadata description file and credentials file. It may be convenient to keep the credentials file in the same directory as the other files (the working directory option), but if you are using version control (e.g. GitHub) you should keep it elsewhere. You may wish to use different file names if you have multiple bot passwords or have different metadata description files for different CSVs.

The --update option is used to control whether the label and descriptions in the CSV will overwrite different values for existing items in Wikidata. It defaults to suppress updates, but we will talk later about when you might want to use a different option.

Options:

long form short values default

--log -L log filename, or path and appended none

filename

--json -J JSON metadata description filename "csv-metadata.json"

or path and appended filename

--credentials -C name of the credentials file "wikibase_credentials.txt"

--path -P credentials directory: "home", "home"

"working", or path with trailing "/"

--update -U "allow" or "suppress" automatic "suppress"

updates to labels and descriptions

Option examples:

Note: some installations of Python require using python3 instead of python in the command.

python vanderbot.py --json project-metadata.json --log ../log.txt

Metadata description file is called project-metadata.json and is in the current working directory. Progress and error logs saved to the file log.txt in the parent directory.

python vanderbot.py -P working -C wikidata-credentials.txt

Credentials file called wikidata-credentials.txt is in the current working directory.

python vanderbot.py --update allow -L update.log

Progress and error logs saved to the file update.log in the current working directory. Labels and descriptions of existing items in Wikidata are automatically replaced with local values if they differ. Notice that the long and short forms of the options can be mixed and are interchangeable.

Writing to the "real" Wikidata

Once you have set everything up, it is a simple matter to switch from writing from the test.wikidata.org API to the "real" www.wikidata.org API. All that is necessary is to change test to www in the first line of the configuration file:

endpointUrl=https://www.wikidata.org

The username and password lines can stay the same.

However, we cannot use the same CSV and metadata description files as before because the property and item IDs are different in the real Wikidata. We also don't yet want to create new items until we are comfortable with making several edits in the real Wikidata. Fortunately, there are several items in Wikidata that are designated as "sandbox" items, i.e. their metadata can be change to anything by anyone without consequence. They are generally lightly used, so you can edit them and still have time to examine what you have done before someone changes them to something else. The first sandbox item (

Q4115189) is better known than the other two, so we will use sandbox items 2 and 3 in our practice.

Wikidata sandbox items:

Q ID Label

-------- ------------------

Q4115189 Wikidata Sandbox

Q13406268 Wikidata Sandbox 2

Q15397819 Wikidata Sandbox 3

With respect to etiquette regarding the sandbox items, I don't know that there are particular rules, but I would say that it would not be acceptable to change their labels, since that is the primary means by which users will know what they are. I would say that anything else, including descriptions and aliases is probably open to editing.

I would avoid adding a large number of statements to the sandbox items and then just leaving them, although a few edits probably don't matter. Probably the best thing to do after you are done playing with editing the sandbox items is to go the the View history page and undo your change (if you've only made one edit) or restore the last previous version before you started playing with the item if you've made a lot of changes. I'll show how to do that when we get to that point.

After you are comfortable playing with the sandbox items, we will try adding new real items.

Describing a CSV using simpler JSON format

We could use the web tool again to create a new metadata description file based on the real Wikidata properties, but I will tell you about another tool that you can use that requires many fewer button clicks. I created a simplified configuration file format that can be used to generate the standard metadata description file based on some rules about how to construct the column header names, assumptions about labels and descriptions, and one simplification of references. (The detailed specifications for the configuration file format are here.) The configuration file that we will be using has the default name config.json and can be viewed in this gist.

It is not necessary for you to edit this file to use it for the practice exercise. You can simply download it (right-click on the Raw button and select Save file as...). Download it into a directory you can access easily from your home folder -- you can use the same one you used last time, although it might get a bit cluttered.

If you understand JSON, the file structure will make sense to you. Even if you don't, you can probably copy and paste parts of it to change it to fit your needs. (It includes examples of most of the object types including two we haven't used before: monolingual text and quantity.) If you copy and paste, you will mostly need to be careful about placement of commas. Indentation is optional in JSON and is only used to make the structure more apparent.

For now, we can ignore the first three key:value pairs. The rest of the JSON after outfiles describes two CSV files that will be mapped by the metadata description file: artworks.csv and works_depicts.csv . artworks.csv contains data about statements involving 5 properties: P31 (instance of), P217 (inventory number), P1476 (title), P2048 (height), and P571 (inception). depicts.csv contains data about only one kind of statement: P180 (depicts). You may be wondering why I chose to put the depicts statements in a separate CSV. That is because all of the other properties will typically have only one value per item, while a particular artwork may depict several things. So in the first CSV, there will only be one row for each item, while in the second CSV there is an indefinite number of rows per item.

{

"pid": "P571",

"variable": "inception",

"value_type": "date",

"qual": [

{

"pid": "P1319",

"variable": "earliest_date",

"value_type": "date"

},

{

"pid": "P1326",

"variable": "latest_date",

"value_type": "date"

}

],

"ref": [

{

"pid": "P248",

"variable": "statedIn",

"value_type": "item"

}

]

}

Each property has a pid (property ID), a column header name (variable) and a value_type. The value_type will determine the details of the number of data columns needed to represent that kind of value and the kind of data that will be stored in those columns. Each property can also have zero or more qualifier properties and zero or more reference properties associated with it. In the snippet above, inception (P571) statements will have two associated qualifier (earliest date and latest date) properties and one reference property (stated in). The Wikibase model allows many references per statement, but this configuration file format restricts you to a single reference with as many properties as you want.

The structure of the qualifier and reference properties are the same as the statement properties (ID, variable, and value type) with the only restriction being that you must use properties that are appropriate for use in qualifiers or references.

{

"manage_descriptions": true,

"label_description_language_list": [

"en",

"es"

],

"output_file_name": "artworks.csv",

"prop_list": [

The situation with labels and descriptions is a little more complicated. If you have more than one data table, you probably only really want to manage the labels and descriptions in one of the tables. In this case, it would make the most sense to manage them in the artworks.csv table, since it has a row for every item and the other table may have zero or more than one row per item. So the manage_description value for the first table is set to true.

{

"manage_descriptions": false,

"label_description_language_list": [

"en"

],

"output_file_name": "works_depicts.csv",

"prop_list": [

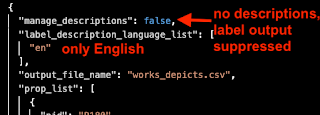

In the second table (works_depicts.csv) the manage_descriptions value is set to false. In that table, there will be a label column, but it will be set to be ignored during CSV processing and will only be to help humans understand what is in the rows. The label_description_language_list value contains a list of the ISO language codes for all languages to be included. If manage_description is set to true for a table, there will be both a label and description in the table for every language. If it is set to false for a table, there will only be a label for the default language. The default language of the suppressed output labels is set by the --lang option (see below). Any languages supplied in the JSON (as in the example above) will be ignored.

Generating the metadata description file and CSV headers

To generate the metadata description files and the CSV files, we need to download another script from GitHub called convert_json_to_metadata_schema.py . Download it into the same directory where you downloaded the config.json file. At the command line, run the following command if you used the default name config.json

python convert_json_to_metadata_schema.py

If you saved the input configuration file with a different name, or if you want a different name than csv-metadata.json to be used for the output metadata description file, use the following command line options:

long form short values default

--config -C input configuration file path config.json

--meta -M output metadata description fille path csv-metadata.json

--lang -L language of labels when output suppressed en

After you run the script, it will have generated the csv-metadata.json file and also variants of the two CSV files that were specified in the input config.json file: artworks.csv and works_depicts.csv . To prevent accidentally overwriting any existing data, the letter "h" is prepended to the file names of the generated CSVs (hartworks.csv and hworks_depicts.csv). So before you use the files, you need to delete the initial "h" from the file names.

The generated CSV files contain only the column headers with no data. But you can still open them with your spreadsheet software to look at them.

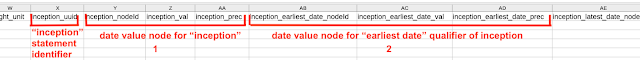

If you compare the columns in the created spreadsheet with the source JSON configuration file, you should see that the columns are in the order that they were designated in the JSON. The variable values are joined to any parent properties by underscores (e.g. earliest_date appended to inception to form inception_earliest_date). In cases where more than one column is required to describe a value node, the _nodeId, _val, etc. suffixes are added to the corresponding root column

Since we chose true as the value of manage_descriptions for the artworks.csv file, the generated spreadsheet includes both labels and descriptions for the two languages we designated. The script automatically prepends label_ and description_ to the language codes to generate the column headers.

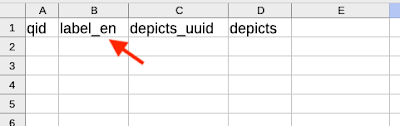

For the second spreadsheet, works_depicts.csv, the value of manage_descriptions is false, so only labels are generated. Since the label_en column is only for local use to make the identity of the rows clearer, I only bothered to generate it as English. The value of the labels in this spreadsheet will be ignored by the API upload script.

Adding data to the CSV files

Since we are still testing, we won't create new items yet in the real Wikidata. Instead, we will add statements to two of the sandbox Wikidata items.

In the qid column of the artworks.csv file, add Q13406268 and Q15397819 to the first two rows after the header row. For purposes of keeping the row identities clear, I added Wikidata Sandbox 2 and Wikidata Sandbox 3 as label_en values for those rows, although since we will be using the default to suppress updating labels, these values will have no effect. I also chose to use Q3305213 (painting) and Q860861 (sculpture) as values of instance_of since the the CSV file is supposed to be about artworks.

If you want to see what other values I used in my test, you can look at or download

this gist. You can use whatever values would amuse you as long as the types of the values match the types that are appropriate for properties specified in the configuration file. Leave all of the ID columns blank (those ending in

_uuid,

_hash, or

_nodeId), since they will be filled in by the API upload script. For the dates, you can either use the abbreviated conventions discussed in the last post (in which case you MUST leave the

_prec column empty). If you want to use dates that don't conform to those patterns (precisions less than year, BCI dates, or dates between 1 and 999 CE), you will need to use the long form values and provide an appropriate

_prec value. See the

VanderBot landing page for details.

If you looked at the configuration JSON carefully, you may have noticed that there were two new value types that we didn't see in the last post. The title property (P1476) has the type monolingualtext. Monolingual text values are required to have a language tag in addition to a provided string. Unfortunately, because of limitations of the W3C CSV2RDF Recommendation, the language tag has to be hard-coded in the metadata description file rather than being specified in the CSV. That's why the language is specified in the configuration JSON as the value of language for that property rather than as a column in the CSV table.

The other new value type is quantity. Like dates, quantities have value nodes that require two columns in the CSV table to be fully described. The _val column contains a decimal number and the _unit column should contain the Q ID for an item that is an appropriate measurement unit for the number (e.g. Q11573 for meter).

The second spreadsheet, works_depicts.csv, describes only one kind of statement, depicts (P180). It is intended to have multiple rows with the same qid, since a work can depict more than one thing. Since I described the Sandbox 2 item as a painting with title "Mickey Mouse house", I decided to say that it depicts Mickey and Minnie Mouse. You can set the depicts values to any item.

Writing the data

Before writing the data in the CSVs, open the pages for

Sandbox 2 and

Sandbox 3 so that you can see how they change when you write. Make sure that the two CSV files, the

csv-metadata.json file you generated from

config.json, and a copy of

vanderbot.py are together in a directory that can easily be accessed from your home directory. Make sure that you removed the "h" from the beginning of the CSV filenames as well.

Open your console application (Terminal on Mac, Command Prompt in Windows), navigate to the directory where the files are, and run VanderBot. Unless you changed default file names and locations, you can just enter

python vanderbot.py

(or use python3 if your installation requires that). If you want to save the API response in a log file, specify its name using the --log (or -L) option, like this:

python vanderbot.py -L log.txt

When you run the script, you should see something like this (with logging to file):

There are two episodes of writing to the API, one for each of the CSVs. If you refresh the web pages for the two items, you should see the changes that you made.

Click on the View history link at the top of the Wikidata Sandbox 2 page. You will see the revision history for the page.

Notice that on Sandbox 2, there were three edits listed. Each line in a spreadsheet resulted in one write to the API. The first larger one (4997 bytes) was an update consisting of all of the statements made in the first line of artworks.csv . The two later and smaller ones were from the two single-statement depicts writes in the works_depicts.csv table.

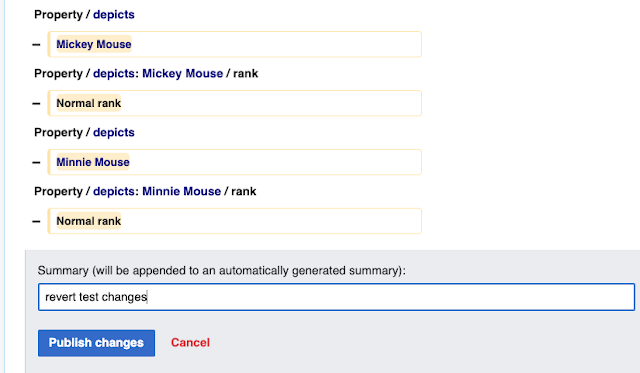

It is not a requirement to get rid of all of your edits to the sandbox, but to avoid causing the sandbox items to be hopelessly cluttered, you should probably delete your edits. If you made only a single change to the page, you can just click the undo link after the edit. If you made several edits and your edits were the last ones, you can revert back to the last version before your changes by clicking on the restore link after the last edit that was made prior to yours.

The restore dialog will show you all of the changes you made so that you can review them before committing to the restore. Give a summary, and click Publish changes.

Changing labels

VanderBot handles labels and descriptions differently from statements and references.

Adding statements or references is controlled by the presence or absence of an identifier corresponding to the column(s) representing the statement or reference in the spreadsheet. The statements or references are only written if their corresponding identifier cell is empty. If you examine the CSVs after their data have been written to the API, you will see that identifiers have been added for all of the columns that contain statement values. That means that if you run the script again, nothing will happen because VanderBot will ignore the values -- they all have assigned identifiers.

The behavior of labels and descriptions is different. When a new item is created, any labels or descriptions that are present will be added to the item. However, VanderBot will NOT make any edits to labels or descriptions of existing items unless the the --update (or -U) option is set to allow when the script is run. If updating is allowed, the existing labels and descriptions will be changed to whatever is present in the spreadsheet for that item. (The exception to this is when a label or description cell is empty. Empty cells will not result in deleting the label or description.)

From the screenshot above, you can see that at the start of this experiment, Sandbox 2 had no descriptions in either Spanish or English. Also, the Spanish label isn't actually in Spanish. I'm going to use VanderBot to change that.

To make the changes, I started with the

artworks.csv spreadsheet after my last edits. I deleted the line for Sandbox 3 since I didn't want to mess with it. I first made sure that my English label was exactly the same as the existing label so that it won't be changed. Then I added a Spanish label, and English and Spanish descriptions. I left the rest of the row the way it was since none of those statements would be written since they all had IDs.

The following command will write the labels and log to a file:

python vanderbot.py -L log.txt --update allow

After the script finishes, checking the log file shows the changes made.

Since the English label was identical, there were no changes to it. The log also shows that there were no changes in the other CSV.

Checking the web page shows

Since I'm done with the test, I'm going to delete the descriptions, but the Spanish label isn't any worse than what was there before, so I'll leave it. Checking the history, I can see that all of the labels and descriptions were changed in a single API write, so I'll have to delete them manually if I want to leave the Spanish label -- I can't undo the description changes without also undoing the new Spanish label.

The take-home message from this section is that you need to make sure that the existing labels and descriptions in the CSV match what is in Wikidata when label/description updates are allowed (unless you actually want to change them). This is particularly an issue if your data table is stale because you are coming back to work on it at a much later time after you initially wrote the data. If in the intervening time other users have improved the quality of the data by changing labels and descriptions, you would essentially be reverting their changes back to a worse state. That would be really irritating to someone who put in work to make the improvements. I will talk about strategies to avoid this problem in a later post.

Creating new items

At this point, you are hopefully comfortable with VanderBot enough to create or edit real items in the real Wikidata. For now, let's stick with creating new items since editing existing items has the issue of avoiding creating duplicate statements. We will address problem that in a future post.

There are several issues that you should consider before creating new items for testing. One is that you really should only create items that meet some minimal standard of notability. The actual

notability requirements for Wikidata are so minimal that you could theoretically create items about almost anything. But as a practical matter, we really shouldn't just create junk items that don't have some relatively useful purpose. One type of item that seems to be relatively "safe" is university faculty, since they generally have the potential to be authors of academic works that could potentially be references for Wikipedia articles. When I'm testing VanderBot, I often add faculty from my alma mater, Bluffton University, since none of them were in Wikidata until I started adding them.

The second issue is that you should create items that have enough information that the item can actually be unambiguously identified. There are several really irritating categories of items that have been added to Wikidata without sufficient information. There are thousands of "Ming Dynasty person" and "Peerage person" items that have little but a name attached to them. They are pointless and just make it harder to find other useful items with similar labels. So, for example, if you add faculty to Wikidata, at a minimum you should include their university affiliation and field of work.

The third issue is that you should make sure that you are not creating a duplicate item. In a future post I will talk about strategies for computer-assisted disambiguation. But for now, just typing the label in the search box in the Wikidata is the easiest way to avoid duplication. Try typing it with and without middle initials and also with and without periods after the initials to make sure you tried every permutation.

Configuring the properties

If you want to try my strategy of practicing by creating faculty records, you can start with this

template configuration file. It contains the obligatory

instance of (P31) that should be provided for every item, and

sex or gender (P21), which despite its issues is probably the most widespread property assigned to humans. I did not provide reference fields for those two properties since they are commonly given without references. The other two properties are probably the minimal properties that should be supplied for faculty:

employer (P108) and

field of work (P101). One reason why I chose these two properties is because they can both be determined easily from a single source, the

Bluffton University faculty web listing. The statements for these two properties should definitely have references.

I've done some querying to try to discover what the most commonly used properties are for references. A key reference property is retrieved (P813). All references should probably have this property. The other property is usually an indication of the source of the reference. Commonly used source properties are: reference URL (P854, used for web pages), stated in (P248, used when the source is a described item in Wikidata with a Q ID value), and Wikimedia import URL (P4656, used when the data have been retrieved from another Wikimedia project with a URL value). Unless you are working specifically on a project to move data from another project like Wikipedia to Wikidata, the first two are the ones you are most likely to use. Since all of my data are coming from a web page, I'm using P854 and P813 as the reference properties for both of the statement types that have references.

Use the convert_json_to_metadata_schema.py Python script and the config.json file you downloaded to generate the metadata description file csv-metadata.json and the faculty.csv CSV file (with header row only).

Adding the data

I chose two of the faculty from the web page list and pasted their names into the Wikidata search box to make sure they weren't already existing items. I then added their names to the

label_en column of

faculty.csv and described them in the

description_en column. See

this gist for the examples.

Q5 is the value for

instance_of for all humans.

Sex or gender options are given on the

P21 property page. All of the faculty work at Bluffton University (

Q886141). The trickiest value was their

field of work, which I had to determine using the Wikidata search box.

I was then able to copy and paste the URL for the faculty web listing, https://www.bluffton.edu/catalog/officers/faculty.aspx, into the reference URL columns and today's date, 2021-03-07, into all of the _retrieved_val columns. I then saved the file.

Writing the data to the API

Note: what would happen if you tried to use my example CSV file to write to Wikidata without changing its contents? Before the VanderBot script tries to write a new record to the real Wikidata, it checks the Wikidata Query Service to see if there are already any items with exactly the same labels and descriptions in any language in that row. If it finds a match, it logs an error and goes on to the next row. So since those items were already created by me, VanderBot will do nothing as long as no one has changed either the label or description for the two example items. If a label or description for either of them has been changed since I created the items, then the API will create duplicate items that will need to be merged later. So don't try running the script with my unmodified example files unless you first check that the labels and descriptions are still exactly the same on the items' Wikidata item pages.

I ran the vanderbot.py script with logging to a text file.

When writing the statements, the two rows were identified as new records. When the rows were later checked for any new unwritten references, the Q IDs were already known since they had been reported in the API response.

To see how the

faculty.csv file looked after its data were written to the API, see

this gist.

I could check for the new item pages in Wikidata by either searching for the faculty names or by directly using the two new Q IDs.

The new page contains all of the data from the CSV table in the appropriate place!

Although for only two items this work flow probably took longer than just creating the records by hand, it doesn't take many more items to make this process much faster, particularly if references are added (and they should be!). Adding references requires many button clicks on the graphical web interface, but because the same reference can be added to many rows of the spreadsheet with a single copy and paste, it is very efficient to add references using the VanderBot script.

What's next?

In the

next post, I'll talk about how you can determine what properties are most commonly used for various types of items. This is important information when you are planning your own projects that involve adding a lot of data to Wikidata.

No comments:

Post a Comment