Warning: this blog post involves extreme hand-holding. If that irritates you and you want to try to figure out how to use VanderBot on your own without hand-holding, you can go straight to the VanderBot landing page and look at the very abbreviated instructions there. However, make sure that you understand your responsibilities as a Wikidata user. If they are unclear to you, read the "Responsibility and good citizenship" section below.

On the other hand, if you love extreme hand-holding, there is a series of videos that will essentially walk you through the steps in this post.

It has been almost a year since I last wrote about my efforts to write to Wikidata using Python scripts. At that time, I was using a bespoke set of scripts for a very specific purpose: to create or upgrade items in Wikidata about researchers and scholars at Vanderbilt University. I was feeling pretty smug that I actually got the scripts to work, but at that point the scripts were pretty idiosyncratic. They were limited to a particular type of item (people), supported a restricted subset of property types, and used a particular spreadsheet mapping schema that wasn't easily modified.

Since that time, I have been working to adapt those scripts to be more broadly usable and have been testing them on several other projects: WikiProject Vanderbilt Fine Arts Gallery, WikiProject Art in the Christian Tradition (ACT), and several smaller ones. The scripts and my ability to explain how to use them have now evolved to the point where I feel like they could be used by others. The goal of this series is to make it possible for you to try them out in a do-it-yourself manner.

Background

This series of posts will not dwell on the conceptual and technical details except where necessary for you to make the scripts work. For those interested in more details, I refer you to previous things I've written:

It is not necessary to refer to any of this material in order to try out the system. But those interested in the technical details may find the links helpful.

Do I want to try this?

Before going any further, you should assess whether it is worth your time trying this out.

Requirements:

- You need to know how to use the command line to navigate around directories and run a program. See this page for Mac or this page for Windows if you don't know how to open a console and issue basic commands. In particular, read the section on "Running a program using the command line".

- You need to have Python installed on your computer so that you can run it from the command line. See this page for installation instructions. You do NOT need to know how to program in Python. I believe that the only module used in the script that is not part of the standard library is requests, so you may need to install that if you haven't already.

- You need to have an application to open, edit, and save CSV files. The recommended application is LibreOffice Calc. Other alternatives are OpenOffice Calc and Excel, but there are situations where you can run into problems with either of them. For information on CSV spreadsheets and how to save them in Excel, see this video. For a deeper dive and description of the problems with Excel and OpenOffice Calc, see the first video in this lesson and the screenshots after the second video.

- You must have a Wikimedia user account. The same user account is used across Wikimedia platforms, including Wikipedia, Wikidata, and Commons, so if you have an account at any of those places you can use it here.

- You need to be familiar with the Wikidata graphical editing interface. I assume that every reader has already done enough editing to understand the important features of the Wikibase model (items, properties, statements, qualifiers, and references) and how they are related to each other. They will not be explained in this post, so if you don't already have experience exploring these features using the graphical interface, you are probably not adequately equipped to continue with this exercise.

Other alternatives you should consider

There are a number of good alternatives to using the VanderBot scripts to write to Wikidata. They are:

- Use the graphical interface at https://www.wikidata.org/ to edit items manually. Advantage: very easy to use and robust. Disadvantage: slow and labor intensive.

- Use QuickStatements (https://www.wikidata.org/wiki/Help:QuickStatements). Advantage: very easy to use and robust, particularly when used as an integrated part of other tools like Scholia "missing". Disadvantage: there is a learning curve for constructing the input files from scratch. Users who aren't familiar with how CSV files work may find it confusing.

- Use the Wikidata plugin with OpenRefine (https://www.wikidata.org/wiki/Wikidata:Tools/OpenRefine). Advantage: powerful and full-featured; I believe that some scripting is possible to the extent that scripting is generally possible in OpenRefine using GREL. Disadvantage: requires skill with using OpenRefine plus an additional learning curve for figuring out how to make the Wikidata plugin work; I am not sure whether it is possible to integrate OpenRefine with command line-based workflows involving other applications.

- Use PyWikiBot (https://pypi.org/project/pywikibot/) or Wikidataintegrator (https://github.com/SuLab/WikidataIntegrator). Many (most?) Wikidata bots are built using one of these two Python frameworks. Advantage: powerful, full featured, robust. Disadvantage: you need to be a relatively experienced Python coder who understands object oriented programming with Python to use these libraries. There are a number of bot-building tutorials for PyWikiBot online. However, when starting out, I found the proliferation of materials on the subject confusing and for both of these platforms, when I couldn't get things to work, the libraries were so complex I couldn't figure out what was going on. Professional developers would probably not have that problem, but as someone who is self-taught, I was confused.

Factors that might make using VanderBot right for you

If any of the following situations apply to you, VanderBot might be useful for you:

- Your data are already in spreadsheets or can be exported as spreadsheets and you would like to keep the data in spreadsheets for future reference, ingest, or editing using off-the-shelf applications like LibreOffice or Excel.

- You want to keep humans in the Wikidata data entry loop for quality assurance, but want to increase the speed at which edits can be made.

- Over time, you are interested in keeping versioned snapshots of the data that you have written in a format that is suitable for archival preservation (CSV).

- You are interested in comparing what is currently in Wikidata with what you put into Wikidata to discover beneficial information added by the community or to detect vandalism by bad actors.

- You want to develop a workflow based on command-line tools that can be scheduled and monitored by humans.

The last two of these features are not yet fully developed, but I'm trying to design the scripts I'm writing to make them possible in the future.

If one or more of these factors applies to you and the other existing tools don't seem better suited for your purposes, then let's get started.

Responsibility and good citizenship

This "lesson" involves using the VanderBot API uploading script to write data to the test Wikidata API (application programming interface). In order to do that, you will need to create a bot password, but not a separate bot account. So let's clarify exactly what that means.

User account and bot password

When you create a bot password, you should be logged in under your umbrella Wikimedia account. That account applies across the entire Wikimedia universe: Wikipedia, Wikidata, Commons, and other Wikimedia projects. The bot password you create allows you to automate your interactions by using any Wikimedia API, but the edits that you make will be logged to your user account. That means that you bear the same responsibility for the edits that you make using the bot password as you would if you made them using the graphical interface or QuickStatements. Edits that you make using the bot password will show up in the page history just as if you had made them manually. If you make a mess using the bot password, you are responsible for cleaning it up just as you would be if you made errors using any other editing method. The whole point of scripting is to allow you to do things faster and more easily, but the downside is that you can also make mistakes faster and more easily.

Because of the potential for disaster, we will start by using Wikidata's test instance: https://test.wikidata.org/. It behaves exactly like the "real" Wikidata, except that the items and properties there do not necessarily correspond to anything real. If you make a mess in test.wikidata.org, you do NOT have to clean it up -- that's the whole point of it. So it is a place we can experiment without risk and once we feel comfortable, we can easily move to using the "real" Wikidata.

Distinction between a User-Agent and user account

In the Wikimedia world, typically when one creates an autonomous bot (one that works without human intervention), a separate bot user account is created. That account is used with a particular application (script, program) that carries out the bot's defined task. However, VanderBot is not an autonomous bot and has no particular defined task. It is a general-purpose script that can be used by any account to make human-mediated edits. So we need to draw a distinction between the application (technical term: User-Agent) and the user account. There actually is a user account called VanderBot (https://www.wikidata.org/wiki/User:VanderBot). It is operated by me and it shows up as the user who made the edits when I use it with the API-writing script. But you can't use it because you don't have the account credentials -- edits that you make will be made under your own user account. On the other hand, regardless of the user account responsible for the edits, the VanderBot Python script will identify itself to the API as the software that is mediating the interaction between you and the API. Software that manages communications between a user and a server is called a User-Agent.

You can think of this situation as similar to the difference between your web browser and you. Your web browser is not responsible for the actions that you take with it. If you use Firefox to write the world's best Wikipedia article, the Mozilla Foundation that created Firefox doesn't get credit for that. If you use Chrome to buy drugs or organize an assassination, Google, which created Chrome, does not take responsibility for that. On the other hand, if your browser has a bug that causes it to repeatedly hit a website and create a denial of service problem for a web server, the website may use the User-Agent identification for the browser to either block the browser or to contact the browser's developer to ask them to fix the bug.

VanderBot, the User-Agent, has features that prevent it from doing "bad" things to the API, like making requests too fast or not backing off when the server says it's too busy. As the programmer, I'm responsible for those features. I am not responsible if you write bad statements, create duplicate items, or overwrite correct labels and descriptions with stupid ones. Those mistakes will be credited to your user account. On the other hand, if you significantly modify the VanderBot API-writing script (which you are allowed to do under its GNU General Public License v3.0), then you should change the value of its user_agent_header variable with your own URL and email address, particularly if you mess with its "good citizen" features and settings.
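To make the idea of a User-Agent string concrete, here is a minimal sketch of how such a string might be assembled and attached to a request. Only the variable name user_agent_header comes from the script as described above; the helper function, the string layout, and the placeholder tool name, URL, and email address are assumptions for illustration and may not match what VanderBot actually sends.

```python
def build_user_agent(tool_name: str, version: str, url: str, email: str) -> str:
    """Return a User-Agent string naming the tool and how to reach its maintainer."""
    # Assumed layout: name/version followed by contact details in parentheses.
    return f"{tool_name}/{version} ({url}; mailto:{email})"


# Placeholder values: substitute your own tool name, URL, and email address.
user_agent_header = build_user_agent(
    "MyModifiedBot", "0.1", "https://example.org/mybot", "me@example.org"
)

# The string would then travel with every request as an HTTP header.
request_headers = {"User-Agent": user_agent_header}
print(request_headers)
```

The point of including a URL and an email address is that server operators can contact whoever maintains the software if it misbehaves, independently of which user account made the edits.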

Do you need a bot user account and flag?

Wikidata has a bot policy, which you can read about here. However, that policy defines bots as "tools used to make edits without the necessity of human decision-making". By that definition, VanderBot is not technically a bot since its edits are under human supervision (it's not autonomous). That's good, because it means that you can use it without going through any bot approval process, just as if you had used QuickStatements or OpenRefine to make edits.

However, not having bot approval also places rate limitations on interactions with the API. User accounts without "bot flags" (granted after successfully completing the approval process) are limited to 50 writes per minute. Writing at a faster speed without a bot flag will cause the API to block your IP address. This is the primary limitation on the speed of writing data with VanderBot and a delay (api_sleep) is hard-coded in the script.
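The arithmetic behind the delay is simple, and a rough sketch may help. The 50 writes per minute figure is the one quoted above; the function names are hypothetical, and the value computed here is only the theoretical minimum, not necessarily the value of api_sleep that is hard-coded in the script.

```python
import time

WRITES_PER_MINUTE = 50  # limit quoted above for accounts without a bot flag


def minimum_delay(writes_per_minute: int) -> float:
    """Return the shortest pause, in seconds, that keeps writes under the limit."""
    return 60 / writes_per_minute


def throttled(items, delay_seconds, sleep=time.sleep):
    """Yield items one at a time, pausing before every item after the first."""
    for index, item in enumerate(items):
        if index > 0:
            sleep(delay_seconds)
        yield item


api_sleep = minimum_delay(WRITES_PER_MINUTE)
print(api_sleep)  # 1.2
```

In practice a somewhat longer delay than the bare minimum leaves a safety margin, since the time the requests themselves take is not perfectly predictable.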

Note that bot approval and bot flags are granted to accounts, not User-Agents. If you use the VanderBot API-writing script along with other scripts as part of a defined automated process, you should set up a separate bot user account. You could then get a bot flag for that particular account and purpose, and remove the speed limitation from the script. I don't know if VanderBot is actually ready for that kind of use at this point. So you're on your own there.

The short answer to the overall question of whether you need a separate bot account is usually "no".

Generating a bot password

If you understand your responsibilities and have decided that experimenting with VanderBot is worth your time, let's get started on the DIY part. Because the bot password you create can be used across Wikimedia sites, I will illustrate the password creation process at https://test.wikidata.org/ since that is where we will first use it.

The test Wikidata instance looks similar to the regular one, except that the logo in the upper left is in monochrome rather than color. The functionality is identical. Click on the Special pages link in the left pane.

On the Special pages page, click on the Bot passwords link in the Users and rights section.

On the Bot passwords page, enter a name for the bot password. It is conventional to include "bot" or "Bot" somewhere in the name of a bot. However, since this password is actually going to be associated with your own user account and not a special bot account, including "bot" in the name is not that important. In the past when bot passwords were only associated with particular Wikimedia sites, it was more important to have mnemonic names to keep bots for different sites straight. However, since you can use the same bot across sites, this is no longer important.

There are actually two reasons why you might want to use multiple, differently-named bot passwords. One is that different passwords can have different scope restrictions (see next step). So one password might only be able to perform certain actions, while another might be less restricted. The other reason is that if a particular password is being used "in production", you might want to have another one for testing. In the event you accidentally expose the credentials for the testing bot password, you could revoke those credentials without affecting the production bot password. However, for most purposes it would probably make sense to just have the "bot name" be the same as your username.

After entering the name, click the Create button.

On the next page, select the rights that you want to grant to this bot password. I think the important ones are Edit existing pages, Create, edit, and move pages, and Delete pages, revisions, and log entries. However, just in case, I also selected High-volume editing, and View deleted files and pages as well. Leave the rest of the options at their defaults and click the Create button.

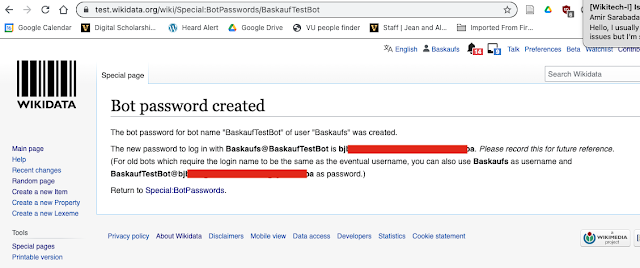

The resulting page will give you the username and passwords that you will need to write to the API. There are two variants: one where the bot name is appended to your username by @, and another where the username is used alone and the bot name is prepended to the password by @. We will use the first variant (username@botname).

You need to create a plain text file that contains the username and password. To do this, you should use a text or code editor and NOT a word processor like Microsoft Word. Your computer should have a built-in text editor (TextEdit for Mac, Notepad for Windows). If you don't know what text and code editors are, see the first three videos on this page. If you are using a Mac, the second video explains how to ensure that TextEdit saves your file as plain text rather than as rich text (which will cause an error in our situation) and to ensure that files are opened and closed using UTF-8 character encoding.

Open a new document in the text editor. Create three lines of text similar to this:

endpointUrl=https://test.wikidata.org
username=User@bot
password=465jli90dslhgoiuhsaoi9s0sj5ki3lo

Be careful, since mistyping any character will cause VanderBot to not work. It's best to copy and paste rather than to try to type the credentials. (These are fake credentials, so you can't actually use them -- use your own username and password.) Do not leave a space between the equals sign (=) and the other characters. The first line specifies that we are going to use the test.wikidata.org API, so you can copy it exactly as written above. The username is the login name that includes the @ symbol (it has the form username@botname, for example Baskaufs@BaskaufTestBot). The password is the password version that does not have the @ symbol in it. Double check that when you copied the username and password, you did not leave any characters off. Also, put the cursor at the end of each line and make sure that there are no trailing spaces after the text on the line. It does not matter whether the last line is followed with a newline (hard return) or not.
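If you want to double check the file contents before using them, something like the following sketch could catch the most common mistakes (stray spaces and missing lines). The function name and the error messages are hypothetical, and this is not how VanderBot itself reads the file; it only checks the rules described above against the fake credentials.

```python
def parse_credentials(text: str) -> dict:
    """Parse key=value lines, rejecting stray spaces and missing keys."""
    credentials = {}
    for line_number, line in enumerate(text.splitlines(), start=1):
        if line == "":
            continue  # tolerate an empty line
        if line != line.strip():
            raise ValueError(f"line {line_number}: leading or trailing whitespace")
        key, separator, value = line.partition("=")
        if separator == "" or key != key.strip() or value != value.strip():
            raise ValueError(f"line {line_number}: expected key=value with no spaces around '='")
        credentials[key] = value
    missing = {"endpointUrl", "username", "password"} - credentials.keys()
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    return credentials


# The fake credentials shown above, with a final newline.
sample = (
    "endpointUrl=https://test.wikidata.org\n"
    "username=User@bot\n"
    "password=465jli90dslhgoiuhsaoi9s0sj5ki3lo\n"
)
print(parse_credentials(sample)["username"])  # User@bot
```

To check a real file, you would read its text with UTF-8 encoding and pass that text to the function instead of the sample string.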

When you have entered the text, save the file as wikibase_credentials.txt in your home directory. In the next post, we will see how to use a different name or to change the location to somewhere else. Make sure that there is an underscore between "wikibase" and "credentials", not a dash or a space. If you do not know what your home directory is, or where it is located on your computer, see the Special directories in Windows section of this page or the Special directories on Mac section of this page. In Finder on a Mac, you can select Home from the Go menu to get there. In Windows File Explorer, start at the c: drive, then navigate to the Users folder. Your user folder will be within the Users folder and have the same name as your username on the computer.
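Since Python is already installed, another way to find your home directory is to ask Python for it. This short sketch uses only the standard library; it prints the home directory, the full path where the credentials file is expected, and whether a file with that name is currently there.

```python
from pathlib import Path

home_directory = Path.home()
credentials_path = home_directory / "wikibase_credentials.txt"

print(f"Home directory: {home_directory}")
print(f"Expected credentials file: {credentials_path}")
print(f"File found: {credentials_path.is_file()}")
```

If the last line reports False after you have saved the file, check for a misspelled name or a hidden extra extension such as .txt.txt or .rtf.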

Preparing the metadata description file and CSV headers

The VanderBot API upload script uses CSV files as its data source. Each row in the table represents data about an item. The columns of the table represent various aspects of metadata about the items, such as statements, qualifiers, and references. In order to transfer the data from the CSV to the Wikidata API, the columns of the CSV spreadsheet need to be mapped to the Wikibase data model (the model used by Wikidata). Since the Wikibase model can be represented as RDF, the W3C Generating RDF from Tabular Data on the Web Recommendation can be used to systematically map the CSV columns to the Wikibase model. The VanderBot script uses that mapping to determine how to construct the JSON required to transfer the CSV data to the Wikidata API.

Initially, I constructed the mapping file (known as the CSV's "metadata description file") by hand while referring to the W3C Recommendation and its examples. However, it was extremely difficult to build the mapping file by hand without making errors that were hard to detect. Fortunately, my collaborator, Jessie Baskauf, created a web tool that allows a user to construct the mapping file using drop-downs that are organized in a structure that reflects that of the Wikidata graphical user interface. We will use that tool to create both the mapping file and the CSV header whose field names correspond to those used in the mapping file.

The tool itself can be accessed online from this link. The Javascript that runs the tool runs entirely within the web browser, so it can be used offline by downloading the HTML, CSS, and Javascript files from GitHub into the same directory, then opening the HTML file in a browser.

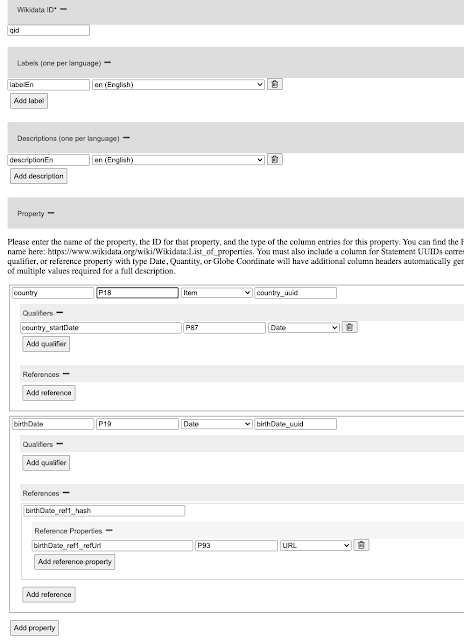

On the tool page, leave the Wikidata ID field at its default, qid. Use the Add label and Add description buttons to enter the names of each of those fields. I have been using the convention labelEn, labelDe, descriptionEs, etc. where I use lower camelCase and append the language code. However, you can use any name that makes sense to you. Select the appropriate language codes from the dropdown.

One thing to note is that there is no correspondence between property and item identifiers in test.wikidata.org (the test Wikidata implementation) and www.wikidata.org (the real Wikidata). So before we can add properties and item values of those properties, we need to look in the test Wikidata site to find properties and items that we want to play with. From the https://test.wikidata.org/ landing page, select Special pages from the left pane as you did before.

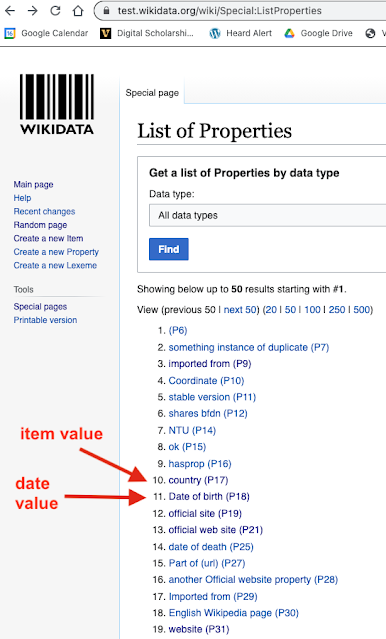

Near the bottom of the Special pages page in the Wikibase section, click on the List of Properties link. In the real Wikidata instance, creation of properties is controlled by a community process. In the test Wikidata instance anyone can create, change, or delete properties. So although the properties used in this example may still be the same when you do this exercise, they also may have changed. Since we are practicing, you can substitute any other similar property for the ones shown in the examples. We want to choose a couple of properties that have different kinds of values in order to see how that affects the mapping file and CSV headers. So we are looking for a property that has an Item value and one that has a Point in time value.

I picked P17 (country) and P18 (Date of birth) to use in the practice example. Clicking on the links shows that P17 has an Item value and P18 has a Point in time value.

There are not necessarily items in the test Wikidata instance that correspond to those in the real Wikidata, so I searched for some countries to use as values of P17 in the test. I found Q346 (France) and Q53079 (Mexico). You can find your own, or create new items to use if you want.

I also wanted to select a property to use for a qualifier and another one to use for a reference. In the real Wikidata instance, many properties have constraints that indicate whether they are suitable to be used as properties in statements, qualifiers, or references. In the test Wikidata instance, most properties don't have any constraints. So I just picked a couple that seemed to make sense. I chose P87 (start date, having a Point in time value) as a qualifier property for P17 (country). (What does that mean? I don't know and it doesn't matter -- this is just a test.) I chose P93 (reference URL, having a URL value) as a reference property for P18 (Date of birth). Here is a summary of my chosen entities:

P17 country (Item value, used as a statement property)

P87 start date (Point in time value, used as a qualifier property for P17)

Q346 France (Item, used as a value for P17)

Q53079 Mexico (Item, used as a value for P17)

P18 Date of birth (Point in time value, used as a statement property)

P93 reference URL (URL value, used as a reference property)

Using the buttons and drop-downs, I selected the properties listed above on the web tool.

The field names that you choose for the properties used in statements can be whatever you want. It is best to keep them short and do NOT use spaces. If you use multi-word names, I recommend lower camelCase, since dashes may cause problems later on and underscores are used by the tool to indicate the hierarchy of qualifier and reference properties. The fields ending in _uuid and _hash are for statement and reference identifier fields and you should leave them at their defaults. When you create statement properties, by default the tool prefixes the names of qualifier and reference properties with their parent statement property names followed by an underscore. You can change these to shorten them if you want, but it's probably best to leave them at their defaults since when CSVs have many columns it becomes difficult to remember the structure without the prefixes.

A statement can have multiple qualifier properties. It can also have multiple references, and each reference can have multiple properties. For simplicity's sake, I recommend sticking with a single reference having one or more properties.

Using the drop-downs, be sure to select a value type that is appropriate for the property. There is no quality control here at the point of the tool, but an error will be generated when writing to the API if the selected value type does not match with the value type specified for the property on the property page of the test Wikidata instance.

After you have entered all of the property information, scroll to the bottom and enter a filename in the box. Click on the Create CSV button. At this time, the script isn't sophisticated enough to actually generate the CSV file. (That is a possible future feature.) Rather it generates the header line for the CSV as raw text. Click the Copy to clipboard button, then open a new file using the same text editor that you used to generate the credentials file.

Paste the copied text into the new file window.

Select Save or Save As... from an appropriate menu on your editor. The exact appearance of the dialog window will depend on your editor. The screenshot above is for TextEdit on a Mac. Be sure that you use exactly the same file name as you entered in the filename box in the web tool, with a .csv file extension. If your editor gives you a choice of text encoding, be sure to choose UTF-8. The directory into which you save the CSV file will be the one from which you will be running the upload script using the command line. So it is best to save it in some folder that is a subfolder of your home folder. Generally, Downloads, Documents, and Desktop are directly below the home folder, so if you use a subfolder of one of those folders, you should be able to navigate to that folder easily using the command line.

Now click the Create JSON button.

The metadata description JSON for the CSV file columns that you set up will be generated on the screen below the button. Click the Copy to clipboard button. Open a new file in the text editor that you used before and paste the copied text into it. Save the file using the name csv-metadata.json in the same directory where you saved the CSV file.

I like to paste the JSON into my favorite code editor (VS Code) because it will validate the JSON and display it using syntax highlighting, but that isn't really any better than using a vanilla text editor.
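If you would rather check the saved file with Python than with a code editor, a sketch along these lines would confirm that the JSON parses and show which columns it declares. It assumes the general layout used in the W3C tabular metadata specifications (a "tables" list whose entries have a "url" and a "tableSchema" with "columns"); the trimmed example text and the file name practice.csv are hypothetical stand-ins, and the file generated by the web tool contains more detail than is shown here.

```python
import json


def list_columns(metadata: dict) -> dict:
    """Map each CSV file name to the column names declared for it."""
    # Assumption: either a top-level "tables" list, or a single table description.
    tables = metadata.get("tables", [metadata])
    return {
        table.get("url", "(no url)"): [
            column.get("titles", column.get("name"))
            for column in table.get("tableSchema", {}).get("columns", [])
        ]
        for table in tables
    }


# Hypothetical, heavily trimmed stand-in for the generated file.
example_text = """
{
  "@context": "http://www.w3.org/ns/csvw",
  "tables": [
    {
      "url": "practice.csv",
      "tableSchema": {
        "columns": [
          {"titles": "qid", "name": "qid", "datatype": "string"},
          {"titles": "labelEn", "name": "labelEn", "datatype": "string"}
        ]
      }
    }
  ]
}
"""
print(list_columns(json.loads(example_text)))
```

To inspect your own file, read csv-metadata.json as UTF-8 text and pass the result of json.loads to list_columns. A json.JSONDecodeError at that point means the pasted text was damaged somewhere along the way.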

Preparing data to create new items

Now we will open the CSV file to add the data that we want to write to the test Wikidata instance. For this practice exercise, you can use Excel to edit the CSV if that is all you have, but if you are serious about using this system in the future, I highly recommend downloading and installing LibreOffice and using its Calc application to open and edit CSVs. I explain the reasons for this in the Requirements list near the top of this post. You can probably just double-click on the file in your file handling system (Finder on Mac or File Explorer in Windows), but if that doesn't work, open your spreadsheet application and open the file via Open in the File menu.

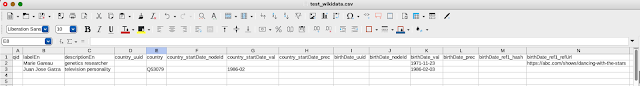

When you open the CSV file, it should appear as a spreadsheet with the column names in the order that you created them with the web tool with empty rows below. You can now add data in the rows below the header.

The screenshot of my example above is too small to easily see, but you can get a better look at it by going to this GitHub gist. You must use different labels and descriptions from the ones I used because if you use the same ones, the API will not allow them to be written (more details about this in the next blog post). As values for the country column, you can use the Q ID of any item. (I used Q53079 for Mexico.) Notice that the birthDate column does not have a prefix, indicating that it is a statement property and not a child property of something else. The startDate column was prefixed with country_ by the web tool. That prefix and its position following the country column are clues that this column is a qualifier for the country column. The refUrl column was prefixed by the web tool with birthDate_ and ref1_, indicating that it is a property of the first reference for the birthDate statement. Because the value type of the birthDate_ref1_refUrl column is URL, it must be a valid IRI starting with either http:// or https://.

The two date fields are a more complicated type. Dates, globe coordinates, and quantities are complex data types that cannot be represented by single fields. Dates require one column for the date string and another column to indicate the precision of the date (e.g. to year, to month, to century, etc.). There is a somewhat complicated system for representing dates in the Wikibase model (see this page for details). Fortunately, the VanderBot script will automatically convert dates that are formatted according to its conventions into the format required by the API. Those conventions are:

character pattern   example      precision
-----------------   ----------   ---------
YYYY                1885         to year
YYYY-MM             2020-03      to month
YYYY-MM-DD          2001-09-11   to day

In the example spreadsheet, the country_startDate_val date value for the second item has precision to month, while the birthDate_val date values have precision to day.

The dates should be placed in the corresponding column with name ending in _val. The script knows that it should make the conversion when the corresponding column with name ending in _prec is empty. If the year has fewer than four digits, is BCE (a negative number), or has a precision lower than year (century, millennium, etc.), then a date string and precision integer properly formatted according to the Wikibase model must be provided explicitly. The script only provides minimal format checking (for the correct number of characters), so dates that are otherwise incorrectly formatted will result in an error that prevents the record from being written to the API.
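The three conventions can be expressed as a small lookup. The sketch below is not taken from the VanderBot script; it is a stricter illustration (using digit patterns rather than only a character count) of how an abbreviated date string maps to the precision integers used by the Wikibase model, where 9 means year, 10 means month, and 11 means day. Anything that does not fit one of the three patterns returns None, standing in for the cases where you must supply the date string and precision explicitly.

```python
import re

# Precision integers used by the Wikibase model: 9 = year, 10 = month, 11 = day.
PATTERNS = [
    (re.compile(r"\d{4}"), 9),
    (re.compile(r"\d{4}-\d{2}"), 10),
    (re.compile(r"\d{4}-\d{2}-\d{2}"), 11),
]


def infer_precision(date_string: str):
    """Return the precision for an abbreviated date, or None if it needs explicit handling."""
    for pattern, precision in PATTERNS:
        if pattern.fullmatch(date_string):
            return precision
    return None


for value in ["1885", "2020-03", "2001-09-11", "885", "-0044"]:
    print(value, infer_precision(value))
```

Note that this only checks the shape of the string. A value like 2020-13 would pass the pattern check even though it is not a real month, which is the kind of error that would still be rejected when writing to the API.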

You should also notice that the example spreadsheet has a number of empty columns. These columns will contain identifiers for the various entities described by the data columns. For example, the qid column will contain the identifier for the item. The country_uuid and birthDate_uuid columns will contain the identifiers for the country and birthDate statements. The birthDate_ref1_hash will contain the identifier for the first reference for birthDate, which contains a reference URL. In all of these cases, the Wikidata API will assign those identifiers when the various entities are created and they will be recorded in the CSV file immediately after the item has been created. The VanderBot script uses the presence or absence of these identifiers to know whether the particular identified entity exists and therefore whether it needs to be written to the API or not.
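The idea that empty identifier cells signal "not yet written" can be sketched as follows. This is a simplified illustration of the principle and not the logic actually used in the VanderBot script; the function name is hypothetical, the identifier values in the second row are made-up placeholders, and references and qualifiers are ignored to keep it short.

```python
def pending_writes(row: dict, statement_columns: list) -> list:
    """List what a row still needs, judged only by which identifier cells are empty."""
    actions = []
    if not row.get("qid"):
        actions.append("create item")
    for column in statement_columns:
        # Simple values live in the column itself; dates live in the _val column.
        has_value = bool(row.get(column) or row.get(f"{column}_val"))
        has_identifier = bool(row.get(f"{column}_uuid"))
        if has_value and not has_identifier:
            actions.append(f"write {column} statement")
    return actions


new_row = {"qid": "", "country_uuid": "", "country": "Q53079",
           "birthDate_uuid": "", "birthDate_val": ""}
# Placeholder identifiers, not real ones.
written_row = {"qid": "Q0000", "country_uuid": "PLACEHOLDER-UUID", "country": "Q53079",
               "birthDate_uuid": "", "birthDate_val": ""}

print(pending_writes(new_row, ["country", "birthDate"]))
print(pending_writes(written_row, ["country", "birthDate"]))
```

The first row has a country value but no identifiers, so it needs an item and a country statement. The second row already has its identifiers, and its empty birthDate_val cell means there is nothing more to write.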

The situation with the two date columns whose names end in _nodeId is complicated. For technical reasons that I don't want to get into in this post, the node ID values are not assigned by the API, but rather are generated by VanderBot at the point of processing the dates. This is true for all of the properties with node value types (dates, globe coordinates, and quantities). All you need to know is that you should leave the columns ending in _nodeId blank and that the sets of three date-related columns that have the same first part (country_startDate_nodeId, country_startDate_val, and country_startDate_prec; birthDate_nodeId, birthDate_val, and birthDate_prec) represent complex values that can't be represented by a single column.
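As a way of seeing the naming scheme at a glance, the following sketch splits column names on underscores and separates the bookkeeping suffixes (_uuid, _hash, _nodeId, _val, _prec) from the property hierarchy. It is only an illustration of the convention described in this post, using column names from the example; the function is hypothetical and it assumes you kept the tool's default prefixes and avoided underscores in your own field names.

```python
SUFFIXES = {"uuid", "hash", "nodeId", "val", "prec"}


def describe_column(name: str) -> dict:
    """Split a column name into its underscore-separated hierarchy and bookkeeping suffix."""
    parts = name.split("_")
    # Only treat the last part as a suffix when something precedes it.
    suffix = parts.pop() if len(parts) > 1 and parts[-1] in SUFFIXES else None
    return {"path": parts, "suffix": suffix}


for column in ["country", "country_uuid", "country_startDate_val",
               "birthDate_prec", "birthDate_ref1_hash", "birthDate_ref1_refUrl"]:
    print(column, describe_column(column))
```

Columns that share the same path, such as the three country_startDate columns, belong to one complex value.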

Note that I did not fill in every cell in the table that could contain values. I did that because in a later step we will practice adding values to the item statements and references after the items have already been created.

Be sure to close the CSV file before continuing to the next step. Failure to close the CSV will have different effects depending on the spreadsheet program you are using. I believe that both Excel and OpenOffice Calc place a lock on the file, so that when the VanderBot script tries to write the API responses to the CSV file, it generates an error and the script crashes. LibreOffice Calc will allow the changes to be written to the CSV file, but they will not show up unless the file is closed and re-opened. LibreOffice Calc will warn you if you try to save an open file that was changed by the script while it was open. In that case, close the file without saving and re-open it to see the changes.

Creating new items using the API

The last thing you need to have to actually write data to the API is the VanderBot Python script itself. Go to the code page on GitHub. Right-click on the Raw button in the upper right of the page. Select Save Link As..., navigate to the directory where you saved the csv-metadata.json file and the CSV file that you edited, and save the vanderbot.py script there.

If you have not previously installed the requests library, you may need to do that before you can run the script. If you have Anaconda installed on your computer, requests may already be installed. If you aren't sure, just try running the script as described below. If you get an error message saying that Python doesn't know about requests, then try entering:

pip install requests

If that doesn't work, try

pip3 install requests

If you use some non-standard package manager like brew or conda, install requests by whatever means you normally install packages.
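If you would rather check for the library before running the script, a small sketch like the following should work from the Python prompt or as a throwaway script. The helper name is_installed is made up for this example. It uses only the standard library, so it runs whether or not requests is present.

```python
import importlib.util


def is_installed(module_name):
    """Return True if Python can locate the named top-level module."""
    return importlib.util.find_spec(module_name) is not None


if is_installed("requests"):
    print("requests is available")
else:
    print("requests is missing; try: pip install requests")
```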

Open the appropriate console program for your operating system (probably Terminal for Mac or Command Prompt for Windows). Use the cd command to navigate to the directory where you saved the file, then list the files to make sure you are in the right place and the files are all there (ls for Mac or Linux, dir for Windows).

Depending on how you set up Python, the command to run the script will probably be either

python vanderbot.py

or

python3 vanderbot.py

If things work correctly, the console should show the progress of writing to the API.

The first part of the output shows how VanderBot is interpreting the columns of the CSV based on the information from the csv-metadata.json column-mapping file. Then there is an indication that dates have been converted to the form required by the Wikibase model. As the script writes each row to the API, it displays the response of the API. The contents of the response don't matter as long as the end of the response contains "success". After writing statements for each row, the script then checks whether there were any existing statements with added references. Since there were none, nothing was reported. Finally, there is a report of any errors that occurred that prevented particular rows from being written. Not every possible type of error is trapped and some will result in the script terminating before finishing all of the rows of the CSV. In that situation, the last response from the API may give clues about what went wrong. All information about identifiers received from the API prior to termination of the script should be saved in the CSV file, so once the error is fixed, you can just run the script again to try again to write the problematic line.
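If you want to check responses programmatically rather than by eye, a minimal sketch might look like this. It assumes the response has already been parsed from JSON into a Python dictionary, and it relies on the usual shape of Wikibase API replies: a success key set to 1 when the write worked, or an error object with code and info keys when it did not. The function names and the sample responses are invented for illustration.

```python
def write_succeeded(response):
    """True if a parsed Wikibase API response reports a successful write."""
    return "error" not in response and response.get("success") == 1


def describe_failure(response):
    """Pull the error code and message out of a failed response, if present."""
    error = response.get("error", {})
    return error.get("code", "unknown"), error.get("info", "no details given")


# Invented sample responses, trimmed to the parts that matter here.
good = {"entity": {"id": "Q214621"}, "success": 1}
bad = {"error": {"code": "modification-failed", "info": "Example message"}}

print(write_succeeded(good))
print(write_succeeded(bad), describe_failure(bad))
```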

If you re-open the CSV file, you should see results similar to this gist. All of the identifier columns in the table that are associated with value columns have now been filled in, indicating that those data now exist on Wikidata. Notice also that the dates have been converted into the more complicated format.

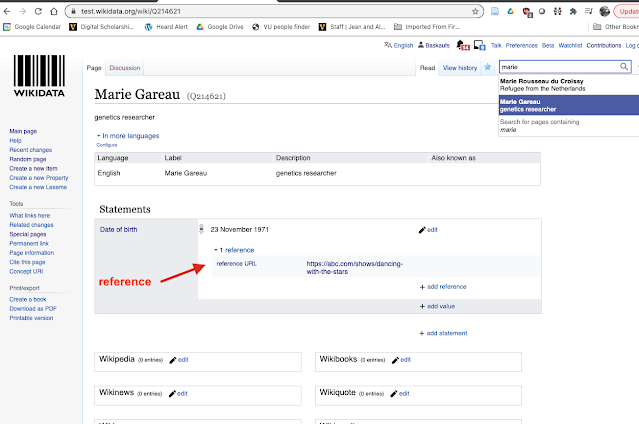

If you search the test.wikidata.org site, you should see the record for the new item that you created. Because the birthDate_ref1_refUrl column had a value, a reference was created for the Date of birth statement.

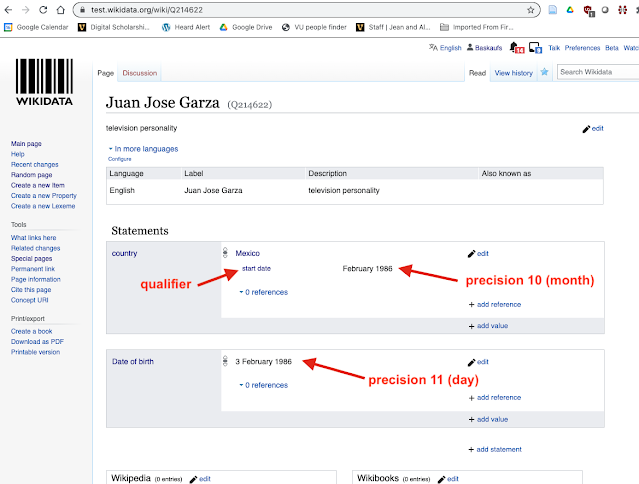

Because in the second row the country property column was followed by value columns for country_startDate that contained data, the country statement on the web page for the item displays a start date qualifier. The country_startDate_val column contained a value in the form 1986-02, so a precision of 10 (to month) was placed in the country_startDate_prec column of the table and therefore only the month is shown on the web page. In contrast, the birthDate_val column was given a value of 1982-02-03, so it was assigned a precision of 11 (to day) and the day is displayed on the web page for the date of birth statement.

Editing existing items using the API

We can add information to the two new items that we just created by filling in parts of the CSV that we left blank before. In this gist, I added a country value (Q346, France) for item Q214621 and I added a reference value in the birthDate_ref1_refUrl column for the birth date statement, which already existed but did not previously have any references. After making sure that I closed the CSV file in the spreadsheet program, I ran the VanderBot script again.

In the first section of the output, the script detected that it needed to add a statement to an existing item (there was already a value in the qid column, and there was a value in the country column without a corresponding identifier value in the country_uuid column). It found no statements to add in the second row, so it did nothing.

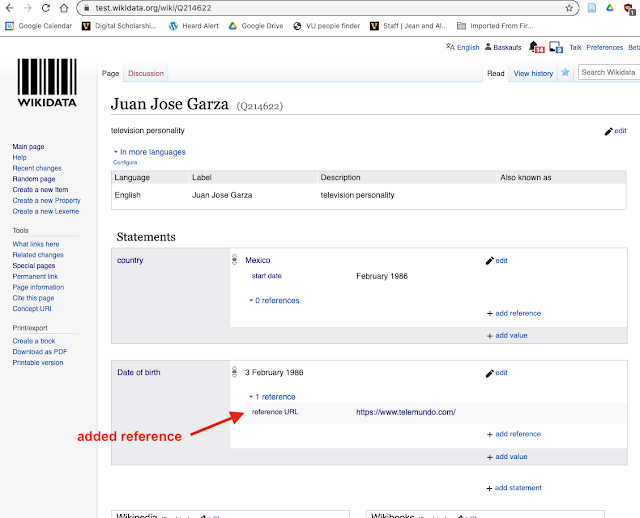

When it went through each row looking for new references for existing statements, it found one for the birth date reference URL column for the second record (there was an identifier in the birthDate_uuid column, but no identifier in the birthDate_ref1_hash column). It attempted to write the new data to the API, but an interesting thing happened. The server was too busy, and sent a message back to the script that it should wait a while and try again. In general, the script will keep trying with an increasing delay of up to 5 minutes, giving up after 10 tries. In this particular case, on the second retry the server was no longer too busy and the reference was successfully added.
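The retry behavior can be sketched roughly like this. The limits of 10 tries and a 5 minute maximum delay come from the description above, but the starting delay, the doubling schedule, and the function name post_with_retry are assumptions of this sketch and not taken from vanderbot.py. It also assumes that a lagged server replies with an error whose code is maxlag, which is how the MediaWiki API normally reports lag. The send argument stands in for whatever function actually posts to the API, so the example below can run without touching the network.

```python
import time

MAX_TRIES = 10
MAX_DELAY = 300  # seconds (5 minutes)


def post_with_retry(send, sleep=time.sleep, first_delay=5):
    """Call send() until the reply is not a maxlag error or tries run out."""
    delay = first_delay
    for attempt in range(1, MAX_TRIES + 1):
        response = send()
        if response.get("error", {}).get("code") != "maxlag":
            return response  # success, or an error that retrying won't fix
        if attempt < MAX_TRIES:
            sleep(delay)
            delay = min(delay * 2, MAX_DELAY)  # back off, capped at 5 minutes
    return None  # gave up after MAX_TRIES lagged replies


# Simulate a server that is lagged twice and then accepts the write.
replies = iter([
    {"error": {"code": "maxlag"}},
    {"error": {"code": "maxlag"}},
    {"success": 1},
])
waits = []
print(post_with_retry(lambda: next(replies), sleep=waits.append))
print(waits)  # the delays that would have been slept
```

Passing in the sleep function makes the sketch easy to test without actually waiting.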

When I reload the Juan Jose Garza page, I see that the date of birth statement now has a reference where there was none before.

Reloading Marie Gareau's page shows the new country statement. You may have noticed that I filled in the country value without giving any start date qualifier value for the statement in the country_startDate_val column. The Wikidata Query Service treats qualifiers and references differently in that it assigns IRI identifiers to references, but does not assign them to qualifiers. Because VanderBot is designed to retrieve specific metadata about items using the Query Service, it does not capture and store any identifier for qualifiers. Thus it is currently not possible to add a qualifier to a statement once the statement has been created. This behavior may be modified at some point in the future, but for now you should be aware of that limitation.

Who's responsible for what just happened?

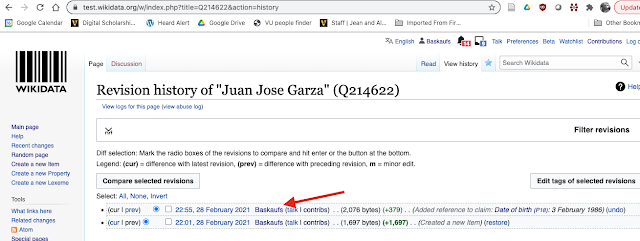

We can check the revision history of the Juan Jose Garza page to see how the edits we made were recorded.

Notice that since the edits were made by a script using a bot password associated with my user account (Baskaufs), the edits were credited to me just as if I had made them by hand using the graphical interface. One difference is that the original item was created using a single API interaction. So even though it involved creating a label, a description, and two statements, it was recorded as a single edit instead of four.

The benefit of editing as many parts of the item metadata as possible in a single call is speed, because interactions with the API are the rate-limiting factor when writing data. VanderBot only makes one API call per row, even if the row contains many more columns than in this simple example. So it can make the edits much faster all at once than it could if it did them all separately.
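To give a feel for how a label, a description, and a statement can travel in one request, here is a hedged sketch of the kind of JSON that the Wikibase wbeditentity action accepts in its data parameter. The helper build_item_data is invented for this example and is not how vanderbot.py is organized. The property ID P17 and the description text are placeholders (property IDs on test.wikidata.org differ from those on the real Wikidata), and the edit token that a real write requires is left out.

```python
import json


def build_item_data(label, description, country_pid, country_qid):
    """Assemble one payload holding a label, a description, and one statement."""
    claim = {
        "mainsnak": {
            "snaktype": "value",
            "property": country_pid,
            "datavalue": {
                "value": {
                    "entity-type": "item",
                    "numeric-id": int(country_qid[1:]),
                    "id": country_qid,
                },
                "type": "wikibase-entityid",
            },
        },
        "type": "statement",
        "rank": "normal",
    }
    return {
        "labels": {"en": {"language": "en", "value": label}},
        "descriptions": {"en": {"language": "en", "value": description}},
        "claims": [claim],
    }


# Placeholder values; P17 may not be the country property on the test instance.
data = build_item_data("Marie Gareau", "example description", "P17", "Q346")
params = {
    "action": "wbeditentity",
    "new": "item",
    "format": "json",
    "data": json.dumps(data),  # everything for the item goes in one parameter
}
print(params["data"])
```

Adding more statements just means appending more entries to the claims list, which is why the number of columns in a row does not change the number of API calls.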

Notice also that there is no record here that the VanderBot script was used. It identified itself to the API through its User-Agent HTTP header when it communicated with the server, and it was a "good citizen" by waiting to retry when the server reported that it was lagged. But there is no record of that interaction in the revision history.
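For the curious, this is roughly what identifying a script and asking the server about lag look like at the HTTP level. The sketch uses urllib from the standard library instead of requests so that it runs without any installation, and it only builds the request without sending it. The User-Agent string and the helper name build_request are made up for this example. The maxlag parameter is the standard MediaWiki way of asking the server to refuse the request when it is lagged by more than the given number of seconds.

```python
import urllib.parse
import urllib.request

API_URL = "https://test.wikidata.org/w/api.php"
# Hypothetical identification string; use your own tool name and contact info.
USER_AGENT = "ExampleBot/0.1 (mailto:you@example.org)"


def build_request(params):
    """Build (but do not send) a POST request that identifies the script."""
    body = urllib.parse.urlencode(params).encode("utf-8")
    return urllib.request.Request(
        API_URL, data=body, headers={"User-Agent": USER_AGENT}
    )


request = build_request(
    {"action": "query", "meta": "siteinfo", "format": "json", "maxlag": 5}
)
print(request.get_method())
print(request.get_header("User-agent"))  # urllib stores the name capitalized this way
print(request.data.decode("utf-8"))
```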

To see the final state of the CSV file after all of the uploads shown here, see this gist.

What should you do next?

While you have the spreadsheet and JSON metadata description file set up, you should do a lot more experimenting. There is really nothing that you can "break" on either test.wikidata.org or VanderBot. In particular, you should try doing the following things to see what happens. Some of them are "wrong" things that produce bad results or aren't allowed by the API, while others are harmless or fine. If the script doesn't crash, reload the page or search for the new item in the graphical interface to see what happened.

- Create another row in the spreadsheet where the label and description are the same as an existing item. What happens? (When writing to the "real" Wikidata, there is code in VanderBot that tries to prevent this, but it doesn't work with the test instance.)

- What happens if either the label or the description (but not both) is the same as an existing item? Can you create an item that is missing either a label or a description?

- What happens if you delete the uuid identifier for a statement property and run the script again?

- What happens if you delete the uuid identifier for a statement property, change the value, and run the script again?

- What happens if you delete a reference hash identifier and replace the reference value with a different one?

- What happens if you leave off part of a date (e.g. 1997-9-23 with no leading zero for the month)? You may need to change the cell format to "text" in order to be able to make this mistake in your spreadsheet program.

- What happens if you have the correct number of characters in a date, but the date is malformed (e.g. 199x-09-23)?

- What happens if you change a value, but do not delete the corresponding identifier associated with the value?

In the next blog post, we will switch to writing to the "real" Wikidata, where we would prefer not to make these kinds of mistakes.

Answers are below.

Answers:

- The API responds with an error message and the script ends prematurely.

- A new item will be created with the same label (or description). You can also create items lacking either a label or a description (but not both).

- A duplicate statement will be created. This is a bad practice.

- A second value will be added for the property. This is perfectly fine as long as the second value is correct information.

- The new reference gets added as a second reference for the same statement. This is perfectly fine.

- The script does nothing and reports that there was an incorrectly formatted date in that row.

- The script tries to write the value, but the API returns an error message saying that the date format is bad. The script then stops running.

- Nothing happens. The script doesn't look at the value if it already has an identifier associated with it.
