The Rod Page Challenge, one more time

I started this series of blog posts by talking about the Rod Page Challenge, stated succinctly in October 2011 as: "What new things have we learnt about biodiversity by converting biodiversity data into RDF?"[1] I then blathered on for a number of blog posts about what it means for a semantic client to "learn", unintended consequences caused by careless use of terms having entailments, and the sorts of machine "learning" that don't really result in anything useful (triple "bloating" caused by the failure to reuse terms and identifiers and the "discovery" of lots of triples that don't actually help you answer the kinds of questions that interest you). I promised to revisit the Rod Page Challenge, which I modified into this form: "What new things have humans learnt about biodiversity by converting biodiversity data into RDF? What interesting new inferences have semantic clients made using RDF?".

I should start by confessing that in the interest of haranguing, I failed to mention in my previous posts that Rod's challenge was met within a few days by Cam Web.[2] You can see Cam's approach on a wiki page in the TDWG RDF/OWL Task Group's Google Code site (at least until Google Code goes down - when it does, check GitHub for the RDF TG's new home). Cam set up some triplestores queriable via SPARQL (unfortunately those triplestores are currently down), loaded the datasets (http://dx.doi.org/10.5281/zenodo.20990) that Rod provided, and created two SPARQL queries that demonstrated some form of machine "learning" from the datasets. (Note on 2015-07-22: Rod has loaded the triples where they can be queried at http://dydra.com/rdmpage/tdwg-challenge/@query#reflections-table. Cam's first query [5] can be run there.)

The first query demonstrated machine "learning" of the first sort: increasing the number of triples relevant to a particular resource by pooling triples from several independent, but linked datasets. In his example, he found all of the species in the genus Bufo according to UniProt. He then used linked data from GenBank and Wikipedia (via DBpedia) to find the conservation status of the species and geocoordinates of occurrences. One could call this a sort of victory for the "Linked Data" approach.

The second query demonstrated machine "learning" of the second sort: materializing entailed triples that have not previously been stated explicitly by anyone. In his example, the rdfs inferencing capabilities of the 4sr reasoner associated with one of the triplestores was used to infer unstated triples based on rdfs:subClassOf entailments. One could call this a sort of victory for the "Semantic Web" approach.

So should Cam win the esteemed prize [3] for meeting the challenge? One could say that easily associating species' names with reported geocoordinates and the conservation status of the species is a "learned" thing that wouldn't be obvious to a human. It's cool that this was "learned" using RDF data from different "silos" (although one could also probably find out the same information from a single data provider like GBIF that uses conventional database tools). It is also possible that Cam's first query could be hacked a bit to satisfy some of the other questions Rod asked in his blog ("what is the conservation status of frogs sequenced in Genbank?, is there correlation between the conservation status of a frog and the date it was discovered?, who has described the most frog species?, etc."). Perhaps we should give Cam a thumbs-up for the prize based on this query. The second query resulted in inferences, but I'm not sure they would qualify as "interesting" since the subclass relationships it materializes can be seen by going to the webpage that is displayed when http://www.uniprot.org/taxonomy/651673 is dereferenced in a browser and clicking on the "All lower taxonomy nodes" link. So I probably would give a thumbs down on that one and call it a rather hollow victory for the Semantic Web. I suppose Rod should be the ultimate judge on whether to award Cam the prize, but I'm not aware that Rod ever made a judgment about Cam's entry.

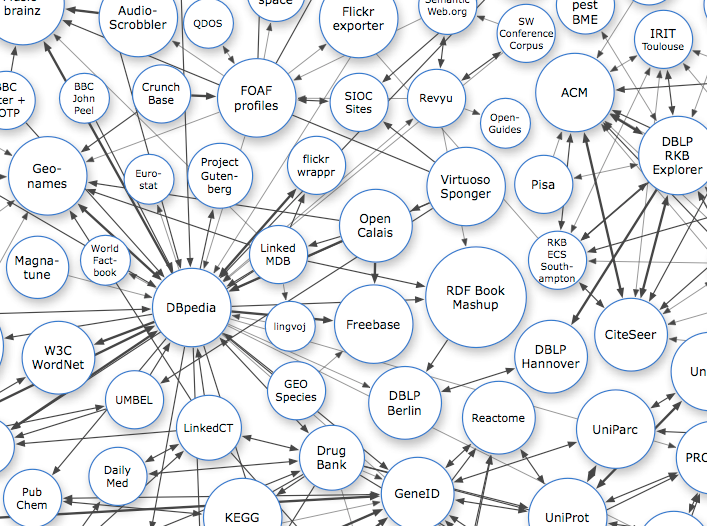

Image from http://linkeddata.org/ CC By-SA

A hollow victory for Linked Data?

A key point of Rod's "Challenge" blog post was:

My contention is that actually we can't do any of this because the data is siloed due to the lack of shared identifiers and vocabularies (I suspect that there is not a single identifier any of these files share). The only way we can currently link these data sets together is by shared string literals (e.g., taxonomic names), in which case why bother with RDF?

In Cam's first query, the "glue" that linked the UniProt data to the GenBank data was the triple pattern:

?gb dcterms:subject ?sp .

which was satisfied because the bio2rdf.org RDF descriptions contained triples like:

http://bio2rdf.org/genbank:EU566842 dcterms:subject <http://purl.uniprot.org/taxonomy/8354>.

I'm not sure how that relationship was established - the GenBank record for EU566842 itself doesn't seem to contain any link to the UniProt IRI. Does bio2rdf use string matching to make this link?

In Cam's first query, the "glue" that linked the UniProt data to Wikipedia was the triple pattern:

?sp rdfs:seeAlso ?dbp .

which was satisfied because of triples like:

<http://purl.uniprot.org/taxonomy/888540> rdfs:seeAlso <http://dbpedia.org/resource/Telmatobius_yuracare>.

That particular set of triples was actually generated by Rod himself in the linkout.rdf file that he provided to give a boost to the challenge. The file was generated based on mapping in "iPhilo LinkOut", a project described in http://dx.doi.org/10.1371/currents.RRN1228 . Examination of the iPhilo methods gives: "The initial mapping was constructed by extracting the scientific name of the taxon that was the topic of each Wikipedia page, then finding a match for this in the NCBI taxonomy database." Aaaargh! Linked Data fails here - it's really all just string matching.

"male" = "M" = "M." = "macho" = "M +" = ... ?

What's so bad about string matching?

In my last blog post, I described datatype properties (properties having string literals as their values) as a sort of "dead end". This is because currently, you aren't allowed to use literals as the subjects of triples. So if a machine encountered the triple:

http://bioimages.vanderbilt.edu/ind-baskauf/14750#2002-08-05baskauf dwc:genus "Argemone".

it could not try to "learn" more about the properties of the literal "Argemone" by dereferencing it (it's not a IRI), nor through a SPARQL query like:

DESCRIBE "Argemone" WHERE {

"Argemone" ?predicate ?object.

}

(literals are not allowed in the subject position of an RDF triple).

To some extent, you could get around this by setting up SPARQL queries where two triple patterns share a common literal object, like this:

?resource1 dwc:genus ?genus.

?resource2 ?property ?genus.

where ?genus could have a value of a literal like "Argemone". If ?resource1 were http://bioimages.vanderbilt.edu/ind-baskauf/14750#2002-08-05baskauf, I guess a machine could investigate ?resource2 and ?property and possibly "learn" something about "Argemone". However, this method depends on the literal that represents a certain resource having a consistent controlled value.

Let's suppose I'm interested in finding pairs of organisms that were established in a location by the same means. Darwin Core provides the term dwc:establishmentMeans (a literal-value property) for providing this kind of information.

I could run this query:

SELECT ?organism1 ?organism2 WHERE {

?organism1 dwc:establishmentMeans ?means.

?organism2 dwc:establishmentMeans ?means.

}

The query results would contain pairs of organisms that shared the same means of establishment. But what if organism1 has a dwc:establishmentMeans value of "naturalized" and organism2 has a dwc:establishmentMeans value of "naturalised"? No match. One could simply chalk this up to failure to use a consistent controlled vocabulary, but these kinds of things happen a lot even with what would seem like "simple" controlled vocabularies [4]. One could also argue that a similar misspelling could happen with a IRI like:

http://rs.gbif.org/vocabulary/gbif/establishment_means/naturalised

However, if users bother to find and reuse an appropriate IRI, they probably will go to the effort to make sure that they get it right (or acquire the IRI values using software rather than typing the value out of their head).

The other issue with using literal values to link resources is that you really don't need RDF to do it. Google's algorithms for linking things using strings are probably better than any literal-matching SPARQL queries that most people could come up with.

Agnostic to Believer???

"If there is something you know, communicate it. If there is something you don't know, search for it." — An engraving from the 1772 edition of the Encyclopédie; Truth, in the top center, is surrounded by light and unveiled by the figures to the right, Philosophy and Reason.

Image from an engraving by Benoît Louis Prévost, 1804. Public domain. Source: https://commons.wikimedia.org/wiki/File:Encyclopedie_frontispice_full.jpg

At the close of my previous blog post, in addition to revisiting the Rod Page Challenge, I also promised to talk about what it would take to turn me from an RDF Agnostic into an RDF Believer. I will recap the main points about what I think it will take in order for the Rod Page Challenge to REALLY be met (i.e. for machines to make interesting inferences and provide humans with information about biodiversity that would not be obvious otherwise):

1. Resource descriptions in RDF need to be rich in triples containing object properties that link to other IRI-identified resources.

2. "Discovery" of IRI-identified resources is more likely to lead to interesting information when the linked IRIs are from Internet domains controlled by different providers.

3. Materialized entailed triples do not necessarily lead to "learning" useful things. Materialized entailed triples are useful if they allow the construction of more clever or meaningful queries, or if they state relationships that would not be obvious to humans.

In the introduction to this post, I said that last year when I finished the previous blog post, I was pessimistic about the prospects for doing anything useful with RDF in the context of biodiversity informatics. One of the primary reasons that I was pessimistic was because the TDWG process for moving the Darwin Core (DwC) standard seemed to be hopelessly stuck. However, in October the confusion about the duplicate DwC classes was cleared up and the long-awaited (by me at least) dwc:Organism class was accepted. These changes made it possible to stabilize Darwin-SW, which is designed to facilitate object-property links between instances of all of the core classes of Darwin Core. Then in March, the DwC RDF Guide was officially added to the standard, making it possible for the first time to unambiguously create linkages to either literal or IRI-identified object resources using Darwin Core terms. The completion of the DwC RDF Guide and Darwin-SW makes it now possible (in my opinion) to satisfy point number 1 above.

Making it possible to create rich, object-property linkages doesn't necessarily mean that people will actually DO it. Point number 2 isn't going to happen until the biodiversity community settles on some mechanism for generating, exposing, and reusing IRI identifiers, preferably ones that dereference to return consistent RDF. This is a difficult task because it requires consensus-building and self-discipline on the part of data providers to reuse identifiers instead of constantly issuing new ones (and subsequently leading to triple "bloat"). So I'm still relatively pessimistic on that point.

On a small scale, completion of the DwC RDF Guide, stabilization of Darwin-SW, and the adoption of Audubon Core has made it possible for me to generate stable and standardized RDF representations of organisms and images in the Bioimages database. Bioimages organism and image IRIs dereference to RDF/XML (e.g. http://bioimages.vanderbilt.edu/vanderbilt/7-314.rdf) and all 1488578 triples in the database can be downloaded (file: bioimages-rdf.zip, 3.7 Mb; http://dx.doi.org/10.5281/zenodo.21009) from the Bioimages GitHub repo (https://github.com/baskaufs/Bioimages). A summer Heard Library Dean's Fellowship has made it possible for Suellen String-Hye and me to work with Sean King on setting up a triplestore and SPARQL endpoint that allows the Bioimages RDF data to be queried. The Callimachus-based endpoint is now live at http://rdf.library.vanderbilt.edu/sparql and loaded with the Bioimages triples. So the stage is set for experimenting to find out what kinds of SPARQL-based reasoning is practical with a real 1.7 M triple RDF dataset. After that experimentation, I'll know a little better how I feel about what is realistic to expect for point number 3.

So overall, I've moved incrementally along the line from RDF agnostic towards RDF believer. For me to become a true believer, we'll need to come up with datasets thousands of times larger than the Bioimages dataset, and to successfully conduct on those data reasoning that is computable in a reasonable amount of time and that results in materialized triples that are actually novel and interesting. That's a pretty tall order.

With that conclusion, I'll draw this series of blog posts to a close. I'm planning to start on some posts to report what I've learned from experimenting with the Bioimages triples via the new endpoint. So stay tuned...

[1] Rod has posted the challenge data at https://github.com/rdmpage/tdwg-challenge-rdf and loaded it into an endpoint if you want to play with it.

[2] See also Rod's followup blog post http://iphylo.blogspot.co.uk/2011/10/reflections-on-tdwg-rdf.html in which Rod posts a SPARQL query and shows a visualization of the frog data created by Olivier Rovellotti.

[3] An announcement by Rod in his blog (http://iphylo.blogspot.com/) that the challenge entry sucked.

[4] See https://soyouthinkyoucandigitize.wordpress.com/2013/07/18/data-diversity-of-the-week-sex/ for an interesting example.

[5] In the event that Google Code is gone when you read this, Cam's query was:

PREFIX rdfs: <http://www.w3.org/2000/01/rdf-schema#> PREFIX uni: <http://purl.uniprot.org/core/> PREFIX dcterms: <http://purl.org/dc/terms/> PREFIX dbp: <http://dbpedia.org/ontology/> PREFIX geo: <http://www.w3.org/2003/01/geo/wgs84_pos#> CONSTRUCT { ?gb dcterms:subject ?sp . ?sp uni:scientificName ?sn . ?sp rdfs:seeAlso ?dbp . ?dbp dbp:conservationStatus ?cons . ?gb dcterms:relation _:occ . _:occ geo:long ?long . _:occ geo:lat ?lat . } WHERE { # Where name is Bufo and rank is genus ?gen uni:scientificName "Bufo" . ?gen uni:rank uni:Genus . # go down two subclasses, check it's a species ?sect rdfs:subClassOf ?gen . ?sp rdfs:subClassOf ?sect . ?sp uni:rank uni:Species . ?sp uni:scientificName ?sn . # link to genbank and find those with occurrences ?gb dcterms:subject ?sp . ?gb dcterms:relation [ geo:long ?long ; geo:lat ?lat ] . # optionally, link to DBpedia and get the conservation status OPTIONAL { ?sp rdfs:seeAlso ?dbp . ?dbp dbp:conservationStatus ?cons . }

The second query was:

PREFIX rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#> PREFIX rdfs: <http://www.w3.org/2000/01/rdf-schema#> PREFIX uni: <http://purl.uniprot.org/core/> SELECT ?n WHERE { ?s uni:scientificName ?n . ?s rdfs:subClassOf <http://purl.uniprot.org/taxonomy/651673> . }

However, reasoning of the entailed rdfs:subClassOf relationships was done automatically by a reasoner built in to Cam's triplestore, so if you run the query on a conventional SPARQL endpoint, you can't see the effect of reasoning caused by the materializing of additional entailed rdfs:subClassOf triples.

Hi Steve, interesting read, prompted me to dig out the data and archive it properly (as you've noted). I've also made a few comments of the "RDF is dead, blah, blah" variety on iPhylo http://iphylo.blogspot.co.uk/2015/07/steve-baskauf-on-rdf-and-page-challenge.html

ReplyDeleteYeah, thanks Rod - I enjoyed the post. The "JSON-LD and Why I hate the Semantic Web" blog post that you cited has been very influential on my thinking about Linked Data and how we need to be finding out what actually works rather than sticking with dogma. You also inspired me to generate a DOI for the Bioimages data release!

ReplyDeleteHi Steve, I just wanted to point you to our recent paper on machine reasoning over morphology. It's somewhat different from specimen records, but in the realm of biodiversity: http://dx.doi.org/10.1093/sysbio/syv031

ReplyDeleteCool, thanks for the link!

ReplyDelete