1. In order for the use of RDF to be beneficial, it needs to facilitate processing by semantic clients ("machines") that results in interesting inferences and information that would not otherwise be obvious to human users. We could call this the "non-triviality" problem.

2. Retrieving information that isn't obvious is more likely to happen if the RDF is rich in object properties [1] that link to other IRI-identified resources and if those linked IRIs are from Internet domains controlled by different providers. We could call this the "Linked Data" problem: we need to break down the silos that separate the datasets of different providers. We could reframe by saying that the problem to be solved is the lack of persistent, unique identifiers, lack of consensus object properties, and lack of the will for people to use and reuse those identifiers and properties.

3. RDF-enabled machine reasoning will be beneficial if the entailed triples allow the construction of more clever or meaningful queries, or if they state relationships that would not be obvious to humans. We could call this the "Semantic Web" problem.

In that second post, I used the "shiny new toys" (Darwin-SW 0.4, the Bioimages RDF dataset, and the Heard Library Public SPARQL endpoint) to play around with the Semantic Web issue by using SPARQL to materialize entailed inverse relationships. In this post, I'm going to play around with the Linked Data issue by trying to add an RDF dataset from a different provider to the Heard Library triplestore and see what we can do with it.

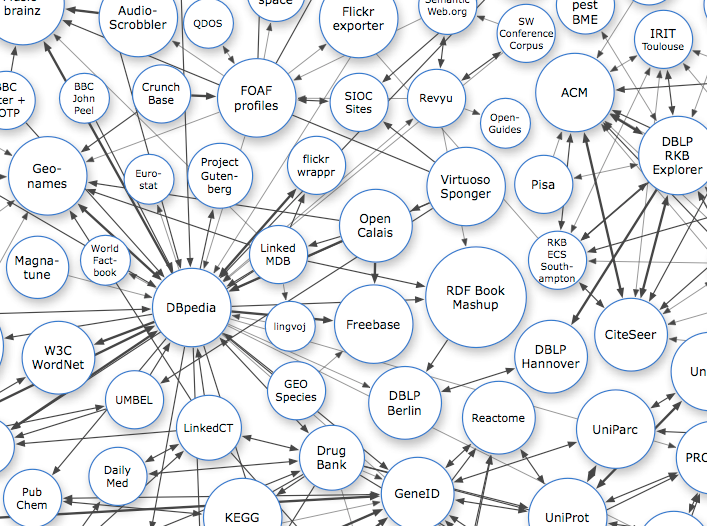

Image from http://linkeddata.org/ CC BY-SA

Linked Data

I'm not going to attempt to review the philosophy and intricacies of Linked Data. Both http://linkeddata.org/ and the W3C have introductions to Linked Data. To summarize, here's what I think of when I hear the term "Linked Data":

1. Things (known as "resources" in RDF lingo) are identified by HTTP IRIs.

2. Those things are described by some kind of machine-processable data.

3. Those data link the thing to other things that are also identified by HTTP IRIs.

4. If a machine doesn't know about some other linked thing, it can dereference that thing's HTTP IRI in order to retrieve the machine-processable data about the other thing via the Internet.

This may be an oversimplification that is short on technical details, but I think it catches the main points.

Some notes:

1. IRIs are a superset of URIs. See https://www.ietf.org/rfc/rfc3987.txt for details. In this post, you could pretty much substitute "URI" anytime I use "IRI" if you wanted to.

2. "Machine-processable data" might mean that the data are described using RDF, but that isn't a requirement - there are other ways that data can be described and encoded, including Microdata and JSON-LD, which are similar to, but not synonymous with, RDF.

3. It is possible to link to other things without using RDF triples. For example, in its head element, an HTML web page can have a link tag with a rel="meta" attribute that links to some other document. It is possible that a machine would be able to "follow its nose" to find other information using that link.

4. RDF does not require that the things be identified by IRIs that begin with "http://" (i.e. HTTP IRIs). But if they aren't, it becomes difficult to use HTTP and the Internet to retrieve the information about a linked thing.

In this blog post, I'm going to narrow the scope of Linked Data as it is applied in my new toys:

1. All IRIs are HTTP IRIs.

2. Resources are described as RDF.

3. Links are made via RDF triples.

4. The linked RDF is serialized within some document that can be retrieved via HTTP using the linked IRI.

What "external" links are included in the Bioimages RDF dataset?

In the previous two blog posts in this series, all of the SPARQL queries investigated resources whose descriptions were contained in the Bioimages dataset itself. However, the Bioimages dataset contains links to some resources that are described outside of that dataset:

- Places that are described by GeoNames.

- Taxonomic names that are described by uBio.

- Agents that are described by ORCID.

- Literature identified by DOIs that link to publisher descriptions.

In principle, a Linked Data client ("machine", a program designed to find, load, and interpret machine-processable data) could start with the Bioimages VoID description and follow links from there to discover and add to the triplestore all of the linked information, including information about the four kinds of resources described outside of Bioimages. Although that would be cool, it probably isn't practical for us to take that approach at present. Instead, I would like to find a way to acquire relevant triples from a particular external provider, then add them to the triplestore manually.

The least complicated of the external data sources [1] to experiment with is probably GeoNames. GeoNames actually provides a data dump download service that can be used to download its entire dataset. Unfortunately, the form of that dump isn't RDF, so it would have to be converted to RDF. Its dataset also includes a very large number of records (10 million geographical names, although subsets can be downloaded). So I decided it would probably be more practical to just retrieve the RDF about particular geographic features that are linked from Bioimages.

Building a homemade Linked Data client

In the do-it-yourself spirit of this blog series, I decided to program my own primitive Linked Data client. As a summer father/daughter project, we've been teaching ourselves Python, so I decided this would be a good Python programming exercise. There are two reasons why I like using Python vs. other programming languages I've used in the past. One is that it's very easy to test your code interactively as you build it. The other is that somebody has probably already written a library that contains most of the functions that you need. After a minuscule amount of time Googling, I found the RDFLib library, which provides functions for most common RDF-related tasks. I wanted to leverage the new Heard Library SPARQL endpoint, so I planned to use XML search results from the endpoint as a way to find the IRIs that I want to pull from GeoNames. Fortunately, Python has built-in functions for handling XML as well.

To scope out the size of the task, I created a SPARQL query that would retrieve the GeoNames IRIs to which Bioimages links. The Darwin Core RDF Guide defines the term dwciri:inDescribedPlace that relates Location instances to geographic places. In Bioimages, each of the Location instances associated with Organism Occurrences is linked to GeoNames features using dwciri:inDescribedPlace. As in the earlier blog posts of this series, I'm providing a template SPARQL query that can be pasted into the blank box of the Heard Library endpoint:

PREFIX foaf: <http://xmlns.com/foaf/0.1/>

PREFIX ac: <http://rs.tdwg.org/ac/terms/>

PREFIX dwc: <http://rs.tdwg.org/dwc/terms/>

PREFIX dwciri: <http://rs.tdwg.org/dwc/iri/>

PREFIX dsw: <http://purl.org/dsw/>

PREFIX Iptc4xmpExt: <http://iptc.org/std/Iptc4xmpExt/2008-02-29/>

PREFIX rdfs: <http://www.w3.org/2000/01/rdf-schema#>

PREFIX dc: <http://purl.org/dc/elements/1.1/>

PREFIX dcterms: <http://purl.org/dc/terms/>

PREFIX dcmitype: <http://purl.org/dc/dcmitype/>

SELECT DISTINCT ?place

FROM <http://rdf.library.vanderbilt.edu/bioimages/images.rdf>

FROM <http://rdf.library.vanderbilt.edu/bioimages/organisms.rdf>

FROM <http://rdf.library.vanderbilt.edu/bioimages/baskauf.rdf>

FROM <http://rdf.library.vanderbilt.edu/bioimages/thomas.rdf>

FROM <http://rdf.library.vanderbilt.edu/bioimages/uri.rdf>

FROM <http://rdf.library.vanderbilt.edu/bioimages/kirchoff.rdf>

FROM <http://rdf.library.vanderbilt.edu/bioimages/ncu-all.rdf>

FROM <http://rdf.library.vanderbilt.edu/bioimages/dsw.owl>

FROM <http://rdf.library.vanderbilt.edu/bioimages/dwcterms.rdf>

FROM <http://rdf.library.vanderbilt.edu/bioimages/local.rdf>

FROM <http://rdf.library.vanderbilt.edu/bioimages/stdview.rdf>

WHERE {

?location a dcterms:Location.

?location dwciri:inDescribedPlace ?place.

}

You can substitute other query forms in place of the SELECT DISTINCT line and other graph patterns for the WHERE clause in this template.

The graph pattern in the example above binds Location instances to a variable and then finds GeoNames features that are linked using dwciri:inDescribedPlace. Running this query finds the 185 unique references to GeoNames IRIs that fit the graph pattern. If you run the query using the Heard Library endpoint web interface, you will see a list of the IRIs. If you use a utility like cURL to run the query, you can save the results in an XML file like https://github.com/jbaskauf/python/blob/master/results.xml (which I've called "results.xml" in the Python script).
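If you would rather stay in Python than use cURL, the request can be sketched with the standard library. This is only a minimal sketch, assuming the endpoint accepts a standard SPARQL Protocol GET request with a `query` parameter. The address `http://example.org/sparql` is a placeholder rather than the real Heard Library endpoint URL, the function names are my own, and the query is abbreviated (paste in the full template, FROM clauses included, when running it for real).

```python
import urllib.parse
import urllib.request

# Placeholder endpoint address (an assumption); substitute the real one.
ENDPOINT = "http://example.org/sparql"

# Abbreviated version of the template query (FROM clauses omitted here).
QUERY = """
PREFIX dcterms: <http://purl.org/dc/terms/>
PREFIX dwciri: <http://rs.tdwg.org/dwc/iri/>
SELECT DISTINCT ?place
WHERE {
  ?location a dcterms:Location.
  ?location dwciri:inDescribedPlace ?place.
}
"""

def build_query_request(endpoint, query):
    """Build a GET request that asks for SPARQL results in XML."""
    url = endpoint + "?" + urllib.parse.urlencode({"query": query})
    return urllib.request.Request(
        url, headers={"Accept": "application/sparql-results+xml"}
    )

def save_results(endpoint, query, filename="results.xml"):
    """Run the query over the network and save the raw XML response."""
    request = build_query_request(endpoint, query)
    with urllib.request.urlopen(request) as response:
        with open(filename, "wb") as out:
            out.write(response.read())
```

Calling `save_results(ENDPOINT, QUERY)` with a real endpoint address should leave a "results.xml" file in the working directory, ready for the parsing step.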

Now we have the raw materials to build the application. Here's the code required to parse the results XML from the file into a Python object:

import xml.etree.ElementTree as etree

tree = etree.parse('results.xml')

A particular result XML element in the file looks like this:

<result>

<binding name='place'>

<uri>http://sws.geonames.org/4617305/</uri>

</binding>

</result>

To pull out all of the <uri> nodes, I used:

resultsArray=tree.findall('.//{http://www.w3.org/2005/sparql-results#}uri')

and to turn a particular <uri> node into a string, I used:

baseUri=resultsArray[fileIndex].text
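To try the namespace-qualified search without having results.xml on hand, here is a small self-contained sketch. The sample document is a cut-down one I wrote by hand in the SPARQL results XML format (an assumption about what the real file looks like beyond the single result element shown above), and `extract_uris` is a hypothetical helper name.

```python
import xml.etree.ElementTree as etree

# Hand-written sample in the SPARQL Query Results XML Format.
# Both IRIs are GeoNames feature IRIs that appear in this post.
SAMPLE = """<sparql xmlns="http://www.w3.org/2005/sparql-results#">
  <head><variable name="place"/></head>
  <results>
    <result>
      <binding name="place"><uri>http://sws.geonames.org/4617305/</uri></binding>
    </result>
    <result>
      <binding name="place"><uri>http://sws.geonames.org/5417618/</uri></binding>
    </result>
  </results>
</sparql>"""

# ElementTree spells a namespaced tag as {namespace}localname.
SPARQL_NS = "{http://www.w3.org/2005/sparql-results#}"

def extract_uris(xml_text):
    """Return the text of every <uri> element, in document order."""
    root = etree.fromstring(xml_text)
    return [node.text for node in root.findall(".//" + SPARQL_NS + "uri")]

place_iris = extract_uris(SAMPLE)
print(place_iris)
```

Searching for a plain `uri` tag would find nothing, because every element in the results document lives in the SPARQL results namespace.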

In theory, this string (baseUri) is the GeoNames feature IRI that I would need to dereference to acquire the RDF about that feature. But content negotiation redirects the IRI that identifies the abstract feature to a similar IRI that ends in "about.rdf" and identifies the actual RDF/XML document file that is about the GeoNames feature. If I were programming a real semantic client, I'd have to be able to handle this kind of redirection and recognize HTTP response codes like 303 (See Other). But I'm a Python newbie and I don't have a lot of time to spend on the program, so I hacked it to generate the file IRI like this:

getUri=baseUri+"about.rdf"
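For comparison, a less hacky approach might look something like the sketch below, which uses only the standard library. It assumes the server honors an Accept header of "application/rdf+xml" and answers with a redirect to the document; urllib follows redirects such as 303 by default. The function names are my own and I have not tuned this against GeoNames.

```python
import urllib.request

def make_rdf_request(resource_iri):
    """Build a request for the resource IRI itself, asking for RDF/XML."""
    return urllib.request.Request(
        resource_iri, headers={"Accept": "application/rdf+xml"}
    )

def dereference(resource_iri):
    """Fetch RDF about a resource; return (document IRI, raw bytes).

    urlopen follows redirects (303 included) by default, so geturl()
    reports the IRI of the document that was finally served.
    """
    with urllib.request.urlopen(make_rdf_request(resource_iri)) as response:
        return response.geturl(), response.read()
```

With this approach the client never needs to know that the document IRI ends in "about.rdf"; it asks for the feature IRI and lets the server say where the description lives.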

RDFLib is so awesome - it has a single function that will make the HTTP request, receive the file from the server, and parse it:

result = addedGraph.parse(getUri)

It turns out that the Bioimages data has a bad link to a non-existent IRI:

http://sws.geonames.org/8504608/about.rdf

so I put the RDF parse code inside a try:/except:/else: error trap so that the program wouldn't crash if the server HTTP response was something other than 200.

In RDFLib it is also super-easy to do a UNION merge of two graphs. I merge the graph I just retrieved from GeoNames into the graph where I'm accumulating triples by:

builtGraph = builtGraph + addedGraph
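The reason a union merge is safe to repeat is that an RDF graph is a set of triples, so a triple that is already present is not stored twice. Here is a minimal sketch of that idea. Note that it models graphs as plain Python sets of tuples, which is my stand-in for rdflib.Graph so that the example runs without RDFLib installed; it is not how RDFLib is implemented.

```python
# Model an RDF graph as a set of (subject, predicate, object) tuples.
# This stands in for rdflib.Graph purely for illustration.
GN = "http://www.geonames.org/ontology#"
colorado = "http://sws.geonames.org/5417618/"

built_graph = {
    (colorado, GN + "name", "Colorado"),
}
added_graph = {
    (colorado, GN + "name", "Colorado"),    # already in built_graph
    (colorado, GN + "countryCode", "US"),   # new triple
}

# Set union plays the role of builtGraph + addedGraph.
built_graph = built_graph | added_graph
print(len(built_graph))  # 2: the duplicate triple is kept only once

# Merging the same data a second time changes nothing.
assert built_graph | added_graph == built_graph
```

This also explains why it does no harm if the graph being merged in still holds triples from earlier retrievals: they are simply merged again with no effect.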

When I'm done merging all of the data that I've retrieved from GeoNames, I serialize the graph I've built into RDF/XML and save it in the file "geonames.rdf":

s = builtGraph.serialize(destination='geonames.rdf', format='xml')

Here's what the whole script looks like:

import rdflib

import xml.etree.ElementTree as etree

tree = etree.parse('results.xml')

resultsArray=tree.findall('.//{http://www.w3.org/2005/sparql-results#}uri')

builtGraph=rdflib.Graph()

addedGraph=rdflib.Graph()

fileIndex=0

while fileIndex<len(resultsArray):

    print(fileIndex)

    baseUri=resultsArray[fileIndex].text

    getUri=baseUri+"about.rdf"

    try:

        result = addedGraph.parse(getUri)

    except:

        print(getUri)

    else:

        builtGraph = builtGraph + addedGraph

    fileIndex=fileIndex+1

s = builtGraph.serialize(destination='geonames.rdf', format='xml')

Voilà! A Linked Data client in 19 lines of code! You can get the raw code with extra annotations at

https://raw.githubusercontent.com/jbaskauf/python/master/geonamesTest.py.

The results in the "geonames.rdf" file are serialized in the typical, painful RDF/XML syntax:

<?xml version="1.0" encoding="UTF-8"?>

<rdf:RDF

xmlns:cc="http://creativecommons.org/ns#"

xmlns:dcterms="http://purl.org/dc/terms/"

xmlns:foaf="http://xmlns.com/foaf/0.1/"

xmlns:gn="http://www.geonames.org/ontology#"

xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#"

xmlns:rdfs="http://www.w3.org/2000/01/rdf-schema#"

xmlns:wgs84_pos="http://www.w3.org/2003/01/geo/wgs84_pos#"

>

<rdf:Description rdf:about="http://sws.geonames.org/5417618/">

<gn:alternateName xml:lang="bpy">কলোরাডো</gn:alternateName>

<gn:alternateName xml:lang="eo">Kolorado</gn:alternateName>

<gn:countryCode>US</gn:countryCode>

<gn:alternateName xml:lang="mk">Колорадо</gn:alternateName>

<gn:alternateName xml:lang="he">קולורדו</gn:alternateName>

<gn:parentCountry rdf:resource="http://sws.geonames.org/6252001/"/>

<gn:name>Colorado</gn:name>

...

</rdf:Description>

...

</rdf:RDF>

but that's OK, because only a machine will be reading it. You can see that the data I've pulled from GeoNames provides me with information that didn't already exist in the Bioimages dataset, such as how to write "Colorado" in Cyrillic and Hebrew.
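To make that concrete, here is a rough sketch that pulls the alternate names for one language out of a fragment like the one above. It treats the RDF/XML as ordinary XML, which is an assumption that only holds for this flat style of serialization; a real client would load the file into a graph and query it. The fragment is trimmed from the sample above, and `alternate_names` is a hypothetical helper name.

```python
import xml.etree.ElementTree as etree

# Trimmed copy of the Colorado description shown above.
SAMPLE_RDF = """<rdf:RDF
    xmlns:gn="http://www.geonames.org/ontology#"
    xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#">
  <rdf:Description rdf:about="http://sws.geonames.org/5417618/">
    <gn:alternateName xml:lang="eo">Kolorado</gn:alternateName>
    <gn:alternateName xml:lang="mk">Колорадо</gn:alternateName>
    <gn:name>Colorado</gn:name>
  </rdf:Description>
</rdf:RDF>"""

GN = "{http://www.geonames.org/ontology#}"
# ElementTree expands the built-in xml: prefix to this namespace.
XML_LANG = "{http://www.w3.org/XML/1998/namespace}lang"

def alternate_names(rdf_xml, lang):
    """Return the gn:alternateName values carrying the given language tag."""
    root = etree.fromstring(rdf_xml)
    return [
        element.text
        for element in root.iter(GN + "alternateName")
        if element.get(XML_LANG) == lang
    ]

print(alternate_names(SAMPLE_RDF, "mk"))
```

The language tag on each literal is what makes this selection possible, and it is the same tag that a SPARQL lang() filter tests.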

Ursus americanus occurrence in Parque Nacional Yellowstone in 1971: http://www.gbif.org/occurrence/930741907 Image (c) 2005 by James H. Bassett CC BY-NC-SA http://bioimages.vanderbilt.edu/bassettjh/jb261

Doing something fun with the new data

As exciting as it is to have built my own Linked Data client, it would be even more fun to use the scraped data to run some more interesting queries. The geonames.rdf triples have been added to the Heard Library triplestore and can be included in queries by adding the

FROM <http://rdf.library.vanderbilt.edu/bioimages/geonames.rdf>

clause to the template set I listed earlier and the gn: namespace abbreviation to the list:

PREFIX gn: <http://www.geonames.org/ontology#>

-----------------------------------------

OK, here is the first fun query. Find 20 GeoNames places that are linked to Locations in the Bioimages dataset, and show their English names along with their names in Japanese. By looking at the RDF snippet above, you can see that the property gn:name is used to link the feature to its preferred (English?) name. The property gn:alternateName is used to link the feature to alternative names in other languages. So the triple patterns

?place gn:name ?placeName.

?place gn:alternateName ?langTagName.

can be added to the template query to find the linked names. However, we don't want ALL of the alternative names, just the ones in Japanese. For that, we need to add the filter

FILTER ( lang(?langTagName) = "ja" )

The whole query would be

SELECT DISTINCT ?placeName ?langTagName

WHERE {

?location a dcterms:Location.

?location dwciri:inDescribedPlace ?place.

?place gn:name ?placeName.

?place gn:alternateName ?langTagName.

FILTER ( lang(?langTagName) = "ja" )

}

LIMIT 20

Here are sample results:

| placeName | langTagName |

|---|---|

| Hawaii | ハワイ州@ja |

| Great Smoky Mountains National Park | グレート・スモーキー山脈国立公園@ja |

| Yellowstone National Park | イエローストーン国立公園@ja |

Other fun language tags to try include "ka", "bpy", and "ar".

---------------------------------------

Here is the second fun query. List species that are represented in the Bioimages database that are found in "Parque Nacional Yellowstone" (the Spanish name for Yellowstone National Park). Here's the query:

SELECT DISTINCT ?genus ?species

WHERE {

?determination dwc:genus ?genus.

?determination dwc:specificEpithet ?species.

?organism dsw:hasIdentification ?determination.

?organism dsw:hasOccurrence ?occurrence.

?occurrence dsw:atEvent ?event.

?event dsw:locatedAt ?location.

?location dwciri:inDescribedPlace ?place.

?place gn:alternateName "Parque Nacional Yellowstone"@es.

}

Most of this query could have been done using only the Bioimages dataset without our Linked Data effort, but there is nothing in the Bioimages data that provides any information about place names in Spanish. Querying on that basis required triples from GeoNames. The results are:

| genus | species |

|---|---|

| Pinus | albicaulis |

| Ursus | americanus |

--------------------------------

Adding the GeoNames data to Bioimages enables more than alternative language representations for place names. Each feature is linked to its parent administrative feature (counties to states, states to countries, etc.), whose data could also be retrieved from GeoNames to build a geographic taxonomy that could be used to write smarter queries. Many of the geographic features are also linked to Wikipedia articles, so queries could be used to build web pages that showed images of organisms found in certain counties, along with a link to the Wikipedia article about the county.

Possible improvements to the homemade client

- Let the software make the query to the SPARQL endpoint itself instead of providing the downloaded file to the script. This is easily possible with the RDFLib Python library.

- Facilitate content negotiation by requesting an RDF content-type, then handle 303 redirects to the file containing the metadata. This would allow the actual resource IRI to be dereferenced without jury-rigging based on an ad hoc addition of "about.rdf" to the resource IRI.

- Search recursively for parent geographical features. If the retrieved file links to a parent resource that hasn't already been retrieved, retrieve the file about it, too. Keep doing that until there aren't any higher levels to be retrieved.

- Check with the SPARQL endpoint to find Location records that have changed (or are new) since some previous time, and retrieve only the features linked in that record. Check the existing GeoNames data to make sure the record hasn't already been retrieved.
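The recursive search for parent features could be sketched roughly as follows. This is a sketch under assumptions, not a finished client: `get_parents` is a hypothetical caller-supplied function that would retrieve a feature's RDF and return the IRIs of its parents (for example, the value of gn:parentCountry), and here it is replaced by a hand-written lookup table so that the example runs without the network.

```python
from collections import deque

def collect_with_parents(start_iris, get_parents):
    """Return every IRI reachable from start_iris via parent links.

    Each IRI is visited once, in the order it is first reached.
    """
    seen = set()
    queue = deque(start_iris)
    order = []
    while queue:
        iri = queue.popleft()
        if iri in seen:
            continue  # already retrieved, so skip the duplicate
        seen.add(iri)
        order.append(iri)
        queue.extend(get_parents(iri))
    return order

# Stand-in for network retrieval: a hand-written table of parent links.
# Only the Colorado -> 6252001 link comes from the sample data in this
# post; the example.org county IRI is made up.
FAKE_PARENTS = {
    "http://example.org/county/": ["http://sws.geonames.org/5417618/"],
    "http://sws.geonames.org/5417618/": ["http://sws.geonames.org/6252001/"],
}

def fake_get_parents(iri):
    return FAKE_PARENTS.get(iri, [])

visited = collect_with_parents(
    ["http://example.org/county/", "http://sws.geonames.org/5417618/"],
    fake_get_parents,
)
print(visited)
```

The `seen` set covers the "hasn't already been retrieved" check from the list above, so Colorado is fetched only once even though it is both a starting point and a parent.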

So can we "learn" something using RDF?

Returning to the question posed at the beginning of this post, does retrieving data from GeoNames and pooling it with the Bioimages data address the "non-triviality problem"? In this example, it does provide useful information that wouldn't be obvious to human users (names in multiple languages). Does this qualify as "interesting"? Maybe not, since the translation could be obtained by pasting names into Google Translate. But it is more convenient to let a computer do it.

To some extent, the "Linked Data problem" is solved in this case since there is now a standard, well-known property to do the linking (dwciri:inDescribedPlace) and a stable set of external IRI identifiers (the GeoNames IRIs) to link to. The will to do the linking was also there on the part of Bioimages and its image providers - the Bioimages image ingestion software we are currently testing makes it easier for users to make that link.

So on the basis of this example, I am going to give a definite "maybe" to the question "can we learn something useful using RDF".

I may write another post in this series if I can pull RDF data from some of the other RDF data sources (ORCID, uBio) and do something interesting with them.

--------------------------------------

[1] uBio doesn't actually use HTTP URIs, I can't get ORCID URIs to return RDF, and DOIs use redirection to a number of data providers.

It appears that ORCID is serving RDF, at least experimentally. If an ORCID ID is dereferenced using Accept: header "text/turtle" or "application/rdf+xml", RDF is returned via a temporary 307 redirect (vs. the preferred 303). I don't know how stable this is - there is a suggestion that the actual person have the IRI "http://orcid.org/0000-0003-0782-2704#person" vs. "http://orcid.org/0000-0003-0782-2704" as the RDF currently stands. See http://www.w3.org/2015/03/orcid-semantics for more.
